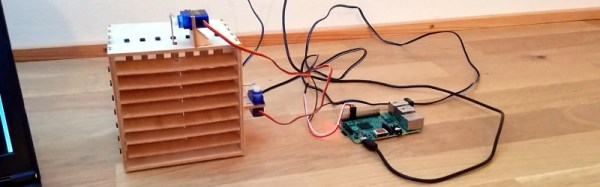

The usefulness of Raspberry Pis seems almost limitless, with new applications being introduced daily and with no end in sight. But, as versatile as they are, it’s no secret that Raspberry Pis are still lacking in pure processing power. So, some serious optimization is needed to squeeze as much power out of the Raspberry Pi as possible when you’re working on processor-intensive projects.

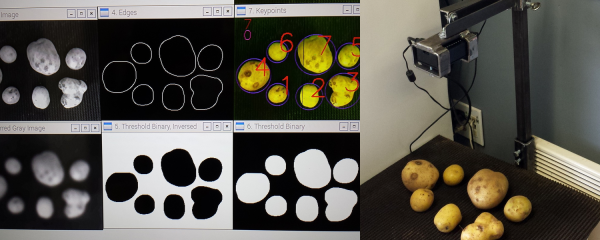

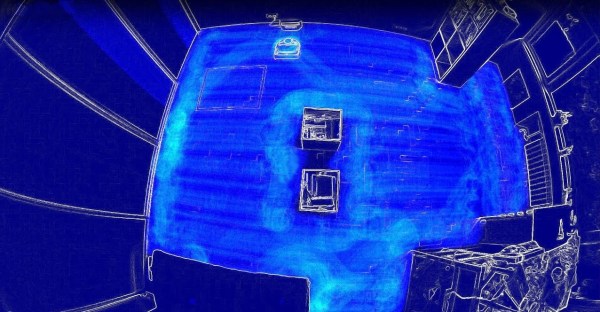

The simplest way to accomplish this optimization, of course, is to reduce what’s running down to the essentials. For example, there’s no sense in running a GUI if your project doesn’t even use a display. Another strategy, however, is to ensure that you’re actually using all of the available processing power that the Raspberry Pi offers. In [sagiz’s] case, that meant using Intel’s open source Threading Building Blocks (TBB) to achieve better parallelism in his OpenCV project.
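To give a rough feel for what "using all of the available processing power" means in practice, here is a minimal sketch of the underlying idea: split an image into bands of rows and hand each band to its own thread. This is not [sagiz’s] code, and it uses neither Threading Building Blocks nor OpenCV. It is a stand-in built on standard C++ threads, and the names `threshold_rows` and `threshold_parallel` are hypothetical, made up for illustration. A library like Threading Building Blocks handles this kind of work splitting and scheduling for you.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical helper: binarize rows [row_begin, row_end) of a grayscale
// image stored row-major in a flat buffer.
void threshold_rows(std::vector<std::uint8_t>& pixels, std::size_t width,
                    std::size_t row_begin, std::size_t row_end,
                    std::uint8_t cutoff) {
    for (std::size_t i = row_begin * width; i < row_end * width; ++i) {
        pixels[i] = pixels[i] >= cutoff ? 255 : 0;
    }
}

// Hypothetical driver: give each worker thread one contiguous band of rows.
// Bands never overlap, so the threads need no locking.
void threshold_parallel(std::vector<std::uint8_t>& pixels, std::size_t width,
                        std::size_t height, std::uint8_t cutoff,
                        std::size_t workers = std::thread::hardware_concurrency()) {
    if (workers == 0) workers = 1;  // hardware_concurrency() may report 0

    // Rows per band, rounded up so every row is covered.
    const std::size_t band = (height + workers - 1) / workers;

    std::vector<std::thread> pool;
    for (std::size_t w = 0; w < workers; ++w) {
        const std::size_t begin = std::min(height, w * band);
        const std::size_t end = std::min(height, begin + band);
        if (begin == end) break;  // no rows left to hand out
        pool.emplace_back(threshold_rows, std::ref(pixels), width,
                          begin, end, cutoff);
    }
    for (auto& t : pool) t.join();  // wait for every band to finish
}
```

Calling `threshold_parallel(pixels, width, height, 128)` with the default worker count starts one thread per core that the system reports. Spawning fresh threads on every call is the simplest thing that works; a real pipeline processing many frames would more likely reuse a pool of workers, which is one of the conveniences a dedicated library provides.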