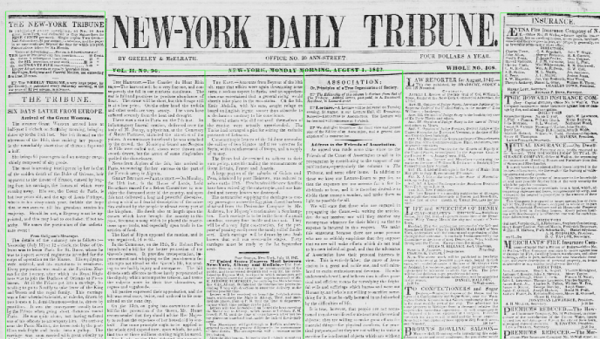

Plenty of people don’t bother to read the current newspaper, let alone editions that were published over 100 years ago. But there’s a wealth of important historical information buried in these dusty old publications, assuming you can find a way to reliably digitize and index it all. You might think the solution is as simple as running images of the paper through optical character recognition (OCR) software, but as [John Scancella] explains, the problem is a bit more complicated than that.

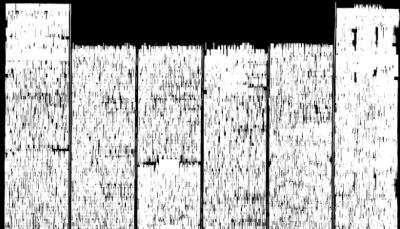

Ultimately, the issue largely comes down to formatting. The OCR software reasonably assumes all the text is in orderly horizontal lines, because in the vast majority of cases, it would be. That’s how you’re reading these words now. But as anyone who’s seen an old time newspaper knows, that’s not how things were necessarily written back then. Pages consisted of multiple narrow columns of stories separated by vertical lines; if the OCR tries to read the page from left to right, the resulting text is a mishmash of several unrelated topics.

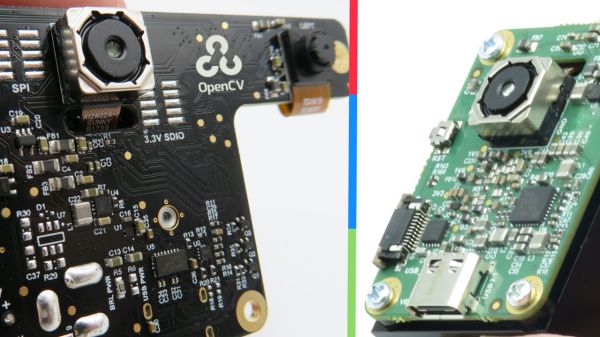

The answer is to break all those articles into their own images, but doing that manually at any sort of scale simply isn’t an option. So [John] has been working on a system that uses OpenCV to identify the columns of text and isolate them. He details the multi-step process down in his write-up, and even provides the Python code should you want to give it a spin. But the short version is that the image is converted to grayscale and the OpenCV dilate function is used to stretch the text in the Y dimension. This produces big blobs of white that can easily be picked out with findContours() and snipped into individual images.

It’s not a perfect solution, and there are still a few pitfalls. For one, the name of the paper needs to be removed from the front page before the stretching operation happens. But it’s clearly a step in the right direction, and the results certainly look very promising. Anything that makes OCR more accurate or easier to implement is a win in our book, so we’re excited to see where [John] takes this concept.