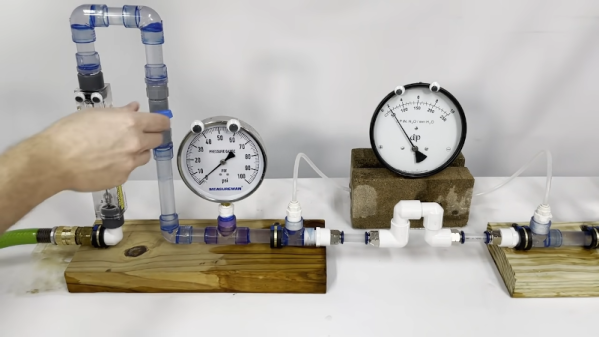

In what may be a first for watering hole attacks, we’ve now seen an attack that targeted watering holes, or at least water utilities. The way this was discovered is a bit bizarre: it was found by Dragos during an investigation into the February incident at Oldsmar, Florida. A Florida contractor that specializes in water treatment runs a WordPress site that had been compromised to host a data-gathering script. The very day that the Oldsmar facility was breached, someone from that location visited the compromised website.

You probably immediately think, as the investigators did, that the visit to the website must be related to the compromise of the Oldsmar treatment plant. The timing is too suspect for it to be a coincidence, right? Here’s the thing: the compromised site was only gathering browser fingerprints, seemingly later used to disguise a botnet. The attack itself was likely carried out over TeamViewer. I will note that the primary sources on this story have named TeamViewer, but call it unconfirmed. Assuming that the breach did indeed occur over that platform, it’s very unlikely that the website visit was a factor, which is what Dragos concluded. On the other hand, it’s easy enough to imagine a scenario where the recorded IP address from the visit led to a port scan and the discovery of a VNC or remote desktop port left open.