I just had my car in for an inspection and an oil change. The garage I take my car to is generally okay, they’re more honest than a stealership, but they don’t cross all their t’s and dot all their lowercase j’s. A few days after I picked up my car, low and behold, I noticed the garage didn’t do a complete oil change. The oil life indicator wasn’t reset, which means every time I turn my car on, I’ll have to press a button to clear an ominous glowing warning on my dash.

For my car, resetting the oil life indicator is a simple fix – I just need to push the button on the dash until the oil life indicator starts to blink, release, then hold it again for ten seconds. I’m at least partially competent when it comes to tech and embedded systems, but even for me, resetting the oil life sensor in my car is a bit obtuse. For the majority of the population, I can easily see this being a reason to take a car back to the shop; the mechanic either didn’t know how to do it, or didn’t know how to use Google.

The two most technically complex things I own are my car and my computer, and there is much more information available on how to fix or modify any part of my computer. If I had a desire to modify my car so I could read the value of the tire pressure monitors, instead of only being notified when one of them is too low, there’s nowhere for me to turn.

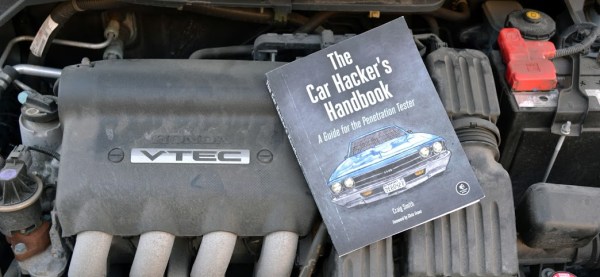

2015 was the year of car hacks, ranging from hacking ECUs to pass California emissions control standards, Google and Tesla’s self-driving cars, to hacking infotainment systems to drive reporters off the road. The lessons learned from these hacks are a hodge-podge of forum threads, conference talks, and articles scattered around the web. While you’ll never find a single volume filled with how to exploit the computers in every make and model of automobile, there is space for a reference guide on how to go about this sort of car hacking.

I was given the opportunity to review The Car Hacker’s Handbook by Craig Smith (259p, No Starch Press). Is it a guide on how to plug a dongle into my car and clear the oil life monitor the hard way? No, but you wouldn’t want that anyway. Instead, it’s a much more informative tome on penetration testing and reverse engineering, using cars as the backdrop, not the focus.

Continue reading “Books You Should Read: The Car Hacker’s Handbook” →

A few things have changed since then. You can buy Lepton modules in single quantity at

A few things have changed since then. You can buy Lepton modules in single quantity at