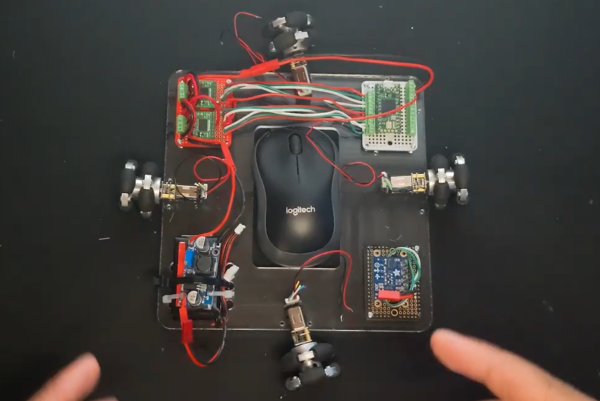

When building a mobile robot, maneuverability often matters more than outright speed. The MasterPi robot demonstrates this well, using fancy wheels to help it slide and skate in any direction needed.

Four DC gear-motors are fitted to a metal chassis, each one driving a mecanum wheel. These are special wheels with rollers fitted around their circumference at an angle that allows the robot to move in all directions and rotate in various ways depending on how each wheel is driven.
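To make the "depending on how each wheel is driven" part concrete, here is a minimal Python sketch of the textbook mecanum mixing equations. It is not taken from the MasterPi code. The function name, the `half_length` and `half_width` chassis dimensions, and the sign convention (positive `vy` strafes left, positive `omega` turns counter-clockwise, rollers in the common "X" layout) are all assumptions for illustration, and a real build may need signs flipped to match its wiring and wheel orientation.

```python
def mecanum_wheel_speeds(vx, vy, omega, half_length=0.1, half_width=0.1):
    """Return the rim speed each wheel needs for a desired body motion.

    vx    -- forward speed (m/s)
    vy    -- sideways speed, positive to the left (m/s)
    omega -- rotation rate, positive counter-clockwise (rad/s)

    half_length and half_width are hypothetical chassis dimensions:
    the distances from the robot's center to the wheel axles (m).
    """
    k = half_length + half_width  # lever arm that turns rotation into rim speed
    return {
        "front_left": vx - vy - k * omega,
        "front_right": vx + vy + k * omega,
        "rear_left": vx + vy - k * omega,
        "rear_right": vx - vy + k * omega,
    }


if __name__ == "__main__":
    # Drive straight ahead, strafe left, then spin on the spot.
    for name, command in [
        ("forward", (0.5, 0.0, 0.0)),
        ("strafe left", (0.0, 0.5, 0.0)),
        ("rotate", (0.0, 0.0, 1.0)),
    ]:
        print(name, mecanum_wheel_speeds(*command))
```

Driving all four wheels the same way moves the robot forward, driving the two diagonal pairs in opposite directions makes it strafe sideways, and driving the left and right sides in opposite directions spins it in place. Any mix of the three gives combined motion.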

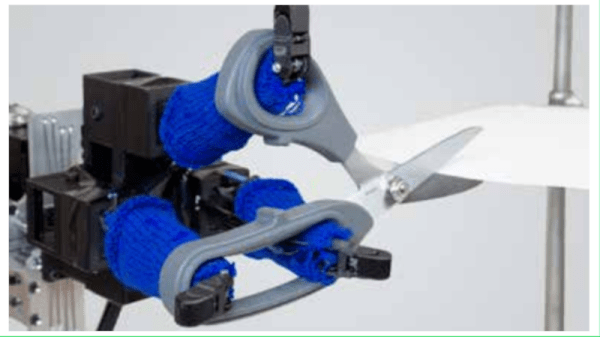

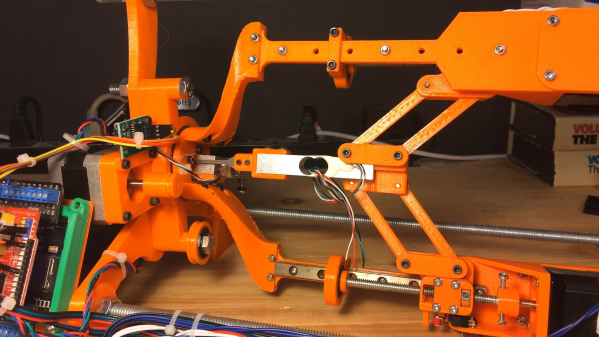

On top of this highly maneuverable chassis is placed a 5-degree-of-freedom robotic arm. The robot also gets an ultrasonic sensor for avoiding objects, as well as a camera for line-following duties. The camera also allows the robot to pick up blocks and identify their color, and it can then sort them into boxes. It’s all powered by a Raspberry Pi, running a bunch of Python code to make everything happen.
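As a rough idea of how the color-identification step could work, the sketch below classifies one averaged RGB reading by its hue using only Python's standard library. This is an illustrative assumption rather than the MasterPi's actual vision code. The function name, the hue bands, and the saturation and brightness thresholds are hypothetical and would need tuning for a real camera and lighting.

```python
import colorsys


def classify_block_color(r, g, b, min_saturation=0.4, min_value=0.2):
    """Label an 8-bit RGB reading as 'red', 'green', 'blue', or 'unknown'.

    The thresholds and hue bands are illustrative guesses, not values
    from the MasterPi project.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)

    # Washed-out or very dark readings carry no reliable hue.
    if s < min_saturation or v < min_value:
        return "unknown"

    hue_deg = h * 360
    if hue_deg < 30 or hue_deg >= 330:  # red wraps around 0 degrees
        return "red"
    if 90 <= hue_deg < 150:
        return "green"
    if 210 <= hue_deg < 270:
        return "blue"
    return "unknown"


if __name__ == "__main__":
    print(classify_block_color(200, 30, 40))
    print(classify_block_color(128, 128, 128))
```

Working in hue rather than raw RGB keeps the label fairly stable as brightness changes, which matters for a robot that drives around under uneven lighting. The returned label could then decide which box the arm drops the block into.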

It’s a neat project that shows off just how capable a robot can be with some smart design choices and modern computing hardware on board. We’ve seen some other smart block sorters before, too.