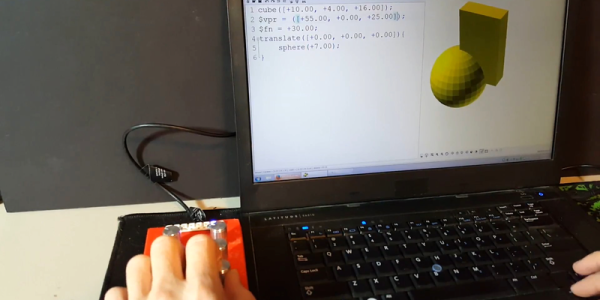

The Unity engine has been around since Apple started using Intel chips, and has made quite a splash in the gaming world. Unity allows developers to create 2D and 3D games, but there are some other interesting applications of this gaming engine as well. For example, [matthewhallberg] used it to build a robot that can map rooms in 3D.

The impetus for this project was a robotics company that used a fleet of robots around its business. The robots navigated using computer vision, but couldn’t map rooms from scratch. The company hired [matthewhallberg] to tackle this problem, and this robot is a preliminary result. Using the Unity engine and an iPhone, the robot can operate in one of three modes: user-controlled driving, object following, and 3D mapping.

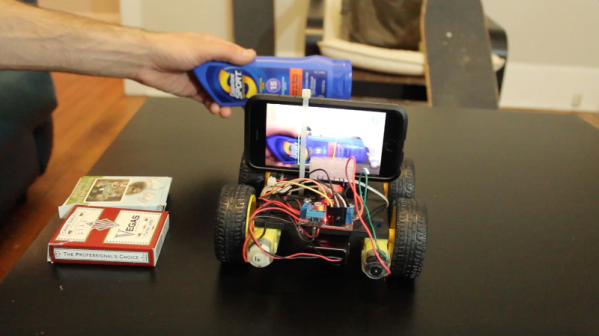

The robot seems fairly easy to construct and carries only an iPhone, a NodeMCU, some motors, and a battery. Most of the computational work is done remotely, with the robot simply receiving its movement commands from another computer. There’s a lot going on here software-wise, with plenty of toolkits and software packages to install and get talking to one another, but the video below does a good job of showing what you’ll need and how it all works together. If that’s all too much, there are other robots with a form of computer vision that can get you started in the world of robot vision and mapping.
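To make the "robot simply receives movement commands" idea concrete, here is a minimal sketch of how a remote computer might pack a drive command and how the receiving side might unpack it into left and right motor values. This is not [matthewhallberg]'s actual protocol: the `L:<n>,R:<n>` message format, the function names, and the -255 to 255 motor range are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class MotorCommand:
    """Hypothetical motor values, each in the range -255..255."""
    left: int
    right: int


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def encode_command(forward: float, turn: float) -> bytes:
    """Mix forward/turn inputs (each -1..1) into a differential-drive packet.

    The text format 'L:<left>,R:<right>' is an assumed, made-up wire format.
    """
    forward = clamp(forward)
    turn = clamp(turn)
    left = clamp(forward + turn)    # turning right speeds up the left wheel
    right = clamp(forward - turn)   # and slows down the right wheel
    return f"L:{round(left * 255)},R:{round(right * 255)}\n".encode("ascii")


def decode_command(packet: bytes) -> MotorCommand:
    """Parse a packet produced by encode_command back into motor values."""
    left_part, right_part = packet.decode("ascii").strip().split(",")
    return MotorCommand(
        left=int(left_part.split(":")[1]),
        right=int(right_part.split(":")[1]),
    )


if __name__ == "__main__":
    packet = encode_command(forward=0.5, turn=0.5)
    print(packet, decode_command(packet))
```

In a setup like this, the remote machine would send each packet over the network (for example with a UDP socket), and the microcontroller would only need to parse the two numbers and set its motor outputs, which keeps the on-robot code small.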