Controlling most desktop 3D printers is as easy as sending them G-code commands over a serial connection. As you might expect, it takes a relatively quick machine to fire off the commands fast enough for a good-quality print. But what if you weren’t so picky? If speed isn’t a concern, what’s the practical limit on the type of computer you could use?

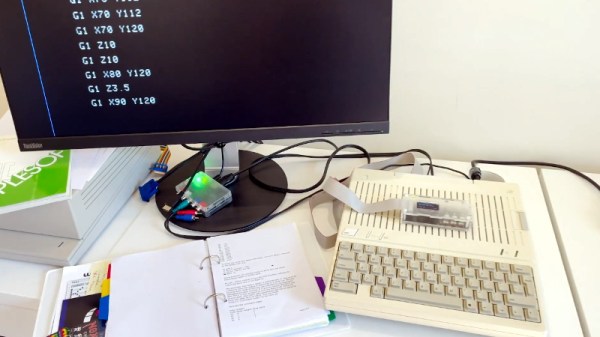

In an effort to answer that question, [Max Piantoni] set out to control his Ender 3 printer with an authentic Apple IIc. Things were made a bit easier by the fact that he really only wanted to use the printer as a 2D plotter, so he could ignore the third dimension in his code. All he needed to do was come up with a BASIC program that let him create some simple geometric artwork on the Apple and convert it into commands that could be sent out over the computer’s serial port.
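To give a feel for how little code the 2D case needs, here is a minimal sketch of turning a simple shape into G-code. It is written in Python for readability rather than the BASIC that [Max] used, and it is not his program: the function name `polygon_gcode`, the feed rate, and the coordinates are all illustrative assumptions.

```python
import math

def polygon_gcode(cx, cy, radius, sides, feed=1500):
    """Return G-code lines tracing a regular polygon in the XY plane.

    Hypothetical helper: Z is never touched, matching the plotter-style
    use described above. The feed rate is an arbitrary example value.
    """
    points = [
        (cx + radius * math.cos(2 * math.pi * i / sides),
         cy + radius * math.sin(2 * math.pi * i / sides))
        for i in range(sides + 1)  # repeat the first vertex to close the shape
    ]
    lines = ["G90"]  # absolute positioning
    x0, y0 = points[0]
    lines.append(f"G0 X{x0:.2f} Y{y0:.2f}")  # rapid move to the first vertex
    for x, y in points[1:]:
        lines.append(f"G1 X{x:.2f} Y{y:.2f} F{feed}")  # linear move along an edge
    return lines

if __name__ == "__main__":
    print("\n".join(polygon_gcode(50, 50, 10, 4)))
```

Each returned string is one command, so sending the drawing to a printer is just a matter of writing the lines out one at a time over the serial port.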

Unfortunately, [Max] ran into something of a language barrier. While the Apple had no problem generating G-code the Ender’s controller would understand, the two devices couldn’t agree on a data rate. The 3D printer likes to zip along at 115,200 baud, while the Apple was plodding ahead at 300. Clearly, something would have to stand in as an interpreter.
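For a rough sense of the gap, the short calculation below estimates how long one G-code line spends on the wire at each rate. It assumes common 8N1 framing (10 bits on the wire per byte) and a hypothetical 24-byte command; neither figure comes from [Max]'s setup.

```python
def seconds_per_line(baud, line_bytes, bits_per_byte=10):
    """Time to transmit one line, assuming 8N1 framing (start + 8 data + stop)."""
    return line_bytes * bits_per_byte / baud

LINE_BYTES = 24  # assumed length of a typical move command, newline included

slow = seconds_per_line(300, LINE_BYTES)      # Apple IIc side
fast = seconds_per_line(115_200, LINE_BYTES)  # Ender 3 side

if __name__ == "__main__":
    print(f"300 baud:     {slow:.3f} s per line")
    print(f"115,200 baud: {fast * 1000:.3f} ms per line")
    print(f"ratio:        {slow / fast:.0f}x")
```

Under those assumptions the Apple needs most of a second per command, while the printer's link moves the same line in a couple of milliseconds.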

The solution [Max] came up with certainly wouldn’t be our first choice, but there’s something to be said for working with what you know. He quickly whipped up a program in Unity on his MacBook that would accept incoming commands from the Apple II at 300 baud, build up a healthy buffer, and then send them off to the Ender 3. As you can see in the video after the break, this Mac-in-the-middle approach got these unlikely friends talking at last.
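The buffering idea can be sketched without any serial hardware at all. The Python class below is an illustration only, not [Max]'s Unity code: `LineRelay`, `min_buffered`, and the newline-terminated input are assumptions, and the actual port I/O is left to whatever `send` callable you pass in.

```python
from collections import deque

class LineRelay:
    """Collect bytes from a slow link and forward whole lines to a fast one."""

    def __init__(self, send, min_buffered=3):
        self.send = send                  # callable that takes one complete line (bytes)
        self.min_buffered = min_buffered  # lines to accumulate before forwarding
        self.partial = bytearray()        # bytes of a line that is still arriving
        self.ready = deque()              # complete lines waiting to go out

    def feed(self, chunk):
        """Accept whatever bytes have arrived; forward once enough lines queue up."""
        self.partial.extend(chunk)
        while b"\n" in self.partial:
            line, _, rest = bytes(self.partial).partition(b"\n")
            self.partial = bytearray(rest)
            if line.strip():              # skip blank lines
                self.ready.append(line.strip() + b"\n")
        if len(self.ready) >= self.min_buffered:
            self.flush()

    def flush(self):
        """Send every complete line; a half-received line stays buffered."""
        while self.ready:
            self.send(self.ready.popleft())

if __name__ == "__main__":
    sent = []
    relay = LineRelay(sent.append, min_buffered=2)
    relay.feed(b"G90\nG0 X1")   # second command is cut off mid-line
    relay.feed(b"0 Y10\n")      # remainder arrives; both lines go out together
    print(sent)
```

Forwarding only complete lines means the printer never sees half a command, however slowly the bytes trickle in. A real bridge would likely also need to wait for the printer's acknowledgement of each line before sending the next, which this sketch does not attempt.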

We’re reminded of a project from a few years back that aimed to build a fully functional 3D printer with 1980s technology. It was to be controlled by a Commodore PET, which also struggled to communicate quickly enough with the printer’s electronics. Bringing a modern laptop into the mix is probably cheating a bit, but at least it shows the concept is sound.
