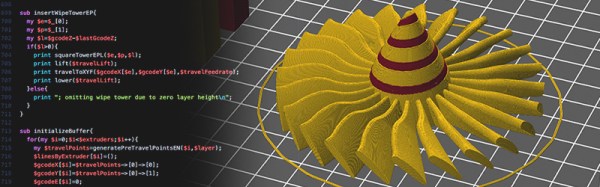

Most of our beloved tools, such as Slic3r, Cura or KISSlicer, offer scripting interfaces that help a great deal if your existing 3D printing toolchain has yet to learn how to produce decent results with a five headed thermoplastic spitting hydra. Using scripts, it’s possible to tweak the little bits it takes to get great results, inserting wipe or prime towers and purge moves on the fly, and if your setup requires it, also control additional servos and solenoids for the flamethrowers.

This article gives you a short introduction in how to post-process G-code using Perl and Slic3r. Perl Ninja skills are not required. Slic3r plays well with pretty much any scripting language that produces executables, so if you’re reluctant to use Perl, you’ll probably be able to replicate most of the steps in your favorite language.

Continue reading “3D Printering: G-Code Post Processing With Perl”