As the cost of high-resolution image sensors falls, and the availability of small and cheap single board computers skyrockets, we are starting to see more astrophotography projects than ever before. When you can put a $5 Raspberry Pi Zero and a decent webcam outside in a box to take autonomous pictures of the sky all night, why not give it a shot? But in doing so, many hackers are recognizing a fact well-known to traditional telescope jockeys: seeing a few stars is easy, seeing a lot of stars is another story entirely.

The problem is that stars are fairly dim, and light pollution makes matters worse unless you’re out in a rural area. You can’t just brighten up the images either, as that amplifies the sensor noise right along with the starlight. A programmer always in search of a challenge, [Benedikt Bitterli] decided to take a shot at using software to improve astrophotography images. He documented the entire process, failures and all, on his blog for anyone else who might be curious about what it really takes to create the incredible images of the night sky we see in textbooks.

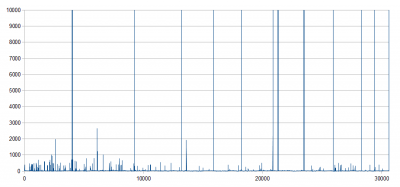

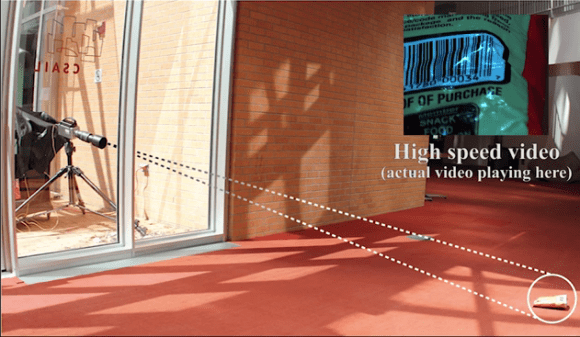

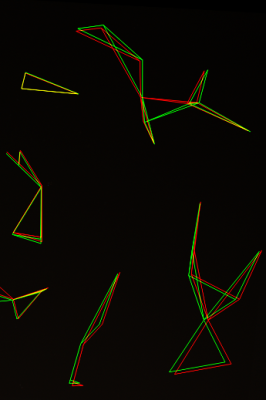

In principle it’s simple: just take a lot of pictures of the sky, stack them on top of each other, and identify which points of light are stars and which ones are noise artifacts. But of course the execution is considerably more difficult. For one thing, unless the camera was on a mount that was automatically tracking the sky, the stars will have moved slightly from one image to the next. To line the frames up, [Benedikt] used a navigational trick that humanity has relied on for millennia: mapping constellations. By comparing groupings of stars in each image, his software is able to accurately overlay the frames on one another.
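To get a feel for why stacking helps, here is a minimal Python sketch of the align-and-stack idea on synthetic data. It is not [Benedikt]’s code. It assumes the sky only slides sideways by whole pixels, that the star positions in each frame have already been found, and that a simple vote over star-to-star offsets is good enough to recover the slide. Every name and number in it (`STARS`, `estimate_shift`, `stack`, the noise level) is made up for illustration.

```python
import random
from collections import Counter
from statistics import mean, pstdev

WIDTH, HEIGHT = 40, 40
STARS = [(5, 5), (20, 9), (12, 25), (28, 30)]  # made-up (x, y) star positions


def make_frame(drift, rng, star_level=5.0, noise_sigma=5.0):
    """Synthetic frame: stars moved `drift` pixels right, plus Gaussian noise."""
    frame = [[rng.gauss(0.0, noise_sigma) for _ in range(WIDTH)]
             for _ in range(HEIGHT)]
    for x, y in STARS:
        frame[y][x + drift] += star_level
    return frame


def estimate_shift(ref_stars, stars):
    """Vote for the (dx, dy) translation that moves `stars` onto `ref_stars`."""
    votes = Counter((rx - sx, ry - sy)
                    for rx, ry in ref_stars for sx, sy in stars)
    return votes.most_common(1)[0][0]


def stack(frames, shifts):
    """Average the frames after applying each frame's (dx, dy) correction."""
    total = [[0.0] * WIDTH for _ in range(HEIGHT)]
    hits = [[0] * WIDTH for _ in range(HEIGHT)]
    for frame, (dx, dy) in zip(frames, shifts):
        for y in range(HEIGHT):
            for x in range(WIDTH):
                tx, ty = x + dx, y + dy
                if 0 <= tx < WIDTH and 0 <= ty < HEIGHT:
                    total[ty][tx] += frame[y][x]
                    hits[ty][tx] += 1
    return [[t / h if h else 0.0 for t, h in zip(t_row, h_row)]
            for t_row, h_row in zip(total, hits)]


def background_noise(image):
    """Standard deviation of a star-free patch (rows 34 and up, columns 0-31)."""
    return pstdev([v for row in image[34:] for v in row[:32]])


def demo(n_frames=64, seed=1):
    """Build noisy drifting frames, align them, and return (first frame, stack)."""
    rng = random.Random(seed)
    drifts = [i % 8 for i in range(n_frames)]  # synthetic drift of 0..7 pixels
    frames = [make_frame(d, rng) for d in drifts]
    # Pretend a star detector already located the stars in every frame.
    shifts = [estimate_shift(STARS, [(x + d, y) for x, y in STARS])
              for d in drifts]
    return frames[0], stack(frames, shifts)


if __name__ == "__main__":
    single, stacked = demo()
    print("single-frame noise:", round(background_noise(single), 2))
    print("stacked noise:     ", round(background_noise(stacked), 2))
    print("mean star value:   ", round(mean([stacked[y][x] for x, y in STARS]), 2))
```

In a single frame the stars here are no brighter than the noise around them. Averaging N aligned frames leaves the starlight where it is while independent random noise shrinks by roughly the square root of N, so 64 frames should cut the noise to about an eighth. Real frames also rotate and warp, which is where matching whole constellations rather than a single offset comes in.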

But that’s only one part of the equation. In his post, [Benedikt] goes over the incredible amount of math that goes into identifying individual stars in the sea of noise you get when a digital image sensor looks into the black. You certainly don’t need to understand all the math to appreciate the final results, but it’s a fascinating read for those with an interest in computer vision concepts.
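As a rough illustration of what “finding a star in the noise” means, the Python sketch below flags any pixel that is both brighter than all eight of its neighbours and well above a robust estimate of the background noise. This is a deliberately simple stand-in, not the method from [Benedikt]’s post: the function name `detect_stars`, the threshold factor `k`, and the one-pixel stars in the demo are all assumptions made for the example.

```python
import random
from statistics import median


def detect_stars(image, k=5.0):
    """Return (x, y) for pixels that beat the noise floor and all 8 neighbours."""
    pixels = [v for row in image for v in row]
    background = median(pixels)
    # Median absolute deviation: a noise estimate that a handful of bright
    # stars barely affects. 1.4826 converts it to a Gaussian standard deviation.
    sigma = 1.4826 * median([abs(v - background) for v in pixels])
    threshold = background + k * sigma
    stars = []
    # Skip the outermost rows and columns so every pixel has 8 neighbours.
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            value = image[y][x]
            if value <= threshold:
                continue
            neighbours = [image[y + dy][x + dx]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dx, dy) != (0, 0)]
            if value > max(neighbours):
                stars.append((x, y))
    return stars


if __name__ == "__main__":
    rng = random.Random(7)
    # Synthetic sky: background level 10 with unit Gaussian noise.
    sky = [[rng.gauss(10.0, 1.0) for _ in range(40)] for _ in range(40)]
    for x, y in [(5, 5), (20, 9), (12, 25), (28, 30)]:
        sky[y][x] += 15.0  # made-up single-pixel stars
    print(detect_stars(sky))
```

The median-based noise estimate is used instead of a plain standard deviation so that the stars themselves do not inflate the threshold. Real stars smear across several pixels, so a practical detector would also need to fit or centroid each blob rather than trust a single bright pixel.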

This kind of software is precisely what you want to pair with your 3D printed star tracker or, even better, a Raspberry Pi sky monitoring station.

[Thanks to Helio Machado for the tip.]