Eye tracking is a useful feature in social virtual reality (VR) spaces because a realistic avatar gaze enhances presence and communication. Most headsets lack this feature, but EyeTrackVR has a completely open source solution ready for anyone willing to put it together.

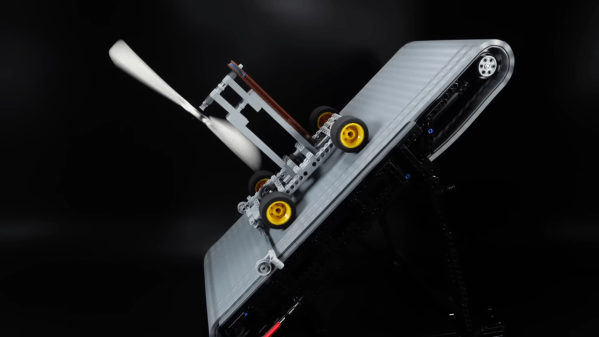

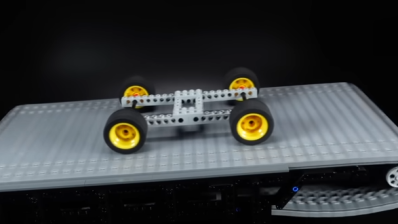

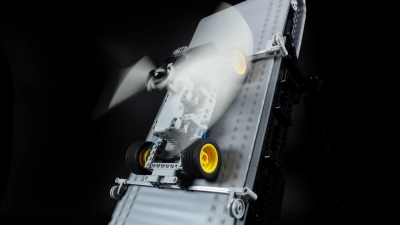

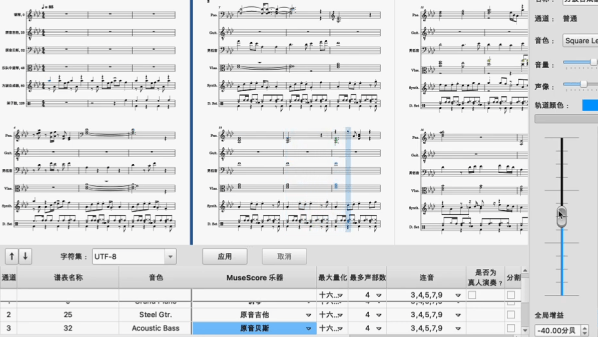

EyeTrackVR is a combination of hardware, software, and 3D printable mounts for attaching a pair of microcontroller boards, cameras, and IR LEDs to just about any existing VR headset out there. An ESP32-based board and tiny camera module watch each eyeball, and under IR illumination the pupil presents as an easily identified round black area. Software takes care of turning the camera’s view of the pupil into a gaze direction value that can be plugged into other software.
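To make the idea concrete, here is a minimal sketch of how a dark, round pupil could be located in a grayscale IR frame and turned into a rough gaze value. This is not EyeTrackVR's actual algorithm. It is a plain threshold-and-centroid approach using only NumPy, and the function names, the threshold of 50, and the synthetic test frame are all assumptions made for illustration.

```python
import numpy as np


def pupil_center(frame, dark_threshold=50):
    """Return the (x, y) centroid of pixels darker than dark_threshold.

    Hypothetical helper: assumes the pupil is the only dark region in view.
    Returns None when no pixel is dark enough.
    """
    mask = frame < dark_threshold
    if not mask.any():
        return None
    ys, xs = np.nonzero(mask)
    return float(xs.mean()), float(ys.mean())


def normalized_gaze(center, width, height):
    """Map a pixel position to roughly [-1, 1] per axis, with 0 at image center."""
    cx, cy = center
    return (2.0 * cx / (width - 1) - 1.0, 2.0 * cy / (height - 1) - 1.0)


def synthetic_eye(width=240, height=240, pupil=(150, 100), radius=20):
    """Build a fake IR frame: bright background with a dark disc for the pupil."""
    yy, xx = np.mgrid[0:height, 0:width]
    frame = np.full((height, width), 180, dtype=np.uint8)
    frame[(xx - pupil[0]) ** 2 + (yy - pupil[1]) ** 2 <= radius ** 2] = 10
    return frame


if __name__ == "__main__":
    frame = synthetic_eye()
    center = pupil_center(frame)
    print("pupil center (px):", center)
    print("normalized gaze:", normalized_gaze(center, 240, 240))
```

A real tracker has to cope with eyelashes, reflections from the IR LEDs, and blinks, and needs per-user calibration to map pupil position to an actual gaze direction, so treat this only as an illustration of the basic principle.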

The project is still under active development, but in its current state it is perfectly suitable for creating a functional system that can integrate into a variety of existing headsets with printed mounting brackets. Interested? Check out the intro, and if it sounds up your alley, dive into the build guide, which spells out everything you need to know. The video below has a demo of EyeTrackVR working in VRChat, along with an overview of software support.

We’ve seen headsets built to custom specs that integrate eye tracking, but even repackaging an existing headset is a perfect opportunity to include this feature.
