ChatGPT has been put to all manner of silly uses since it first became available online. [Engineering After Hours] decided to see if its coding skills were any chop, and put it to work programming a circular saw. Pun intended.

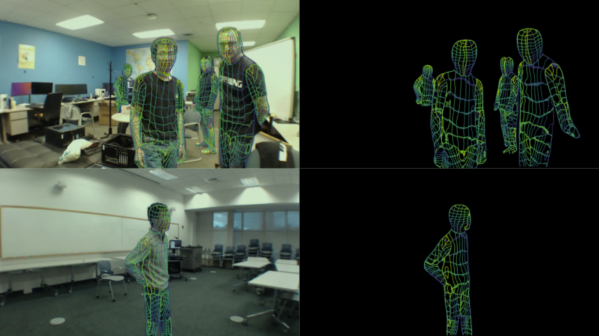

The aim was to build a line-following robot armed with a circular saw to handle lawn edging tasks. The circular saw itself consists of a motor with a blade on it, and precisely no safety features. It’s mounted on the front of a small RC car, with a rack and pinion to control its position. [Engineering After Hours] has some sage advice in this area: don’t try this at home.

ChatGPT was not only able to give advice on what parts to use, it was also able to tell [Engineering After Hours] how to hook everything up to an Arduino, and even write the code. The AI language model even recommended a PID loop to control the position of the circular saw. Initial tests were messy, but some refinement got things impressively functional.
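For readers who haven't met a PID loop before, here is a rough idea of what one looks like when it drives something like the saw's rack-and-pinion slide to a target position. This is only a minimal sketch in Python, not the code from the video: the gains, the output limits, the `simulate` helper, and the idealised motor model (commanded speed turns directly into movement) are all assumptions made up for illustration.

```python
class PID:
    """Textbook PID controller with a clamped output (illustrative only)."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first sample

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = (self.kp * error
                  + self.ki * self.integral
                  + self.kd * derivative)
        # Clamp to what the (hypothetical) motor driver can deliver.
        return max(self.out_min, min(self.out_max, output))


def simulate(target_mm=30.0, seconds=10.0, dt=0.01):
    """Drive an idealised slide toward target_mm and return where it ends up.

    Assumption: the controller output is a speed in mm/s and the slide
    follows it perfectly, which a real motor and rack will not do.
    """
    pid = PID(kp=4.0, ki=0.5, kd=0.1, out_min=-100.0, out_max=100.0)
    position_mm = 0.0
    for _ in range(int(seconds / dt)):
        speed = pid.update(target_mm, position_mm, dt)
        position_mm += speed * dt
    return position_mm


if __name__ == "__main__":
    print(f"final position: {simulate():.2f} mm")
```

On real hardware the same three terms would be computed from a position sensor reading on every pass through the Arduino's main loop, and the gains would need tuning against the actual mechanism, which is presumably where the "some refinement" came in.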

As a line-following robot, the performance is pretty crummy. However, as a robot programmed by an AI, it does pretty okay. Obviously, it’s hard to say how much help the AI needed, and how many corrections [Engineering After Hours] had to make to the code to get everything working. But the fact that this kind of project is even possible shows us just how far AI has really come.
