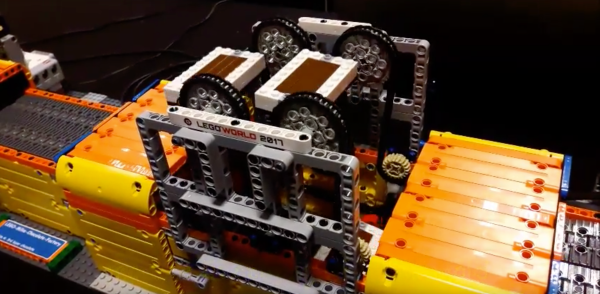

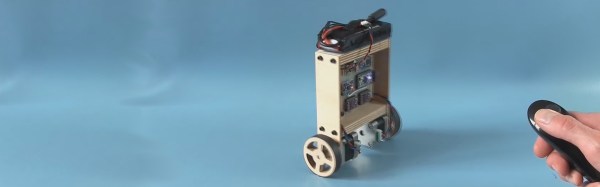

Not only has [Joop Brokking] built an easy-to-make balancing robot, but he’s also produced an excellent set of plans and software for anyone else who wants to make one. Self-balancers are a milestone in your robot-building life. They stand on two wheels, using a PID control loop to drive the two motors based on data from some type of Inertial Measurement Unit (IMU). It sounds simple, but when starting from scratch there are a lot of choices to make and a lot of traps to fall into. [Joop’s] video explains the basic principles and covers the reasons he’s done things the way he has: all the advice you’d be looking for when building one of your own.
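For readers who haven’t met a PID loop before, here is a minimal Python sketch of the idea. It is not [Joop’s] code: the `PID` class, the gain values, and the output limit are all hypothetical, chosen only to illustrate how a tilt error could be turned into a motor command.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook PID controller (illustrative only, not the project's firmware)."""
    kp: float          # proportional gain (assumed value, tune per robot)
    ki: float          # integral gain
    kd: float          # derivative gain
    out_limit: float   # clamp for the output and the integral term
    integral: float = 0.0
    prev_error: float = 0.0

    def _clamp(self, value: float) -> float:
        return max(-self.out_limit, min(self.out_limit, value))

    def update(self, error: float, dt: float) -> float:
        """Return a motor command for the current tilt error.

        error: setpoint angle minus measured angle (from the IMU)
        dt:    time since the previous update, in seconds
        """
        # Accumulate the integral term and clamp it to avoid windup.
        self.integral = self._clamp(self.integral + self.ki * error * dt)
        # Rate of change of the error since the last update.
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.integral + self.kd * derivative
        return self._clamp(output)
```

In a robot, `update()` would be called at a fixed rate with the angle error from the IMU, and the result would set the speed and direction of both motors. The clamp on the integral term is one common way to keep the controller from winding up while the robot is held or lying on its side.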

He chose steppers over cheaper DC motors because they deliver precision and avoid problems when the battery voltage drops. His software includes a program for getting a calibration value for the IMU, and he also shows how to set the drive current for the stepper controllers. He does all this clearly, at a pace that’s neither too fast nor too slow. His video is definitely worth checking out below.
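The post doesn’t say how the calibration program works, so the following is only a sketch of one common approach: average a batch of raw sensor readings while the robot is held still, and use the mean as the offset to subtract later. The function name `calibration_offset` and the `read_raw` callback are hypothetical stand-ins for whatever actually reads the IMU.

```python
from statistics import fmean
from typing import Callable


def calibration_offset(read_raw: Callable[[], float], samples: int = 500) -> float:
    """Average `samples` raw readings taken while the robot is stationary.

    read_raw: hypothetical callback returning one raw IMU reading per call
    samples:  number of readings to average (assumed default)
    """
    if samples <= 0:
        raise ValueError("samples must be positive")
    readings = [read_raw() for _ in range(samples)]
    return fmean(readings)
```

Subtracting the returned offset from each later reading removes the sensor’s resting bias, which otherwise shows up as a robot that slowly drifts or leans.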


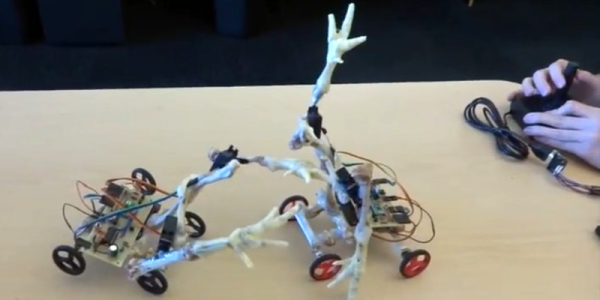

[Jeremy Cook]’s latest take on the Strandbeest,
