When you’re running a Kickstarter for a robotic arm, you had better be ready to prove how repeatable and accurate it is. [Andrew] has done just that by laser engraving 400 wooden coasters with Evezor, his SCARA arm that runs on a Raspberry Pi computer with stepper control handled by a Smoothieboard.

Evezor is quite an amazing project: a general-purpose arm which can do everything from routing circuit boards to welding, given the right end-effectors. If this sounds familiar, that’s because [Andrew] gave a talk about Evezor at Hackaday’s Unconference in Chicago.

One of the rewards for the Evezor Kickstarter is a simple wooden coaster. [Andrew] cut each of the wooden squares out using a table saw. He then made stacks and set to programming Evezor. The 400 coasters were each picked up and dropped into a fixture. Evezor then used a small diode laser to engrave its own logo along with an individual number. The engraved coasters were then stacked in a neat output pile.

After the programming and setup were complete, [Andrew] hit go and left the building. He did keep an eye on Evezor though. A baby monitor captured the action in low resolution. Two DSLR cameras also snapped photos of each coaster being engraved. The resulting time-lapse video can be found after the break.

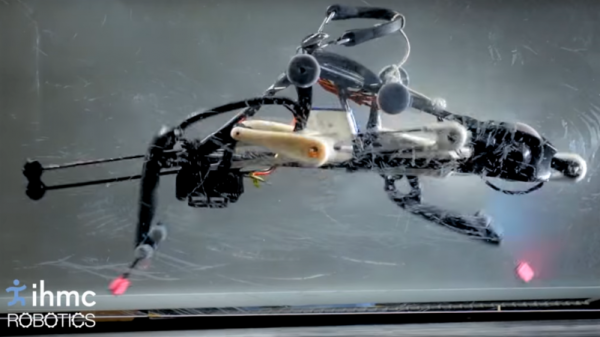

A single motor runs the entire drive chain using linkages that will look familiar to anyone who has taken an elliptical trainer apart, and there’s not a computer or sensor on board. The PER keeps its balance by what the team calls “reactive resilience”: torsion springs between the drive sprocket and cranks automatically modulate the power to both the landing leg and the swing leg to confer stability during a run. The video below shows this well if you single-frame it starting at 2:03; note the variable angles of the crank arms as the robot works through its stride.
