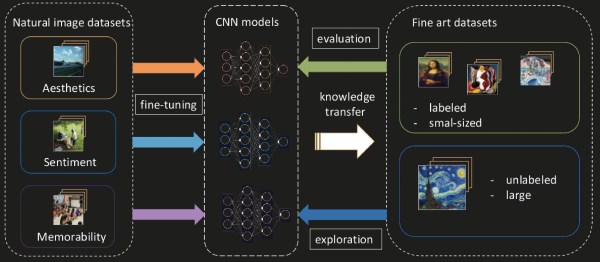

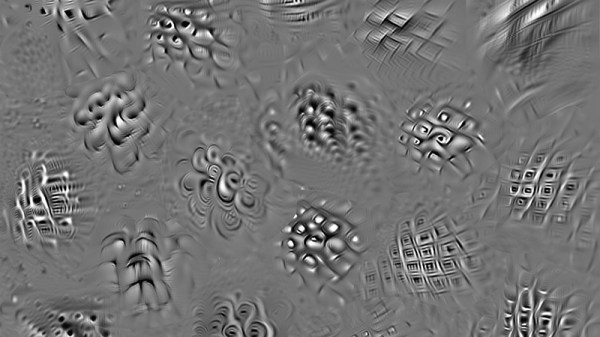

Even though machine learning AKA ‘deep learning’ / ‘artificial intelligence’ has been around for several decades now, it’s only recently that computing power has become fast enough to do anything useful with the science.

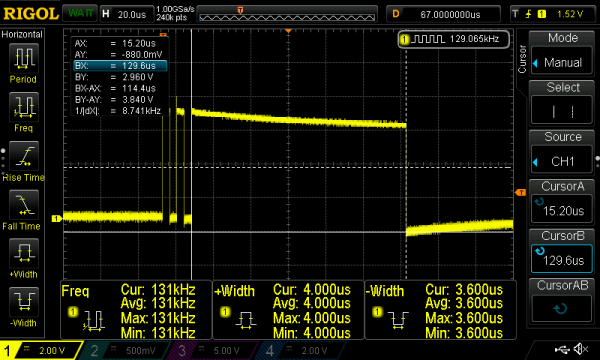

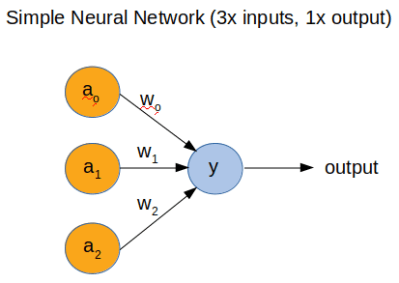

However, to fully understand how a neural network (NN) works, [Dimitris Tassopoulos] has stripped the concept down to pretty much the simplest example possible – a 3 input, 1 output network – and run inference on a number of MCUs, including the humble Arduino Uno. Miraculously, the Uno processed the network in an impressively fast prediction time of 114.4 μsec!

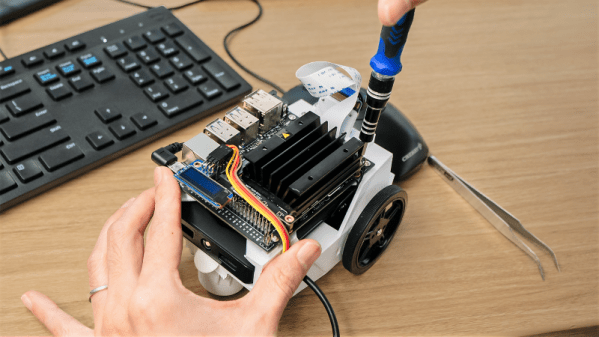

Whilst we did not test the code on an MCU, we just happened to have Jupyter Notebook installed so ran the same code on a Raspberry Pi directly from [Dimitris’s] bitbucket repo.

He explains in the project pages that now that the hype about AI has died down a bit that it’s the right time for engineers to get into the nitty-gritty of the theory and start using some of the ‘tools’ such as Keras, which have now matured into something fairly useful.

In part 2 of the project, we get to see the guts of a more complicated NN with 3-inputs, a hidden layer with 32 nodes and 1-output, which runs on an Uno at a much slower speed of 5600 μsec.

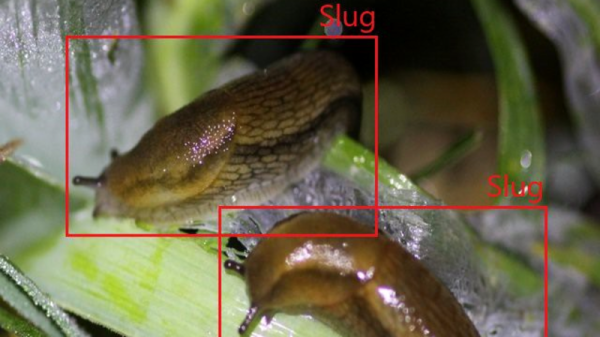

This exploration of ML in the embedded world is NOT ‘high level’ research stuff that tends to be inaccessible and hard to understand. We have covered Machine Learning On Tiny Platforms Like Raspberry Pi And Arduino before, but not with such an easy and thoroughly practical example.