What is this dystopia coming to when one of the world’s largest tech companies can’t find a way to sufficiently monetize a nearly endless stream of personal data coming from its army of high-tech privacy-invading robots? To the surprise of almost nobody, Amazon is rolling out a paid tier to their Alexa service in an attempt to backfill the $25 billion hole the smart devices helped dig over the last few years. The business model was supposed to be simple: insinuate an always-on listening device into customers’ lives to make it as easy as possible for them to instantly gratify their need for the widgets and whatsits that Amazon is uniquely poised to deliver, collecting as much metadata along the way as possible; multiple revenue streams — what could go wrong? Apparently a lot, because the only thing people didn’t do with Alexa was order stuff. Now Amazon is reportedly seeking an additional $10 a month for the improved AI version of Alexa, which will be on top of the ever-expanding Amazon Prime membership fee, currently at an eye-watering $139 per year. Whether customers bite or not remains to be seen, but we think there might be a glut of Echo devices on the second-hand market in the near future. We hate to say we told you so, but — ah, who are we kidding? We love to say we told you so.

boston dynamics (41 Articles)

Hackaday Links: April 21, 2024

Do humanoid robots dream of electric retirement? Who knows, but maybe we can ask Boston Dynamics’ Atlas HD, which was officially retired this week. The humanoid robot, notable for its warehouse Parkour and sweet dance moves, never went into production, at least not as far as we know. Atlas always seemed like it was intended to be an R&D platform, to see what was possible for a humanoid robot, and in that way it had a heck of a career. But it’s probably a good thing that fleets of Atlas robots aren’t wandering around shop floors or serving drinks, especially given the number of hydraulic blowouts the robot suffered. That also seems to be one of the lessons Boston Dynamics learned, since Atlas’ younger, nimbler replacement is said to be all-electric. From the thumbnail, the new kid already seems pretty scarred and battered, so here’s hoping we get to see some all-electric robot fails soon.

Hackaday Links: April 16, 2023

The dystopian future you’ve been expecting is here now, at least if you live in New York City, which unveiled a trio of technology solutions to the city’s crime woes this week. Surprisingly, the least terrifying one is “DigiDog,” which seems to be more or less an off-the-shelf Spot robot from Boston Dynamics. DigiDog’s job is to de-escalate hostage negotiation situations, and unarmed though it may be, we suspect that the mission will fail spectacularly if either the hostage or hostage-taker has seen Black Mirror. Also likely to terrify the public is the totally-not-a-Dalek-looking K5 Autonomous Security Robot, which is apparently already wandering around Times Square using AI and other buzzwords to snitch on people. And finally, there’s StarChase, which is based on an AR-15 lower receiver and shoots GPS trackers that stick to cars so they can be tracked remotely. We’re not sure about that last one either; besides the fact that it looks like a grenade launcher, the GPS tracker isn’t exactly covert. Plus it’s only attached with adhesive, so it seems easy enough to pop it off the target vehicle and throw it in a sewer, or even attach it to another car.

Need A Snack From Across Town? Send Spot!

[Dave Niewinski] clearly knows a thing or two about robots, judging from his YouTube channel. Usually the projects involve robot arms mounted on some sort of wheeled platform, but this time it’s to the tune of some pretty famous yellow robot legs, in the shape of Spot from Boston Dynamics. The premise is simple: tell the robot what snacks you want, entirely by voice command, and off he goes to fetch. But we’re not talking about navigating to the fridge in the same room. We’re talking about trotting out the front door, down the street, and crossing roads to visit a favorite restaurant. Spot will order the snacks and bring them back, fully autonomously.

There are multiple things going on here, all of which are pretty big computational tasks. Firstly, there is no cloud-based voice control, à la Google voice or Alexa. The robot works on the premise of full autonomy, which means no internet connectivity for any aspect. All voice recognition, voice-to-text, and speech synthesis are performed locally using the NVIDIA Riva GPU-based AI speech SDK, running on the NVIDIA Jetson AGX Orin carried on Spot’s back. A front-facing webcam supplies the audio feed for this. The voice recognition application listens for the wake phrase, then turns the snack order into text, for later replay when it gets to the destination. Navigation is taken care of with a Microstrain RTK GNSS module, which has all the needed robustness, such as dual antennas and inertial fallback for those regions with a spotty signal. Satellite navigation on its own is no use out in the real world, which is where Spot’s depth sensor cameras come in. These enable local obstacle avoidance, as per the usual Spot behavior we’ve all seen before. But what about crossing the road without getting tens of thousands of dollars of someone else’s hardware crushed by a passing truck? Spot’s onboard streaming cameras are fed into NVIDIA’s DashCamNet AI model, which enables real-time recognition of moving obstacles such as cars, humans, and anything else that might be wandering around and get in the way. All in all, a cool project showing the future potential of AI in robotics for important tasks, like fetching me a beer when I most need it, even if it comes from the local corner shop.
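To make the flow of that pipeline a little more concrete, here is a minimal sketch of the mission logic as a tiny state machine in Python. This is our own illustration, not [Dave]’s code: the class and method names, the wake phrase, and the idea that a navigation layer calls `arrived()` are all assumptions, and the speech recognition, speech synthesis, GNSS, and obstacle avoidance pieces are deliberately left out.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Stage(Enum):
    LISTENING = auto()     # waiting for the wake phrase
    TAKING_ORDER = auto()  # next utterance is the snack order
    OUTBOUND = auto()      # walking to the restaurant
    ORDERING = auto()      # replaying the stored order aloud
    RETURNING = auto()     # walking home with the snacks
    DONE = auto()


@dataclass
class SnackRun:
    """Hypothetical mission controller; every name here is illustrative."""
    wake_phrase: str = "hey spot"  # assumed wake phrase, not from the project
    stage: Stage = Stage.LISTENING
    order_text: str = ""
    spoken: list = field(default_factory=list)  # stand-in for speech synthesis

    def hear(self, transcript: str) -> None:
        """Feed in one utterance that was transcribed locally."""
        text = transcript.strip()
        if self.stage is Stage.LISTENING:
            if self.wake_phrase in text.lower():
                self.stage = Stage.TAKING_ORDER
        elif self.stage is Stage.TAKING_ORDER and text:
            # Keep the order as text so it can be replayed at the destination.
            self.order_text = text
            self.stage = Stage.OUTBOUND

    def arrived(self) -> None:
        """Called by the navigation layer (not shown) on reaching a waypoint."""
        if self.stage is Stage.OUTBOUND:
            self.stage = Stage.ORDERING
            self.spoken.append(self.order_text)  # "say" the order
            self.stage = Stage.RETURNING
        elif self.stage is Stage.RETURNING:
            self.stage = Stage.DONE
```

Calling `hear("Hey Spot")`, then `hear("Two tacos and a lemonade")`, then `arrived()` twice walks the sketch from `LISTENING` through to `DONE`, with the order text stored on the way out and replayed at the restaurant.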

We love robots around here. Robots can mow your lawn, navigate inside your house with a little help from invisible QR Codes, even help out with growing your food. The long-promised robot-assisted future may now be looking more like the present.

Robot Dogs Hack Chat

Join us on Wednesday, September 29 at noon Pacific for the Robot Dogs Hack Chat with Afreez Gan!

Thanks to the efforts of a couple of large companies, many devoted hobbyists, and some dystopian science fiction, robot dogs have firmly entered the zeitgeist of our “living in the future” world. The quadrupedal platform, with its agility and low center of gravity, is perfect for navigating in the real world, where the terrain is rarely even and unexpected obstacles are to be expected.

The robot dog has been successful enough that there are commercially available — if prohibitively priced — dogs on the market, doing everything from inspecting factory processes and off-shore oil platforms to dancing for their dinner. All the publicity around robot dogs has fueled a crush of DIY and open-source versions, so that hobbyists can take advantage of what the platform has to offer. And as a result, the design of these dogs has converged somewhat, with elements that provide a common design language for these electromechanical pets.

Afreez Gan has been exploring the robot dog space for a while now, and his MiniPupper is generating some interest. He’ll stop by the Hack Chat to talk about MiniPupper specifically and the quadruped platform in general. We’ll talk about what it takes to build your own robot dog, what you can do with one once you’ve built it, and how these bots can play a part in STEM education. Along the way, we’ll touch on ROS, lidar, machine vision with OpenCV, and pretty much anything involved in the care and feeding of your newest electronic pal.

Our Hack Chats are live community events in the Hackaday.io Hack Chat group messaging. This week we’ll be sitting down on Wednesday, September 29 at 12:00 PM Pacific time. If time zones have you tied up, we have a handy time zone converter.

Boston Dynamics Atlas Dynamic Duo Tackles Obstacle Course

Historically, the capabilities of real world humanoid robots have trailed far behind their TV and movie counterparts. But roboticists kept pushing state of the art forward, and Boston Dynamics just shared a progress report: their research platform Atlas can now complete a two-robot parkour routine.

Watching the minute-long routine on YouTube (embedded after the break) shows movements more demanding than their dance to the song “Do You Love Me?” And according to Boston Dynamics, this new capability is actually even more impressive than it looks. Unlike earlier demonstrations, this routine used fewer of the preprogrammed motions that made up earlier dance performances. Atlas now makes more use of its onboard sensors to perceive its environment, and more of its onboard computing power to decide how best to move through the world on a case-by-case basis. It also needed to string individual actions together in a continuous sequence, something it had trouble doing earlier.

Such advances are hard to discern from robot demonstration videos, which are frequently edited and curated to highlight successes and skip all the (many, many) fails along the way. Certainly Boston Dynamics has done so before, but this time the video is accompanied by almost six minutes’ worth of behind-the-scenes footage (also after the break). We see the robots stumbling as they learned, and the humans working to put them back on their feet.

Humanoid robot evolution has not always gone smoothly (sometimes entertainingly so), but Atlas is leaps and bounds ahead of predecessors like Honda’s ASIMO. Such research finds its way to less humanoid-looking robots like Stretch. And who knows, maybe one day real robots will be like their TV and movie counterparts that have, for so long, been played by humans inside costumes.

Hackaday Links: May 9, 2021

Well, that de-escalated quickly. It seems that no sooner was a paper announced that purported to find photographic evidence of fungi growing on Mars than the planetary science and exobiology community came down on it like a ton of bricks. As well they should: extraordinary claims require extraordinary evidence, and while the photos that were taken by Curiosity and Opportunity sure seem to show something that looks a lot like a terrestrial puffball fungus, there are a lot of other, more mundane ways to explain these formations. Add to that the fact that the lead author of the Martian mushroom paper is a known crackpot who once sued NASA for running over fungi instead of investigating them; the putative shrooms later turned out to be rocks, of course. Luckily, we have a geobiology lab wandering around on Mars right now, so if there is or was life on Mars, we’ll probably find out about it. You know, with evidence.

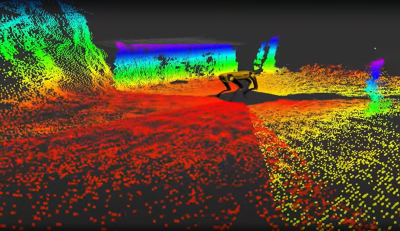

If you’re a fan of dystopic visions of a future where bloodthirsty robots relentlessly hunt down the last few surviving humans, the news that the New York Police Department decided to stop using their “DigiDog” robot will be a bit of a downer. The move stems from outrage generated by politicians and citizens alike, who dreamt up all sorts of reasons why the NYPD shouldn’t be using this tool. And use it they apparently did — the original Boston Dynamics yellow showing through the many scuffs and dings in the NYPD blue paint job means this little critter has seen some stuff since it hit the streets in late 2020. And to think — that robot dog was only a few weeks away from filing its retirement papers.

Attention, Commodore fans based in Europe: the Commodore Users Europe event is coming soon. June 12, to be precise. As has become traditional, the event is virtual, but it’s free and they’re looking for presenters.

In a bid to continue the grand Big Tech tradition of knowing what’s best for everyone, Microsoft just announced that Calibri would no longer be the default font in Office products. And here’s the fun part: we all get to decide what the new default font will be, at least ostensibly. The font wonks at Microsoft have created five new fonts, and you can vote for your favorite on social media. The font designers all wax eloquent on their candidates, and there are somewhat stylized examples of each new font, but what’s lacking is a simple way to judge what each font would actually look like on a page of English text. Whatever happened to “The quick brown fox” or even a little bit of “Lorem ipsum”?

And finally, why are German ambulances — and apparently, German medics — covered in QR codes? Apparently, it’s a way to fight back against digital rubberneckers. The video below is in German, but the gist is clear: people love to stop and take pictures of accident scenes, and smartphones have made this worse, to the point that emergency personnel have trouble getting through to give aid. And that’s not to mention the invasion of privacy; very few accident victims are really at their best at that moment, and taking pictures of them is beyond rude. Oh, and it’s illegal, punishable by up to two years in jail. The idea with the QR codes is to pop up a website with a warning to the rubbernecker. Our German is a bit rusty, but we’re pretty sure that translates to, “Hey idiot, get back in your frigging car!” Feel free to correct us on that.

[Editor’s note: “Stop. Rubbernecking kills”.]