Over the last few years, the state of the art in handheld routers has been tucked away in the back of our minds. It was shown at SIGGRAPH in 2012, and we caught up with it at Maker Faire last year. Now, it’s getting ready for production.

Originally called Taktia, the Shaper router looks a lot like a normal handheld router. This router is smart, though: it can look at a workpiece marked with a tape designed for computer vision and slightly reposition the cutter in response to how the user is moving it. A simple description doesn’t do this tool justice, so check out the video the Shaper team recently uploaded.

With the user moving the Shaper router over a workpiece and motors moving the cutter head, this tool can make precision cuts – wooden gears and outlines of the United States – quickly, easily, and accurately. Cutting any shape is as easy as loading a file into Shaper, calling that file up on a touch screen display, and turning on the cutter. Move the router around the table, and the Shaper takes care of the rest.
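To make the idea of "user does the coarse move, motors do the fine correction" a little more concrete, here is a minimal Python sketch. It is not Shaper's actual algorithm, which hasn't been published in this post. It assumes the design is a polyline, that the vision system reports the router body's position in the same units, and that the cutter can shift only a small distance (`max_offset`) relative to the body. All names here are hypothetical.

```python
import math

def nearest_point_on_segment(p, a, b):
    """Return the point on segment a-b closest to p (all 2D tuples)."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return a  # degenerate segment: both ends are the same point
    # Project p onto the segment's line, then clamp to the segment ends.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * dx, ay + t * dy)

def cutter_offset(router_pos, path, max_offset):
    """Offset to apply to the cutter so it lands on the path.

    Returns (dx, dy), or None when the path is farther away than the
    (assumed) correction range, in which case the cutter should retract.
    """
    best, best_dist = None, math.inf
    for a, b in zip(path, path[1:]):
        q = nearest_point_on_segment(router_pos, a, b)
        d = math.hypot(q[0] - router_pos[0], q[1] - router_pos[1])
        if d < best_dist:
            best, best_dist = q, d
    if best_dist > max_offset:
        return None
    return (best[0] - router_pos[0], best[1] - router_pos[1])

# Hypothetical example: a straight 10-inch cut, with the user's hand
# drifting a quarter inch off the line.
path = [(0.0, 0.0), (10.0, 0.0)]
print(cutter_offset((5.0, 0.25), path, max_offset=0.5))
print(cutter_offset((5.0, 2.0), path, max_offset=0.5))
```

In this sketch the first call returns a small correction that pulls the cutter back onto the line, while the second returns `None` because the hand has wandered beyond what the motors could make up for. A real tool would also need to track progress along the path and handle cutter diameter, which this deliberately ignores.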

Accuracy, at least in earlier versions, is said to be on the order of a hundredth of an inch. That’s good enough for wood, like this very interesting bit of joinery that would be pretty hard with traditional tools. Video below.

Thanks [martin] for the tip.

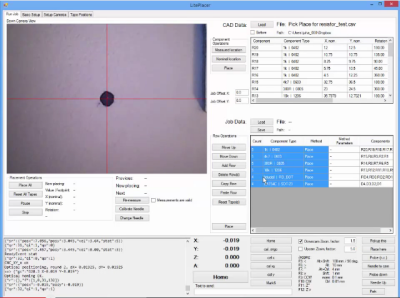
