If you have ever used an eraser to correct a piece of pencil work, have you ever considered how much of an innovation it must have seemed when the first erasers were invented? It might seem odd to consider a centuries-old piece of stationery here on Hackaday, but there is a parallel in our own time. Digital image manipulation is such a part of everyday life these days as to have become run-of-the-mill for anyone with a mobile phone and the right app, but it’s easy to forget how recent an innovation it really is. Only a few decades ago your only chance of manipulating a photograph was to spend a lot of time in a darkroom with a photographic developer of exceptional skill. Now, children who have never known a world without it can manipulate their selfies with a few deft touches of the screen.

[Steve Greenfield] pointed us at a detailed description of the business he ran in the 1990s, offering digital and composite photography using an upgraded Amiga 3000. It caught our attention as a snapshot of the state of digital image manipulation when these things still lay at the bleeding edge of what was possible.

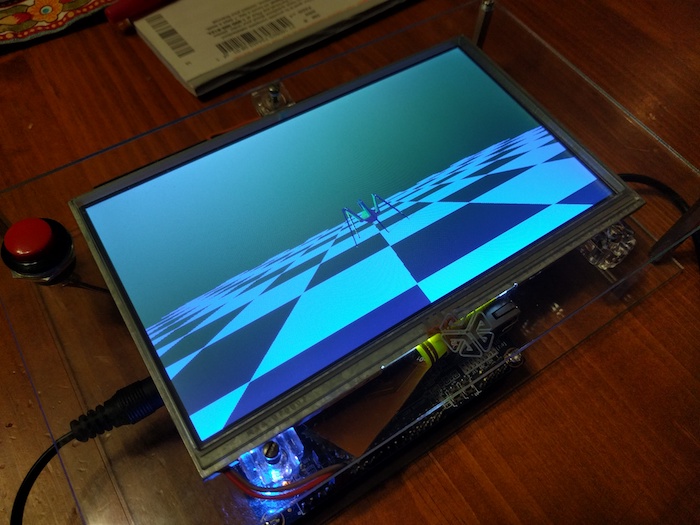

His 3000 was heavily customised from the stock machine. It featured a Phase 5 68060 accelerator board, a CyberVision 64 graphics card, a then-unimaginably huge 128MB of RAM, and an array of gigabyte-plus Fast SCSI drives. To that he had attached a Polaroid SCSI digital camera with a then-impressive 800×600 pixel resolution. The Polaroid had no Amiga drivers, so he ran the ShapeShifter Mac emulator to capture images under the MacOS of the day. The fastest 68000-series Mac had only a 68040, and the early PowerPC Macs could run 68k software only through emulation, so he writes that his 68060-equipped Amiga ran Mac software faster than any real Mac of the time.

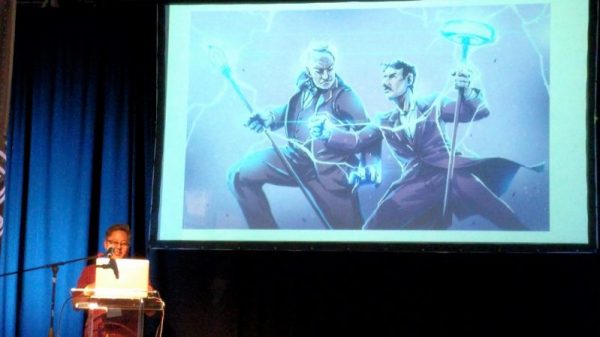

His stock-in-trade was attending sci-fi conventions and giving costumed attendees pictures with custom backgrounds, something of a doddle on such a souped-up Amiga. He writes of the shock some Microsoft employees felt on discovering that a 60MHz computer could run rings round their several-hundred-MHz Pentiums running Windows 95.

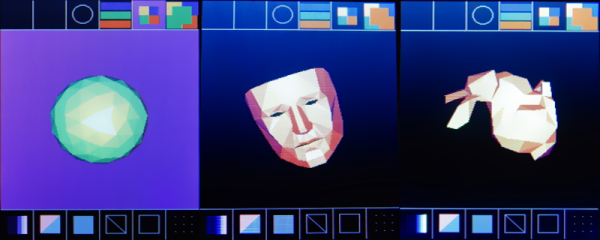

His business is long gone, but its website remains as a time capsule of the state of digital imagery two decades ago. The sample images are very much of their time, but for those used to today’s slicker presentation it’s worth remembering that all of this was very new indeed.

In a world dominated by a monoculture of Intel-based desktop computers, it’s interesting to look back to a time when there was a genuine array of choices, some of which could really compete. As a consumer at the start of the 1990s you could buy a PC or a Mac, but Commodore’s Amiga, Atari’s ST, and (if you were British) Acorn’s ARM-based Archimedes all offered alternatives with similar performance and their own special abilities. Each of those machines still has its diehard enthusiasts who will regale you with lengthy what-if tales of greatness denied, but maybe such casualties are best viewed as an essential part of the evolutionary process. Perhaps the famous Amiga Easter egg says it best: “We made Amiga … ”

Here at Hackaday we’ve covered quite a few Amiga topics over the years, including another look at the Amiga graphics world. It’s a scene that still inspires hardware hackers, as this FPGA-based Amiga GPU shows.

Amiga 3000 image: By [Joe Smith] [Public domain], via Wikimedia Commons.