Fish are popular animals to keep as pets, and for good reason. They’re relatively low maintenance, relaxing to watch, and have high aesthetic appeal. But for all their upsides, they aren’t quite as companionable as a dog or a cat. Although some fish can manage limited walking or flying, these aren’t generally kept as pets and would still need considerable help navigating the terrestrial world. To close that gap, [Everything is Hacked] built a fish tank that allows his fish to move around on its own. We presume he’s heard the old joke about two fish in a tank. One says, “Do you know how to drive this thing?”

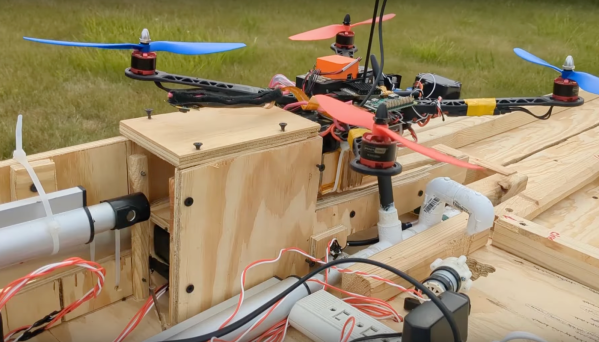

The first prototype of this “fish tank” was built on a tracked vehicle with differential steering, with the fish tank sitting on top. But once a basic, driveable machine was running, the realities of fish ownership set in. Betta fish have about the smallest tank needs of any pet fish, but even a betta needs much more space than would easily fit on a robotics platform. So [Everything is Hacked] set up a complete ecosystem as his new pet’s main home, making the passenger vehicle a secondary tank.

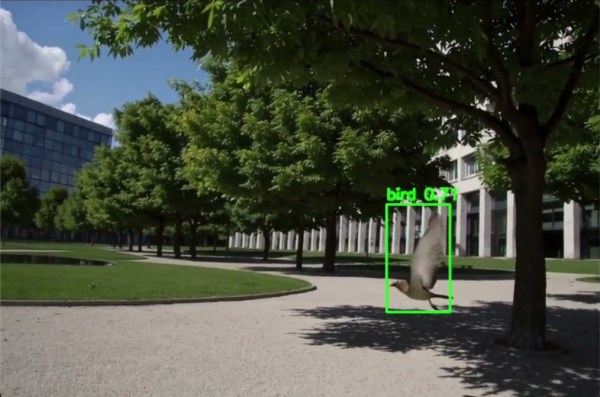

The new fish is named [Carrot], after the carrots that [Everything is Hacked] used to test the computer vision system that tracks the fish’s movements and uses them to steer the mobile fish tank. Some tuning was needed to ensure that the tank didn’t spin around uncontrollably whenever this feisty fish swam in circles, but eventually [Everything is Hacked] got it working in an “arena” where [Carrot] could drive towards some favorite items he might like to interact with. Mostly, though, [Carrot] drove his tank to investigate the other fish in the area.
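
The write-up doesn’t spell out how the tracked fish position becomes motor commands, so the following is only a rough sketch of one common approach, not [Everything is Hacked]’s actual code. It assumes the vision system already reports the fish’s position relative to the center of the tank, normalized to the range -1 to 1. The names `fish_to_tracks`, `Smoother`, `dead_zone`, and `alpha` are all made up for illustration. A dead zone around the center and a bit of smoothing are two plausible ways to keep a circling fish from spinning the whole vehicle.

```python
import math


def fish_to_tracks(fx, fy, dead_zone=0.25, max_speed=1.0):
    """Map a fish position to (left, right) track speeds.

    fx, fy: hypothetical normalized fish position relative to the tank
    center, each in [-1, 1], with +y meaning "forward" and +x "right".
    """
    r = math.hypot(fx, fy)
    if r < dead_zone:
        # Fish is loitering near the middle: stay parked.
        return 0.0, 0.0

    # Ramp speed up from zero at the edge of the dead zone.
    scale = min(1.0, (r - dead_zone) / (1.0 - dead_zone))
    throttle = scale * fy / r  # forward/backward component
    turn = scale * fx / r      # left/right component

    # Differential steering: turning adds to one track, subtracts from the other.
    left = throttle + turn
    right = throttle - turn

    # Keep both tracks within [-max_speed, max_speed].
    m = max(1.0, abs(left), abs(right))
    return max_speed * left / m, max_speed * right / m


class Smoother:
    """Exponential moving average so quick darts don't jerk the tank."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # assumed tuning value; lower means smoother
        self.left = 0.0
        self.right = 0.0

    def update(self, left, right):
        self.left += self.alpha * (left - self.left)
        self.right += self.alpha * (right - self.right)
        return self.left, self.right
```

With these assumptions, a fish at the front wall drives both tracks forward, a fish at the right wall spins the tank in place, and a fish circling tightly near the center produces no motion at all.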

The ultimate goal was for [Everything is Hacked] to take his fish on a walk, so he set about training [Carrot] to respond to visual cues and swim towards them. In theory, this would have let the fish tank follow him around, but a test at a local grocery store did not go as smoothly as hoped. Still, it’s an interesting project that pushes the boundaries of pet ownership, much like other fish-driving projects we’ve seen.