Currently, if you want to use the Autopilot or Self-Driving modes on a Tesla vehicle, you need to keep your hands on the wheel at all times. That’s because, ultimately, the human driver is still the responsible party. Tesla is adamant that the functions which allow the car to steer itself within a lane, avoid obstacles, and intelligently adjust its speed to match traffic all constitute a driver assistance system. If somebody figures out how to fool the wheel sensor and take a nap while their shiny new electric car is hurtling down the freeway, Tesla wants no part of it.

So it makes sense that the company’s official line regarding the driver-facing camera in the Model 3 and Model Y is that it’s there to record what the driver was doing in the seconds leading up to an impact. As explained in the release notes of the June 2020 firmware update, Tesla owners can opt in to providing this data:

> Help Tesla continue to develop safer vehicles by sharing camera data from your vehicle. This update will allow you to enable the built-in cabin camera above the rearview mirror. If enabled, Tesla will automatically capture images and a short video clip just prior to a collision or safety event to help engineers develop safety features and enhancements in the future.

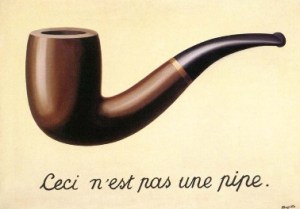

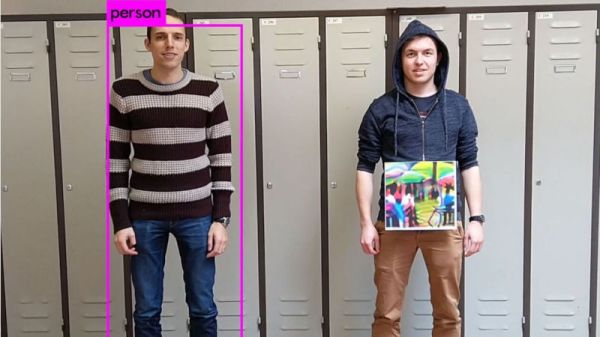

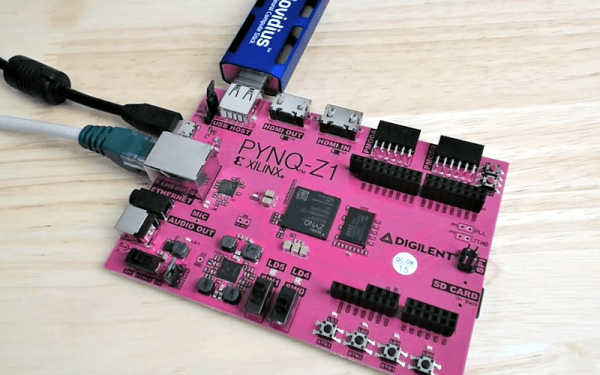

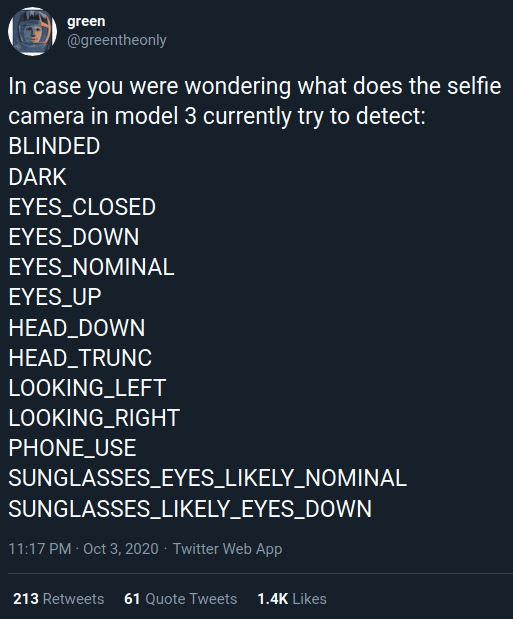

But [green], who’s spent the last several years poking and prodding at Tesla’s firmware and self-driving capabilities, recently found some compelling hints that there’s more to the story. In the vehicle’s image recognition system, which is usually tasked with picking up other vehicles or pedestrians, they found several interesting classes that don’t seem necessary given the official explanation of what the cabin camera is doing.


If all Tesla wanted was a few seconds of video uploaded to their offices each time one of their vehicles got into an accident, they wouldn’t need to run image recognition configured to detect distracted drivers against it in real time. While you could argue that this data would be useful to them, there would still be no reason to generate it in the vehicle when the footage could be analyzed later as part of the crash investigation. It seems far more likely that Tesla is laying the groundwork for a system that could give the vehicle another way of determining whether the driver is paying attention.
