Ever wished for some robotic enhancements for your next nerf war? Well, it’s time to dig through the parts bin and build yourself a nerf gun with an aimbot built right in, courtesy of [3Dprintedlife]. (Video, embedded below.)

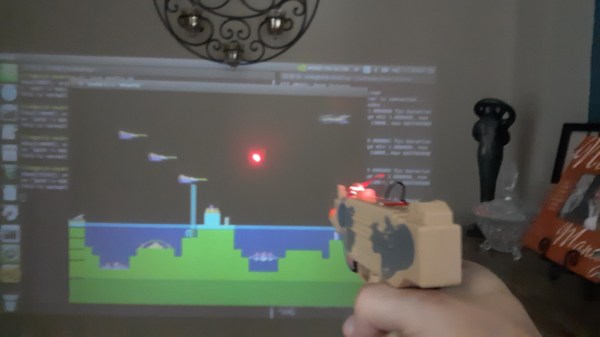

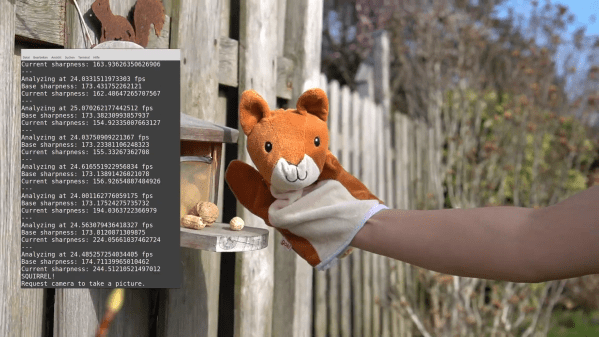

The gun started with a design borrowed from [Captain Slug]’s awesome catalog of open source nerf guns. [3Dprintedlife] modified the design to include a two-axis gimbal between the lower and the upper, driven by a pair of stepper motors via an Arduino. For auto-aim, a camera module attached to a Raspberry Pi running OpenCV was added. When the user half-presses the trigger, OpenCV starts tracking whatever is at the center of the frame and actively adjusts the gimbal to keep the gun aimed at the object until the user fires. The trigger mechanism consists of a pair of microswitches that activate a servo to release the sear. The system is also capable of tracking a moving target or any face that comes into view.
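To give a feel for the aiming loop, here is a minimal sketch of how a tracked bounding box could be turned into stepper corrections for the two gimbal axes. This is not [3Dprintedlife]’s actual code: the `GimbalConfig` values (resolution, field of view, steps per degree, gain, deadband) and the function names are all assumptions made up for illustration, and the tracker itself is left out so that only the pixel-to-steps math is shown.

```python
from dataclasses import dataclass


@dataclass
class GimbalConfig:
    # Every value here is a hypothetical placeholder, not taken from the build.
    frame_width: int = 640       # camera frame width in pixels
    frame_height: int = 480      # camera frame height in pixels
    hfov_deg: float = 64.0       # assumed horizontal field of view
    vfov_deg: float = 48.0       # assumed vertical field of view
    steps_per_deg: float = 10.0  # assumed stepper steps per degree of gimbal travel
    gain: float = 0.5            # proportional gain; below 1 to limit overshoot
    deadband_px: float = 5.0     # ignore errors smaller than this to avoid jitter


def box_center(box):
    """Return the (x, y) center of an (x, y, w, h) bounding box."""
    x, y, w, h = box
    return x + w / 2.0, y + h / 2.0


def aim_correction(box, cfg):
    """Convert a tracked box into (pan_steps, tilt_steps) for the gimbal.

    Positive pan means the target is right of center; positive tilt means
    it is above center (image y grows downward, so the sign is flipped).
    """
    cx, cy = box_center(box)
    err_x = cx - cfg.frame_width / 2.0
    err_y = cy - cfg.frame_height / 2.0

    # Inside the deadband, treat the target as already centered.
    if abs(err_x) < cfg.deadband_px:
        err_x = 0.0
    if abs(err_y) < cfg.deadband_px:
        err_y = 0.0

    # Small-angle approximation: degrees per pixel is FOV / resolution.
    deg_per_px_x = cfg.hfov_deg / cfg.frame_width
    deg_per_px_y = cfg.vfov_deg / cfg.frame_height

    pan_steps = round(cfg.gain * err_x * deg_per_px_x * cfg.steps_per_deg)
    tilt_steps = round(-cfg.gain * err_y * deg_per_px_y * cfg.steps_per_deg)
    return pan_steps, tilt_steps
```

In a real build, the box would come from an OpenCV tracker on each frame, and the resulting step counts would be sent from the Raspberry Pi to the Arduino driving the steppers. The gain and deadband would need tuning on the actual hardware.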

We think this is a really fun project, with a lot of things that can be learned in the process. Mount it on a remote control tank and you’d be able to wage some intense battles in your backyard. All the files are available on GitHub.

You are never too old for a good old nerf battle. Whether you want to be a sniper, a machine gunner, or a heavy weapons specialist, there’s a weapon to build for every role.