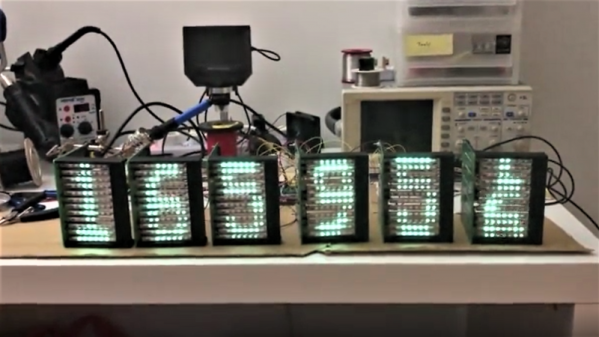

If you need to add a small display to your project, you’re not going to do much better than a tiny OLED display. These displays are black and white, usually 128×64 pixels or a similar size, and they’re driven over I2C. The libraries are readily available, and they’re cheap. For displaying a few numbers and some text, an I2C OLED is hard to beat. There’s a problem, though: OLED pixels wear out over time. Call it burn-out or burn-in, depending on how you define it. What’s the lifetime of these OLEDs? That’s exactly what [Electronics In Focus] is testing (YouTube, in Russian, so turn on the closed captions).

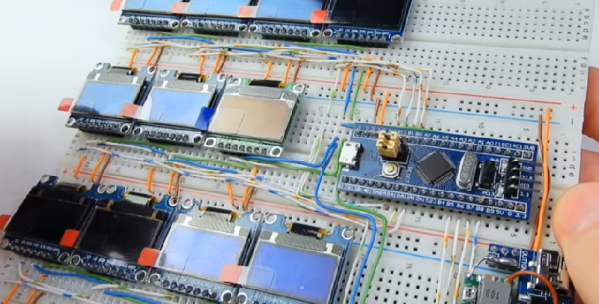

The experimental setup is eleven 128×64 OLED displays with SSD1306 controllers, all driven over I2C by an STM32. Everything’s on a breadboard. The test pattern divides each screen into sixteen blocks, which are lit one after another at one-second intervals. This subjects different parts of the screen to gradually increasing levels of wear, and at least on the surface, it’s a pretty good way to see whether OLED pixels burn out.
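The video doesn’t spell out the firmware, so what follows is only a hardware-agnostic sketch in Python of one way such a pattern could be generated. It assumes, hypothetically, that the 128×64 screen is split into a 4×4 grid of 32×16-pixel blocks and that the blocks light cumulatively: at step s, blocks 0 through s are on. Block k is then lit during 16 − k of every 16 one-second steps, so each block accumulates a different amount of on-time. The framebuffer follows the SSD1306’s page layout of eight pages of 128 bytes, where each byte holds a vertical strip of eight pixels.

```python
# Hypothetical sketch of a cumulative block-lighting burn-in pattern.
# Assumptions (not from the original test): 4x4 grid of 32x16 blocks,
# blocks 0..step lit at each one-second step, 16-step cycle.

WIDTH, HEIGHT = 128, 64
PAGES = HEIGHT // 8          # SSD1306 GDDRAM: 8 pages of 8 pixel rows each
COLS, ROWS = 4, 4            # assumed block grid
BLOCK_W, BLOCK_H = WIDTH // COLS, HEIGHT // ROWS  # 32 x 16 pixels
NUM_BLOCKS = COLS * ROWS     # 16 blocks


def lit_blocks(step: int) -> range:
    """Blocks that are on during a given one-second step of the cycle."""
    s = step % NUM_BLOCKS
    return range(s + 1)


def duty_cycle(block: int) -> float:
    """Fraction of each cycle that a block spends lit."""
    return (NUM_BLOCKS - block) / NUM_BLOCKS


def set_pixel(fb: bytearray, x: int, y: int) -> None:
    """Turn on one pixel using SSD1306 page addressing (bit 0 = top row of a page)."""
    fb[(y // 8) * WIDTH + x] |= 1 << (y % 8)


def render(step: int) -> bytearray:
    """Build the 1024-byte framebuffer for one step of the pattern."""
    fb = bytearray(WIDTH * PAGES)
    for block in lit_blocks(step):
        bx = (block % COLS) * BLOCK_W
        by = (block // COLS) * BLOCK_H
        for y in range(by, by + BLOCK_H):
            for x in range(bx, bx + BLOCK_W):
                set_pixel(fb, x, y)
    return fb


if __name__ == "__main__":
    for b in (0, 7, 15):
        print(f"block {b:2d}: lit {duty_cycle(b):.0%} of the time")
```

On real hardware, each `render()` result would be pushed to the display once per second; the cumulative ordering is just one plausible reading of “lit one after another.”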

The test was stopped after 378 days with no failed displays. There is a caveat, though: after a year of endurance testing, a few pixels had burnt out, and the damage correlated with how often those pixels were lit. The fix is to occasionally ‘jiggle’ the displayed text around the screen, turn the display off when no one is looking at it, or write a screen saver for OLEDs. That last one has already been done, and here are the flying toasters to prove it. It’s an interesting experiment, and although that weird project you’re working on probably won’t keep an OLED lit continuously for a year, it’s still something to think about. Video below.
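The ‘jiggle’ and display-off fixes are both simple. Here is a minimal Python sketch of each, where every name, offset, period, and timeout is a hypothetical choice rather than anything from the video. The drawing origin steps through nine positions within one pixel of its nominal spot every few minutes, so static text doesn’t sit on exactly the same pixels forever. After a period without input, the panel is put to sleep with the SSD1306’s Display OFF command (0xAE) and woken with Display ON (0xAF). Over I2C, commands are preceded by a control byte of 0x00.

```python
# Hypothetical sketch: pixel shifting plus idle blanking for an SSD1306 OLED.
# Offsets, period, and timeout are illustrative choices, not tested values.
from typing import Tuple

# Nine positions within +/-1 pixel of the nominal origin.
OFFSETS = [(0, 0), (1, 0), (1, 1), (0, 1), (-1, 1),
           (-1, 0), (-1, -1), (0, -1), (1, -1)]

SSD1306_CMD = 0x00          # I2C control byte: following bytes are commands
SSD1306_DISPLAY_OFF = 0xAE  # Display OFF (sleep mode)
SSD1306_DISPLAY_ON = 0xAF   # Display ON


def shifted_origin(x: int, y: int, minutes: int, period: int = 5) -> Tuple[int, int]:
    """Move the drawing origin to the next offset every `period` minutes."""
    dx, dy = OFFSETS[(minutes // period) % len(OFFSETS)]
    return x + dx, y + dy


def power_command(idle_seconds: float, timeout: float = 60.0) -> bytes:
    """Bytes to write over I2C: display off when idle, on otherwise."""
    cmd = SSD1306_DISPLAY_OFF if idle_seconds >= timeout else SSD1306_DISPLAY_ON
    return bytes([SSD1306_CMD, cmd])
```

In firmware, you would call `shifted_origin()` before drawing each frame and write the bytes from `power_command()` to the display’s I2C address (commonly 0x3C). Shifting by a single pixel is usually invisible at arm’s length, which is why the offsets are kept so small.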