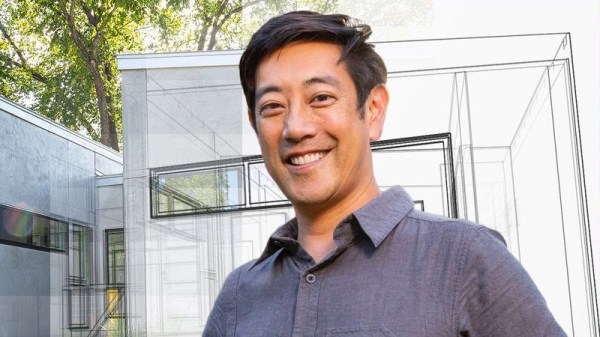

We awoke this morning to the sad news of the premature passing of Grant Imahara at the age of 49 due to a brain aneurysm. Grant was best known for his role on the wildly popular Mythbusters television show, on which he starred and built test apparatus for seasons three through twelve. He landed this role because he was as much a badass hardware hacker as he was an on-camera personality.

Grant received his degree in electrical engineering from USC in 1993 and landed a job with Lucasfilm, finding his way onto the Industrial Light and Magic team to work on blockbuster films like the Star Wars prequels (R2-D2 among other practical effects) and sequels to Terminator and The Matrix. Joining the Mythbusters team in 2005 was something of a move to rapid prototyping. Each of the 22-minute episodes operated on a 10-day build-and-film cycle in which Grant was often tasked with designing and fabricating test rigs for repeatable testing with tightly controlled parameters.

After leaving the show, Grant pursued several acting opportunities, including the Kickstarter-funded web series Star Trek Continues, which we reported on back in 2013. But he did return to the myth-busting genre with one season of White Rabbit Project on Netflix. One of the most genuinely geeky appearances Grant made was on an early season of Battlebots, where his robot ‘Deadblow’ sported a wicked spiked hammer. Video of his appearance in the quarter-finals is like a time capsule of hacker history and is guaranteed to bring a smile to your face.

Grant Imahara’s legacy is his advocacy of science and engineering. He was a role model who week after week proved that questioning how things work and testing a hypothesis to find answers are both possible and awesome. At times he did so by celebrating destructive force in the machines and apparatus he built. But it was always done with observance of safety precautions and with a purpose in mind (well, perhaps with the exception of the Battlebots). His message was that robots and engineering are cool, that being a geek means you know what the heck you’re doing, and that we can entertain ourselves through creating. His message lives on through countless kids who have grown up to join engineering teams throughout the world.

Grant was the headliner at the first Hackaday Superconference in San Francisco back in 2015. I’ve embedded the fireside chat below, where you can hear in Grant’s own words what inspired him, along with numerous stories from throughout his life.
