The really nice thing about doing something the “wrong” way is that there’s just so much variety! If you’re doing something the right way, the fastest way, or the optimal way, well, there’s just one way. But if you’re going to do it wrong, you’ve got a lot more design room.

Case in point: esoteric programming languages. The variety is stunning. There are languages intended to be unreadable, or to sound like Shakespearean sonnets, or cooking recipes, or hair-rock ballads. Some of the earliest esoteric languages were just jokes: compilations of all of the hassles of “real” programming languages of the time, but yet made to function. Some represent instructions as a grid of colored pixels. Some represent the code in a fashion that’s tantamount to encryption, and the only way to program them is by brute forcing the code space. Others, including the notorious Brainf*ck are actually not half as bad as their rap — it’s a very direct implementation of a Turing machine.

Case in point: esoteric programming languages. The variety is stunning. There are languages intended to be unreadable, or to sound like Shakespearean sonnets, or cooking recipes, or hair-rock ballads. Some of the earliest esoteric languages were just jokes: compilations of all of the hassles of “real” programming languages of the time, but yet made to function. Some represent instructions as a grid of colored pixels. Some represent the code in a fashion that’s tantamount to encryption, and the only way to program them is by brute forcing the code space. Others, including the notorious Brainf*ck are actually not half as bad as their rap — it’s a very direct implementation of a Turing machine.

So you have a set of languages that are designed to be maximally unlike each other, or traditional programming languages, and yet still be able to do the work of instructing a computer to do what you want. And if you squint your eyes just right, and look at as many of them all together as you can, what emerges out of this blobby intersection of oddball languages is the essence of computing. Each language tries to be as wrong as possible, so what they have in common can only be the unavoidable core of coding.

While it might be interesting to compare an contrast Java and C++, or Python, nearly every serious programming language has so much in common that it’s just not as instructive. They are all doing it mostly right, and that means that they’re mostly about the human factors. Yawn. To really figure out what’s fundamental to computing, you have to get it wrong.

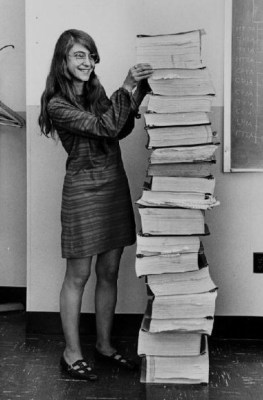

Or rather full handed. Every computer language that I could find had comments from the beginning. FORTRAN had comments, marked by a “C” as the first character in a line. APL had comments, marked by the bizarro rune ⍝. Even the custom language written for the

Or rather full handed. Every computer language that I could find had comments from the beginning. FORTRAN had comments, marked by a “C” as the first character in a line. APL had comments, marked by the bizarro rune ⍝. Even the custom language written for the