When you’re operating a machine that’s powerful enough to tear a solid metal block to shards, it pays to be attentive to details. The angular momentum of the spindle of a modern CNC machine can be trouble if it gets unleashed the wrong way, which is why generations of machinists have developed an ear for the telltale sign of impending doom: chatter.

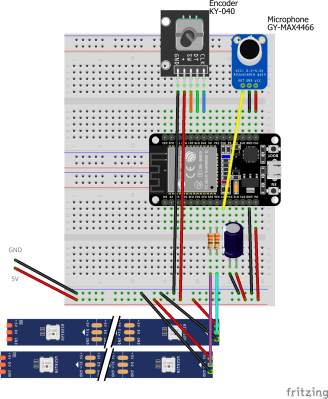

To help develop that ear, [Zachary Tong] did a spectral analysis of the sounds of his new CNC machine during its “first chip” outing. The benchtop machine is no slouch – an Avid Pro 2436 with a 3 hp S30C tool-changing spindle. But like any benchtop machine, it lacks the sheer mass needed to reduce vibration, and tool chatter can be a problem.

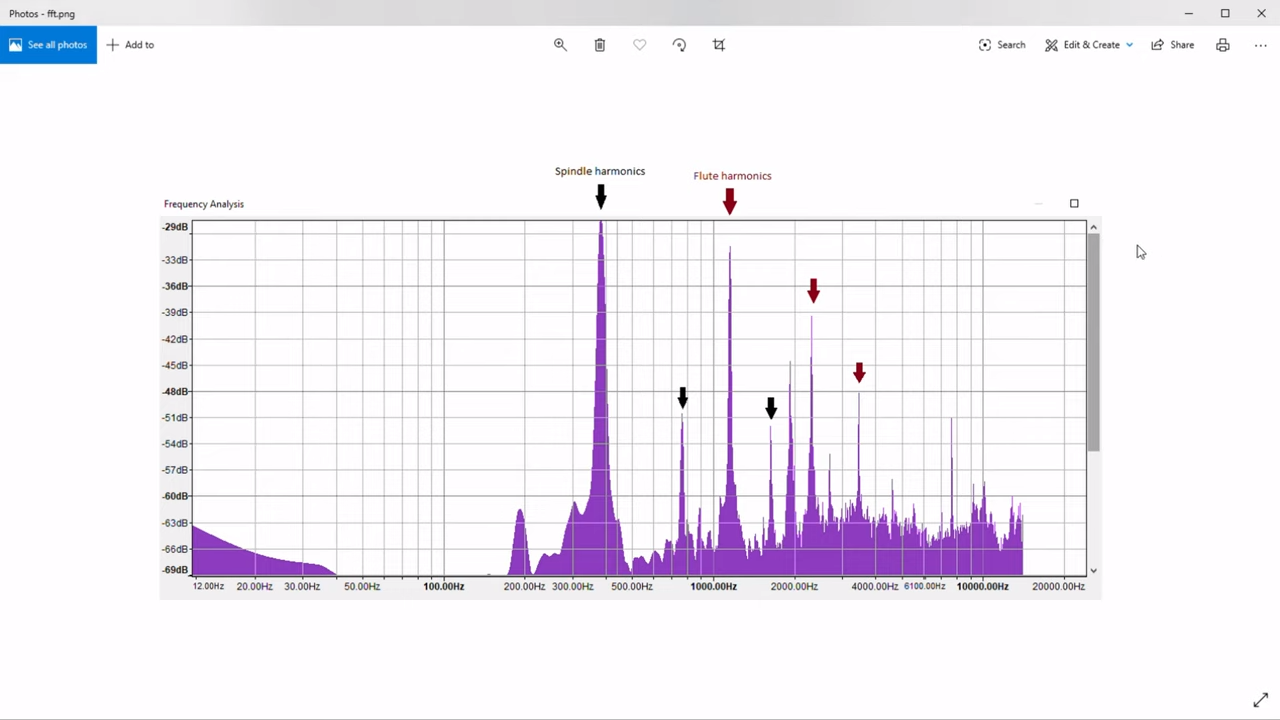

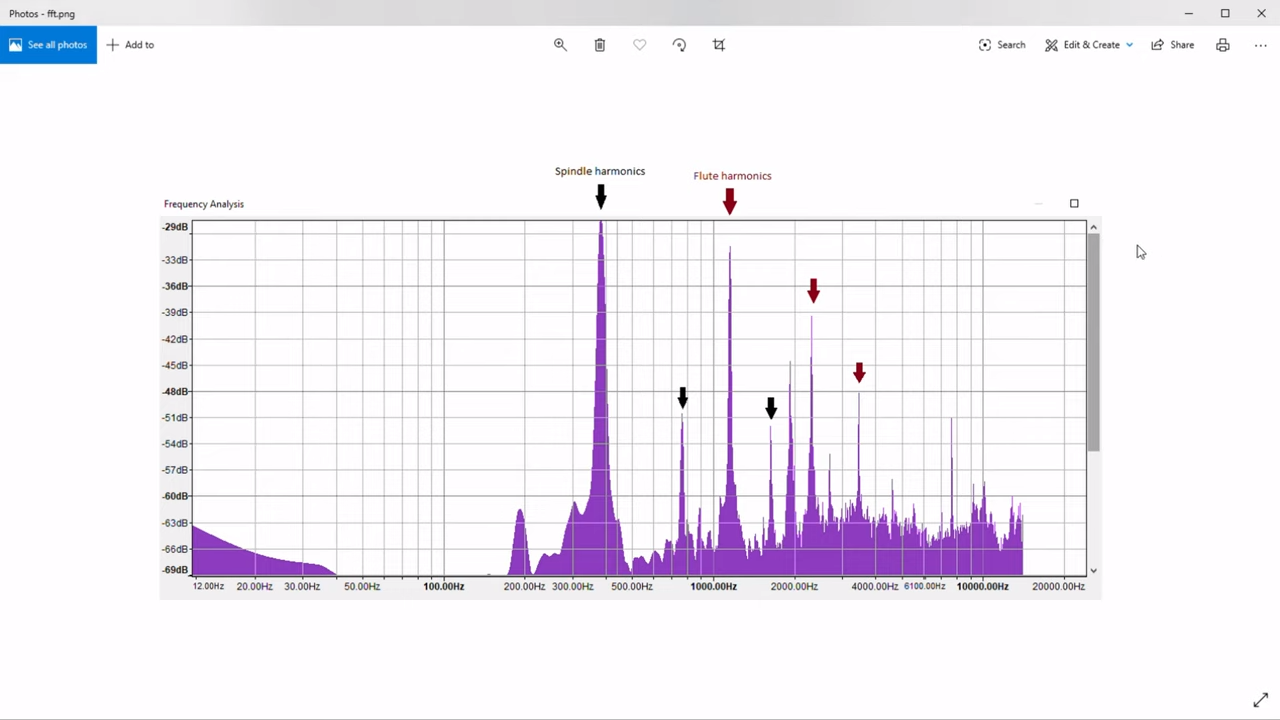

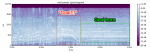

The analysis begins at about the 5:13 mark in the video below, where [Zach] fed the soundtrack of his video into Audacity. Switching from waveform to spectrogram mode, he was able to identify a strong signal at about 5,000 Hz, corresponding to the spindle coming up to speed. The white noise of the mist cooling system was clearly visible too, as were harmonic vibrations up and down the spectrum. Most interesting, though, was the slight dip in frequency during the cut, indicating loading on the spindle. [Zach] then analyzed the data from the cut in the frequency domain and found the expected spindle harmonics, as well as the harmonics from the three flutes on the tool. Mixed in among these were spikes indicating chatter: nothing major, but still enough to measure.
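
The reasoning behind picking chatter out of the spectrum can be sketched in a few lines of Python. A spindle turning at a given RPM rotates at RPM/60 Hz, and a three-flute tool strikes the work three times per revolution, so both the spindle and flute (tooth-passing) components land on integer multiples of the rotation frequency. Peaks that fall well away from those multiples are chatter candidates. This is not [Zach]'s workflow, which used Audacity. It is a minimal sketch under stated assumptions: the 12,000 RPM spindle speed, 8 kHz sample rate, 20 Hz tolerance, peak threshold, and the synthetic tones (including a made-up 1,730 Hz "chatter" tone) are all hypothetical. It also uses a naive DFT instead of an FFT library to stay self-contained.

```python
import cmath
import math


def tooth_passing_hz(rpm, flutes):
    """Frequency at which successive flutes strike the work (Hz)."""
    return rpm / 60.0 * flutes


def expected_harmonics(rpm, max_hz):
    """Integer multiples of the spindle rotation frequency up to max_hz.

    Tooth-passing harmonics are every `flutes`-th entry of this list,
    so they are already covered."""
    spindle_hz = rpm / 60.0
    count = int(max_hz // spindle_hz)
    return [k * spindle_hz for k in range(1, count + 1)]


def magnitude_spectrum(samples, sample_rate):
    """Naive O(n^2) DFT returning (frequency_hz, amplitude) for bins 0..n/2.

    Scaled so a bin-aligned sinusoid of amplitude A shows up as about A."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(samples))
        spectrum.append((k * sample_rate / n, 2 * abs(acc) / n))
    return spectrum


def find_peaks(spectrum, threshold):
    """Frequencies of local maxima whose amplitude is at least threshold."""
    peaks = []
    for i in range(1, len(spectrum) - 1):
        freq, mag = spectrum[i]
        if mag >= threshold and mag > spectrum[i - 1][1] and mag >= spectrum[i + 1][1]:
            peaks.append(freq)
    return peaks


def chatter_candidates(peaks, harmonics, tolerance_hz):
    """Peaks that are not within tolerance_hz of any expected harmonic."""
    return [f for f in peaks if all(abs(f - h) > tolerance_hz for h in harmonics)]


# Hypothetical recording parameters and cutting conditions.
SAMPLE_RATE = 8000   # Hz
N = 800              # 0.1 s window, giving 10 Hz bins
RPM = 12000          # spindle rotation frequency = 200 Hz
FLUTES = 3           # three-flute tool, as in the video

# Synthetic test signal: spindle, tooth-passing, a harmonic, and a fake chatter tone.
tones = {200: 1.0, 600: 0.8, 1200: 0.4, 1730: 0.5}
signal = [sum(a * math.sin(2 * math.pi * f * t / SAMPLE_RATE) for f, a in tones.items())
          for t in range(N)]

spectrum = magnitude_spectrum(signal, SAMPLE_RATE)
peaks = find_peaks(spectrum, threshold=0.1)
harmonics = expected_harmonics(RPM, SAMPLE_RATE / 2)
candidates = chatter_candidates(peaks, harmonics, tolerance_hz=20)

print("tooth-passing:", tooth_passing_hz(RPM, FLUTES), "Hz")  # 600.0 Hz
print("peaks:", peaks)                                       # [200.0, 600.0, 1200.0, 1730.0]
print("chatter candidates:", candidates)                     # [1730.0]
```

The synthetic tones are chosen to fall exactly on DFT bins, which avoids spectral leakage and lets the example skip windowing. A real soundtrack would need a window function (such as Hann) and probably averaging over several frames before the peaks are this clean.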

Audacity has turned out to be an incredibly useful tool with a broad range of applications. Whether it be finding bats, dumping ROMs, detecting lightning strikes, or cloning remote controls, Audacity is often the hacker’s tool of choice.

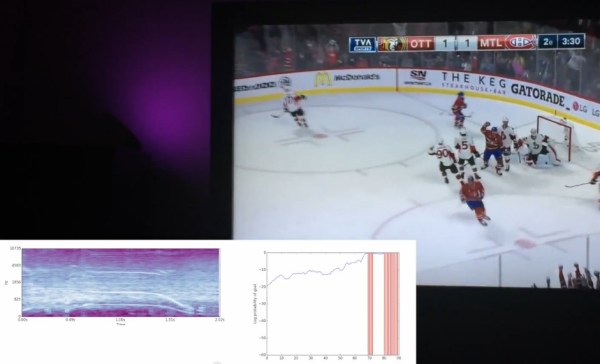

[François] started off by listening to the audio of some recorded games. Whenever a goal is scored, the commentator yells out and the goal horn is sounded. This makes it pretty obvious to the listener that a goal has been scored, but detecting it with a computer is a bit harder. [François] also wanted to detect when his home team scored a goal, but not when the opposing team scored, making the problem even more complicated!