Why build a telescope? YOLO, as the kids say. Having decided that, one must decide what type of far-seer one will construct. For his 10″ reflector, [Carl Anderson] once again said “Yolo”, this time not as a slogan but in reference to a little-known type of reflecting telescope.

The Yolo-pattern telescope was proposed by [Art Leonard] back in the 1960s, and was apparently named for a county in California. It differs from the standard Newtonian reflector in that it uses two concave spherical mirrors of very long radius to produce a light path with no obstructions. (That sets it apart from the similar Schiefspiegler, which uses a convex secondary.) The Yolo never caught on, in part because of the need to stretch the primary mirror in a warping rig to correct for coma and astigmatism.

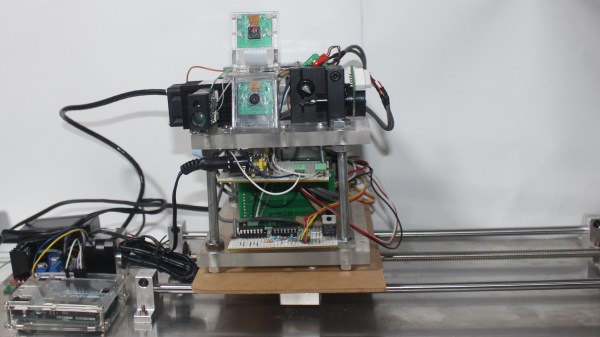

[Carl] doesn’t bother with that, instead using modern techniques to precisely calculate and grind the required toric profile into the mirror. Grinding and polishing were done on motorized jigs [Carl] built, save for the very final polishing. (A quick demo video of the polishing machine is embedded below.)

The body of the telescope is a wooden truss, sheathed in plywood. Three-point mirror mounts allowed for the final adjustment. [Carl] seems to prefer observing by eye to astrophotography, as there are no photos taken through the telescope. Of course, an astrophotographer probably would not have built an f/15 (yes, fifteen) telescope to begin with. The view through the eyepiece on the rear end must be astounding.
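For a sense of why f/15 is slow going for imaging, here is a back-of-the-envelope sketch of the arithmetic. It assumes the 10″ figure is the clear aperture and that f/15 describes the whole optical system; the f/5 comparison scope is purely hypothetical, chosen as a typical fast Newtonian. Focal length is focal ratio times aperture, and exposure time on an extended object scales with the square of the focal ratio.

```python
# Back-of-the-envelope focal-ratio arithmetic (illustrative only).
# Assumptions: 10-inch clear aperture, f/15 for the whole system,
# and a hypothetical f/5 telescope as the point of comparison.
MM_PER_INCH = 25.4


def focal_length_mm(aperture_in: float, f_ratio: float) -> float:
    """Focal length = focal ratio x aperture diameter."""
    return aperture_in * MM_PER_INCH * f_ratio


def relative_exposure(f_ratio: float, reference_f_ratio: float) -> float:
    """Exposure time on an extended object scales as the focal ratio squared."""
    return (f_ratio / reference_f_ratio) ** 2


yolo_focal_length = focal_length_mm(10, 15)
exposure_penalty = relative_exposure(15, 5)

print(f"Focal length: {yolo_focal_length:.0f} mm")
print(f"Exposure vs. f/5: {exposure_penalty:.0f}x longer")
```

Under those assumptions the light path works out to nearly four meters of focal length, and a nebula would need roughly nine times the exposure it would at f/5. That long focal length is also what gives high magnification with comfortable eyepieces, which suits visual observing.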

If you’re inspired to spend your one life scratch-building a telescope, but want something more conventional, check out this comprehensive guide. You can go a bit more modern with 3D-printed parts, but you probably don’t want to try spin-casting resin mirrors. Or maybe you do: YOLO!
