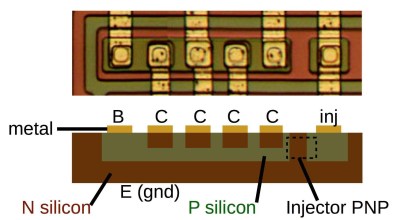

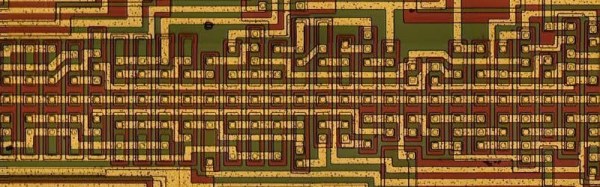

It must be everyone’s birthday today because [Ken Shirriff] has come out with a gift for us. He’s done another pass at reverse engineering the 76477 Space Invaders sound chip from the 1970s and found it’s full of integrated injection logic (I2L). That makes it a double treat: we get to explore more of the chip that made sounds for so many of our favorite games, and we get to explore a type of logic that was meant to succeed TTL until CMOS came along.

This article has a similar shape to his last one: it first introduces I2L, then shows us what it looks like on the die, and then covers the functional elements that make heavy use of it. The first of these is the noise generator, made up of a section of shift registers and a ring oscillator. That’s followed by a noise filter, which doesn’t use I2L but does use current mirrors. Lastly, he talks about the mixer, which combines the output of the noise generator with two elements covered in his previous article: the voltage-controlled oscillator and the super-low frequency oscillator. Oddly enough, as he points out, it isn’t an analog mixer. Instead, it simply ANDs the various inputs together.
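
To get a feel for a shift-register noise source feeding an AND "mixer", here is a minimal behavioral sketch in Python. It is not a model of the 76477's actual circuit: the article doesn't give the shift register's length or feedback taps, so the 17-bit register with taps at 17 and 14 is a hypothetical choice (it does give a maximal-length sequence), the plain square waves are stand-ins for the VCO and SLF outputs, and all function names are our own. Each sample index plays the role of one clock tick, which on the chip would come from the ring oscillator.

```python
from itertools import islice

def lfsr_step(state, width, taps):
    """Advance a Fibonacci LFSR one clock. Tap t reads bit (width - t)."""
    fb = 0
    for t in taps:
        fb ^= (state >> (width - t)) & 1
    return (state >> 1) | (fb << (width - 1))

def lfsr_bits(seed, width=17, taps=(17, 14)):
    """Yield pseudo-random noise bits. Width and taps are hypothetical, not the 76477's."""
    if not 0 < seed < (1 << width):
        raise ValueError("seed must be nonzero and fit in `width` bits")
    state = seed
    while True:
        yield state & 1  # output the bit about to be shifted out
        state = lfsr_step(state, width, taps)

def lfsr_period(seed, width, taps):
    """Count clocks until the register returns to its starting state."""
    state, steps = lfsr_step(seed, width, taps), 1
    while state != seed:
        state, steps = lfsr_step(state, width, taps), steps + 1
    return steps

def square_wave(period):
    """50% duty-cycle square wave; a stand-in for the VCO or SLF output."""
    half = period // 2
    while True:
        for i in range(period):
            yield 1 if i < half else 0

def and_mixer(*sources):
    """Digital 'mixer': the output is high only when every input is high."""
    for samples in zip(*sources):
        yield int(all(samples))

if __name__ == "__main__":
    mixed = and_mixer(lfsr_bits(1), square_wave(8), square_wave(64))
    print("".join(str(b) for b in islice(mixed, 64)))
```

Because the mixer is just an AND, the noise only gets through while the slow square wave (standing in for the SLF) is high, so the result is bursts of gated noise rather than a blended analog signal.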

[Ken’s] no stranger to putting dies under the microscope. Check out our coverage of his talk at the 2016 Hackaday SuperConference, where he shows us the guts of such favorites as the Z80 and the 555 timer IC.

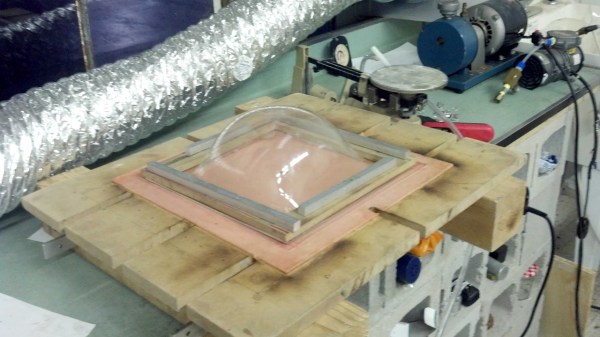

All you need is a way to heat the plastic, clamp it down around the edges, and then blow air into it as you would when blowing up a balloon. Of course, there are things to watch out for, such as making sure the plastic is heated evenly and letting it cool slowly afterward, but he covers all that on his hackaday.io page.

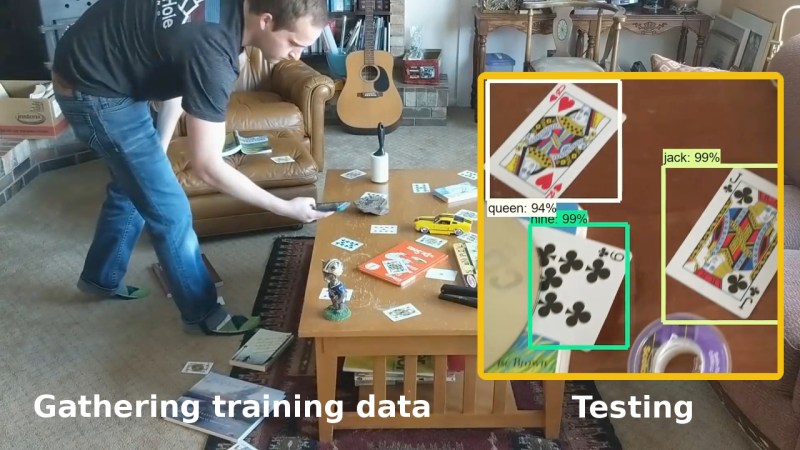

You’ll need a few hundred images of your objects. These can either be scraped from an online source like Google Images, or you can take your own photos. If you take the latter approach, make sure to shoot from various angles and rotations, and under different lighting conditions. Fill the background with other things, and even have some things partially obscuring your objects. This may sound like a long, tedious task, but it can be done efficiently. [Edje Electronics] is working on recognizing playing cards, so he first sprinkled them around his living room, added some clutter, and walked around taking pictures with his phone. Once they were uploaded, some easy-to-use software helped him label them all in around an hour. Note that he trained on 24 different objects, which is the number of different cards you get in a