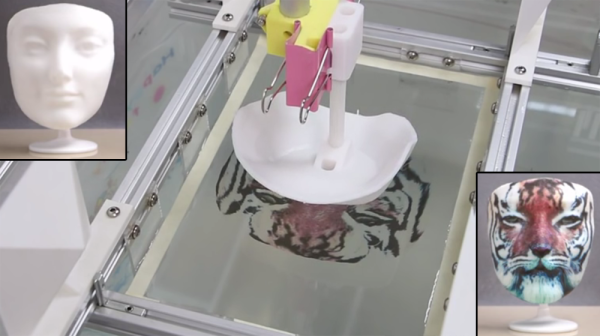

Hydrographic printing is a technique for transferring colored inks from a film onto the surface of an object. The film is floated on water and activated with a chemical that lets it adhere to an object that is physically pushed onto it. Researchers at Zhejiang University and Columbia University have taken hydrographic printing to the next level (pdf link). In a technical paper to be presented at ACM SIGGRAPH 2015 in August, they explain how they developed a computational method to create complex patterns that are precisely aligned to the object.

Typically, repetitive patterns are used because the object stretches the adhesive film, and anything more complex would visibly distort during this imprecise process. Hydrographic printing is commonly used to decorate car parts, especially rims and grilles. If you’ve ever seen a carbon-fiber pattern without the actual fiber, it was probably applied with hydrographic printing.

The physical setup for this hack is fairly simple: a vat of water, a linear motor attached to a gripper, and a Kinect. The object is attached to the gripper, and the Kinect measures its location and orientation. This data is applied to a 3D scan of the object, along with the desired texture map to be printed onto it. A program then runs a virtual simulation of the printing process and outputs a specific pattern for the film that accounts for the warping inherent to the process. That pattern is printed onto the film using an ordinary inkjet printer.
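
To make the idea of a warp-compensated pattern concrete, here is a minimal Python sketch. It is not the researchers' method: their simulation models how the film actually stretches, while this toy assumes a hemisphere pushed straight down and a film that sticks to whatever surface point sits directly above it. The function names (`film_to_uv`, `sample`, `make_film_pattern`) and the hemisphere itself are hypothetical, chosen only for illustration. The part that carries over is the lookup direction: for each pixel of the film, ask where it will land on the object, then copy the texture color from that spot.

```python
import math

def film_to_uv(x, y, radius=1.0):
    """Hypothetical stand-in for the simulation's output.

    Toy assumption: a hemisphere is lowered straight down and each film
    point sticks to the surface point directly above it (no stretching
    or flow is modeled). Returns texture coordinates (u, v) in [0, 1],
    or None if the film point never touches the object.
    """
    r2 = x * x + y * y
    if r2 > radius * radius:
        return None
    z = math.sqrt(radius * radius - r2)            # depth of the contact point
    u = (math.atan2(y, x) + math.pi) / (2 * math.pi)   # longitude -> u
    v = math.acos(min(1.0, z / radius)) / (math.pi / 2)  # polar angle -> v
    return u, v

def sample(texture, u, v):
    """Nearest-neighbour lookup in a texture stored as a list of rows."""
    rows, cols = len(texture), len(texture[0])
    i = min(int(v * rows), rows - 1)
    j = min(int(u * cols), cols - 1)
    return texture[i][j]

def make_film_pattern(texture, size, radius=1.0, blank=0):
    """Build the image to print on the film, one film pixel at a time."""
    film = []
    for row in range(size):
        y = (2 * (row + 0.5) / size - 1) * radius   # pixel centre in film space
        line = []
        for col in range(size):
            x = (2 * (col + 0.5) / size - 1) * radius
            uv = film_to_uv(x, y, radius)
            line.append(blank if uv is None else sample(texture, *uv))
        film.append(line)
    return film

# A 2x2 "texture" of color ids, rendered to a 4x4 film image.
texture = [[1, 2],
           [3, 4]]
film = make_film_pattern(texture, size=4)
```

Swapping `film_to_uv` for a mapping derived from a real simulation and the measured object pose would leave the rest of the loop unchanged, which is why the sketch is organized around that single function.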

The tiger mask is our personal favorite, along with the leopard cat. They illustrate just how complex the surface patterns can get using single or multiple immersions, respectively. The system also handles objects of various shapes and sizes, though the researchers admit there is a physical limit to how concave the parts of an object can be: if the film is stretched too thin, colors will fade or the film will split. Texture mapping can now be physically realized in a simple yet effective way, with amazing results.
