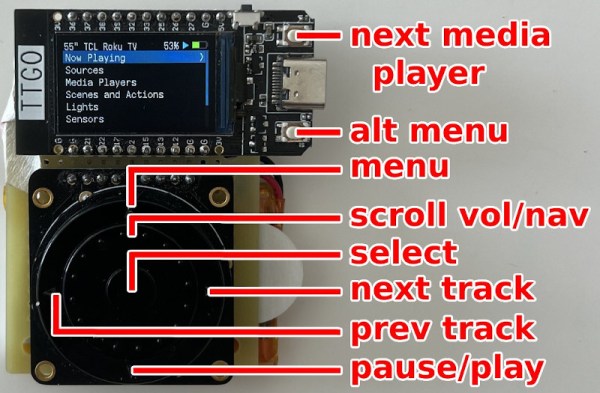

You can achieve a lot with a Dremel. For instance, apparently you can slim the original NES down into a handheld form factor. Both the CPU and the PPU (Picture Processing Unit) are 40-pin DIP chips, which makes miniaturizing the NES a bit tricky. [Redherring32] wasn’t one to be stopped by this, however, and turned these DIP chips into QFN-style-mounted dies (Nitter) using little more than a Dremel cutting wheel. Why? To bring his TinyTendo handheld game console project to fruition, of course.

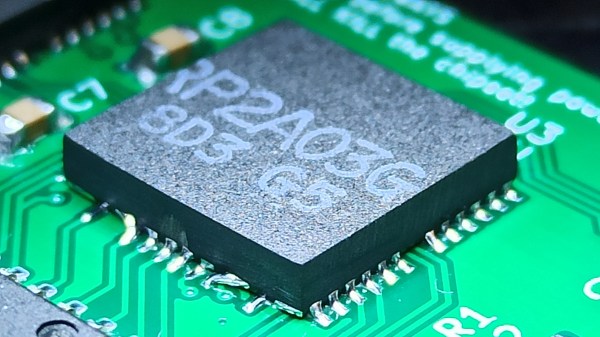

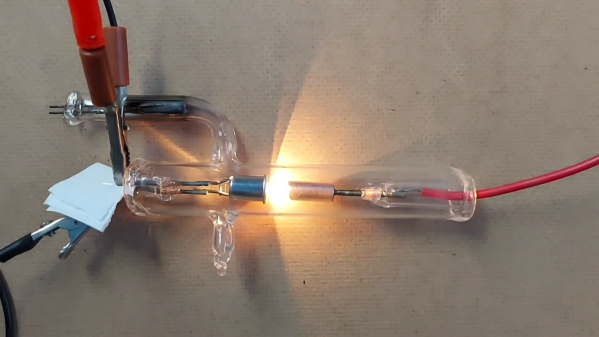

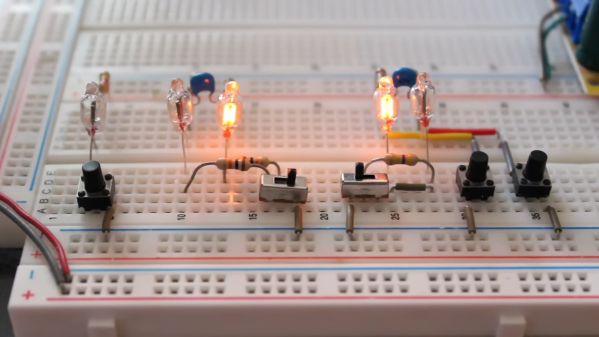

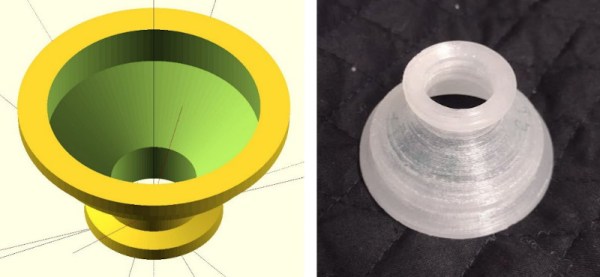

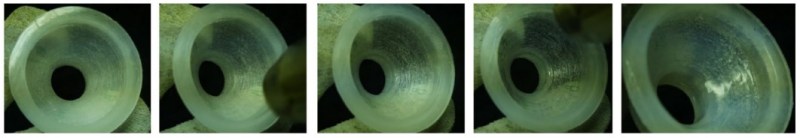

A DIP chip’s contacts fan out from the die through a web of metal pins called the leadframe. [Redherring32] cuts into that leadframe and keeps only the useful part of the chip, with the remaining leadframe pieces serving as QFN-like contact pads. The cut-down chip is then mounted onto a tailored footprint on the TinyTendo PCB, where it connects to all the other components that are, thankfully, possible to acquire in SMD form nowadays.

This trick works consistently, and we’re no doubt going to see the TinyTendo released as a standalone project soon. Just a year ago, we saw [Redherring32] cut into these chips, and wondered what the purpose could’ve been. Now we know: it’s a logical continuation of his OpenTendo project, a reverse-engineered and redesigned mainboard for the original NES, an effort no doubt appreciated by many a NES enthusiast out there. Usually, people don’t cut the actual chips down to a small size – instead, they cut into the mainboards in a practice called ‘trimming’, which has brought us many miniature original-hardware-based game console builds over the years.
