Back in January when we announced the Train All the Things contest, we weren’t sure what kind of entries we’d see. Machine learning is a huge and rapidly evolving field, after all, and the traditional barriers that computationally intensive processes face have been falling just as rapidly. Constraints are fading away, and we want you to explore this wild new world and show us what you come up with.

Where Do You Run Your Algorithms?

To give your effort a little structure, we’ve come up with four broad categories:

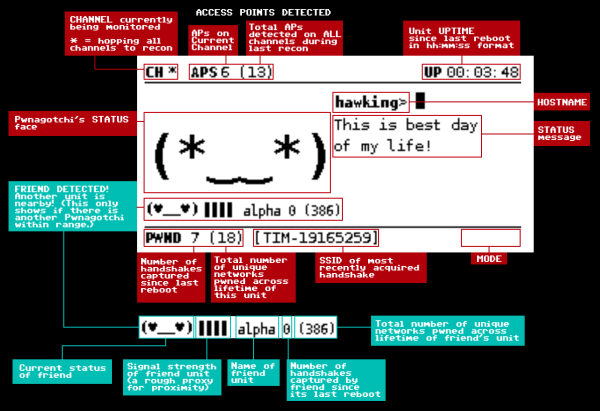

- Machine Learning on the Edge

- Edge computing, where systems reach out to cloud resources but run locally, is all the rage. It allows you to leverage the power of ~~other people’s computers~~ the cloud for training a model, which is then executed locally. Edge computing is a great way to keep your data local.

- Machine Learning on the Gateway

- Pis, old routers, what-have-yous – we’ve all got a bunch of devices lying around that bridge the space between your local world and the cloud. What can you come up with that takes advantage of this unique computing environment?

- Machine Learning in the Cloud

- Forget about subtle — this category unleashes the power of the cloud for your application. Whether it’s Google, Azure, or AWS, show us what you can do with all that raw horsepower at your disposal.

- Artificial Intelligence Blinky

- Everyone’s “hardware ‘Hello, world’” is blinking an LED, and this is the machine learning version of that. We want you to use a simple microcontroller to run a machine learning algorithm. Amaze us with what you can make an Arduino do.

These Hackers Trained Their Projects, You Should Too!

We’re a little more than a month into the contest. We’ve seen some interesting entries, but of course we’re hungry for more! Here are a few that have caught our eye so far:

- Intelligent Bat Detector – [Tegwyn☠Twmffat] has bats in his… backyard, so he built this Jetson Nano-powered device to capture their calls and classify them by species. It’s a fascinating adventure at the intersection of biology and machine learning.

- Blackjack Robot – RAIN MAN 2.0 is [Evan Juras]’ cure for the casino adage of “The house always wins.” We wouldn’t try taking the Raspberry Pi card counter to Vegas, but it’s a great example of what YOLO can do.

- AI-enabled Glasses – AI meets AR in ShAIdes, [Nick Bild]’s sunglasses equipped with a camera and Nano to provide a user interface to the world. Wave your hand over a lamp and it turns off. Brilliant!

You’ve got till noon Pacific time on April 7, 2020 to get your entry in, and four winners from each of the four categories will be awarded a $100 Tindie gift card, courtesy of our sponsor Digi-Key. It’s time to ramp up your machine learning efforts and get a project entered! We’d love to see more examples of straight cloud AI applications, and the AI blinky category remains wide open at this point. Get in there and give machine learning a try!