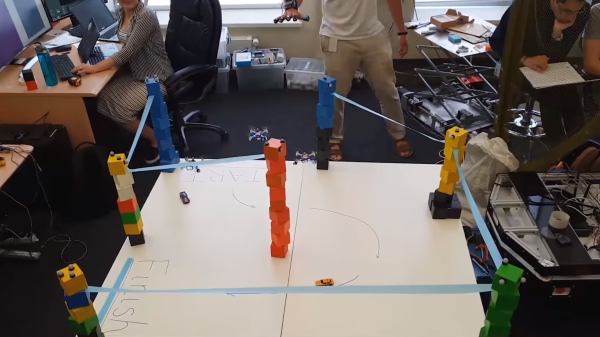

Controlling a single drone takes considerable concentration and normally involves wearing silly goggles. It only gets harder if you want to control a swarm. Researchers at the Skolkovo Institute of Science and Technology (Skoltech) decided Jedi mind tricks were the best way, and set up swarm control using hand gestures.

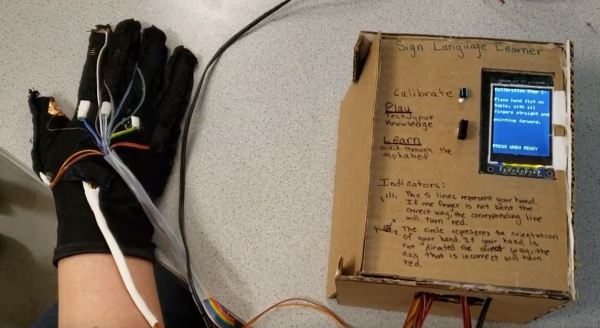

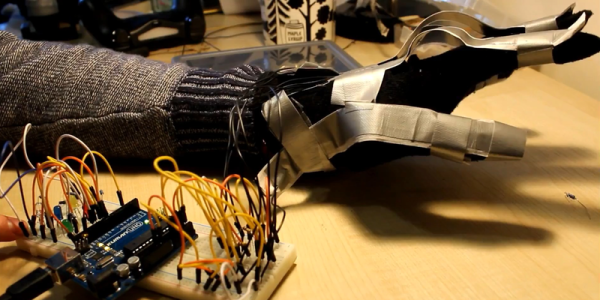

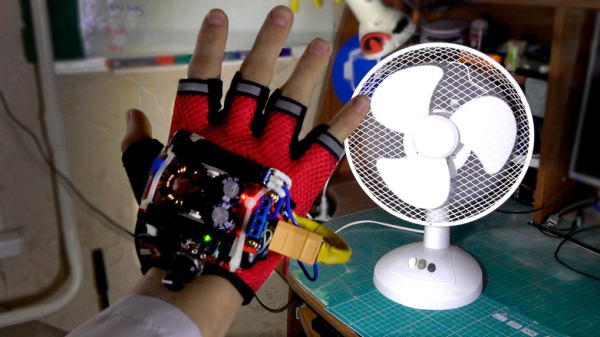

We’ve seen something similar at the Intel booth at the 2016 Maker Faire. In that demo, a single drone was controlled by hand gestures using a hacked Nintendo Power Glove. The Skoltech approach builds on that concept with a lot of innovation. For one, haptics in the fingertips of the glove provide feedback on the current behavior of the drones. Through their research, they found that most operators quickly learned to interpret the vibrations subconsciously.

The haptic feedback also increased the safety of the swarm, which is a prime factor in making these technologies usable outside of the lab. Most of us have at one point frantically typed commands into a terminal or pulled cords to keep a project from destroying itself or behaving dangerously. Having intuitive control means that an operator can react quickly to changes in the swarm's behavior.

The biggest advantage, which can be seen in the video after the break, is that the hand control eliminates much of the preprogramming of paths that is currently common in swarm robotics. With tech like this, we can imagine a person quickly being trained on drone swarms and then using them to do things like construction surveys with ease. As an added bonus, the researchers were nice enough to post a preprint of their paper on arXiv for any readers who would like to get into the specifics.
