Before the Internet became the advertisement generator we know and love today, interspersed with interesting information here and there, it was a network of computers linking mostly universities. This was even before the World Wide Web and HTML, which meant that the people using these proto-networks, mostly researchers and other academics, had to build from the ground up things we might now take for granted. One of those was one of the first search engines, built by the librarians who were cataloging all of the research in their universities and using their relatively primitive computer networks to store and retrieve all of this information.

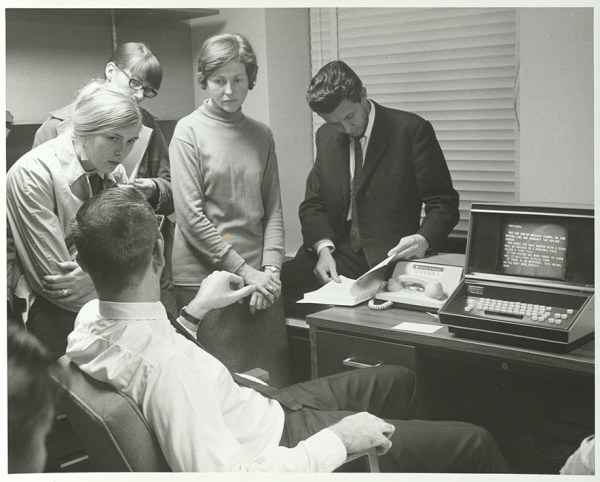

This search engine was called SUPARS, the Syracuse University Psychological Abstracts Retrieval Service. It was originally built for psychology research papers, and perhaps unsurprisingly the psychologists at the university also used this new system as the basis for understanding how humans would interact with computers. This was the 1970s after all, and most people had never used a computer, so documenting how they used the search engine led to some important breakthroughs in the way we think about designing systems like these.

The search engine was technically revolutionary for the time as well. It was among the first to allow text to be searched within documents, and it saved previous searches for users and researchers to access and learn from. The experiment was driven by the need to support researchers in a future where reference librarians would need assistance dealing with more and more information in their libraries, and it highlighted the challenges of vocabulary control in free-text searching.

The visionaries behind SUPARS recognized the changing landscape of research and designed for a future that would rely on networked computer systems. Their contributions expanded the understanding of how technology could shape human communication and effectiveness, and while they might not have imagined the world we are currently in, they certainly paved the way for the advances that led to the widespread adoption of this technology even outside a university setting. There were some false starts along that path, though.