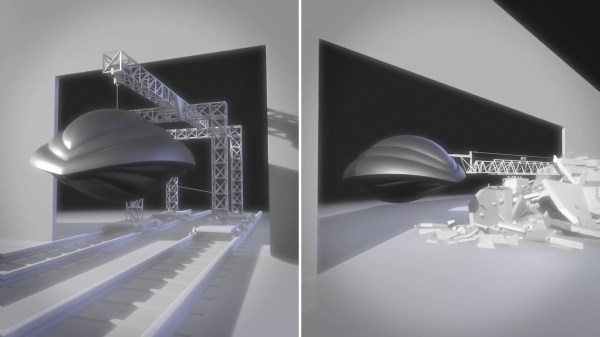

[Captain Disillusion] has earned a reputation on YouTube for debunking hoaxes and spreading a healthy sense of skepticism, all while maintaining some of the highest production values on the platform and pretending to be some kind of inter-dimensional superhero. You’ve likely seen him carefully explain how some viral video was faked, with a generous dose of sarcastic humor and his own impressive visual effects. VFXcool is a series on his channel that takes deep dives into movies that are historically significant in the effects industry. For this installment, [Captain Disillusion]’s “intern”, [Alan], takes over to break down how filmmakers brought a futuristic spaceship to life in 1986’s Flight of the Navigator.

Making a movie requires hacks upon hacks, and that goes double for an era when the technology and techniques we now take for granted were still being developed even as they were being put to film. The range of topics covered here is extreme: from full-scale props to models; from robotic motion control rigs to stop motion animation; from early computer graphics to the convoluted optical compositing that was necessary before digital workflows were possible. The tools themselves may be outdated, but understanding their history and the processes behind them gives deeper insight into how we accomplish these kinds of effects today. And, really, it’s just so… cool.

[Captain Disillusion]’s previous VFXcool installment is all about the Back to the Future trilogy; it’s a little shorter and has more information on motion control rigs. We also love seeing how people make DIY effects in their own homes, and LEGO seems to be a surprisingly popular option for building whole scenes in amateur filmmaking.

Continue reading “Obsessively Explaining The Visual Effects In Flight Of The Navigator”