How do I get the data off this destroyed phone? It’s a question many of us have had to ponder – either ourselves or for friends or family. The easy answer is either spend a mint for a recovery service or consider it lost forever. [Trochilidae] didn’t accept either of those options, so he broke out the soldering iron and rescued his own data.

A moment’s inattention with a child near a paddling pool left [Trochilidae’s] coworker’s wife with a waterlogged, dead phone. She immediately took apart the phone and attempted to dry it out, but it was too late. The phone was a goner. It also had four months of photos and other priceless data on it. [Trochilidae] was brought in to try to recover the data.

The phone was dead, but chances are the data stored within it was fine. Most devices built in the last few years use eMMC flash devices as their secondary storage. eMMC stands for Embedded Multimedia Card. What it means is that the device not only holds the flash memory array, it also contains a flash controller which handles wear leveling, flash writing, and host interface. The controller can be configured to respond exactly like a standard SD card.

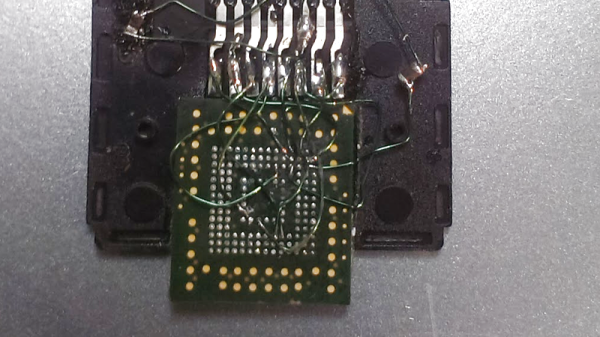

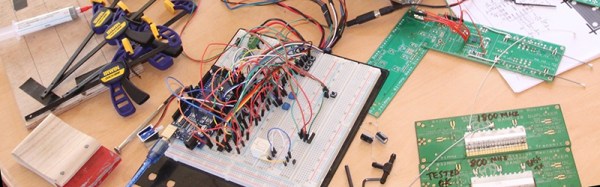

The hard part is getting a tiny 153 ball BGA package to fit into an SD card slot. [Trochilidae] accomplished that by cutting open a microSD to SD adapter. He then carefully soldered the balls from the eMMC to the pins of the adapter. Thin gauge wire, a fine tip iron, and a microscope are essentials here. Once the physical connections were made, [Trochilidae] plugged the card into his Linux machine. The card was recognized, and he managed to pull all the data off with a single dd command.

[Trochilidae] doesn’t say what happened after the data was copied, but we’re guessing he analyzed the dump to determine the filesystem, then mounted it as a drive. The end result was a ton of recovered photos and a very happy coworker.

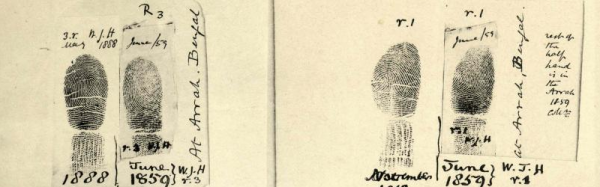

If you like crazy soldering exploits, check out this PSP reverse engineering hack, where every pin of a BGA was soldered to magnet wire.