Flying a quadcopter or other drone can be pretty exciting, especially when piloting from the onboard video feed. It’s almost like a real-life video game or flight simulator, except that the aircraft is real. To bring this experience even closer to actual flight, [Kevin] implemented stereo vision on his quadcopter, which also adds an impressive amount of functionality to the drone.

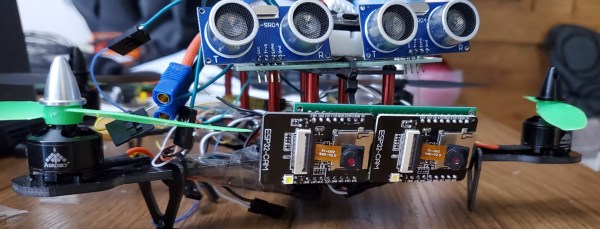

While he doesn’t use this particular setup for drone racing or virtual reality, there are some other interesting things that [Kevin] is able to do with it. The two cameras, both ESP32 camera modules, work as a stereo pair to determine distances to objects. By offloading some of the processing to Amazon’s cloud computing services, the quadcopter is able to recognize a face and hold a fixed distance from it without needing power-hungry computing onboard.
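
For the curious, here is a rough sketch of how a stereo pair can turn a detected face into a distance, and how that distance could drive a simple "hold position" command. The write-up doesn’t say how [Kevin] actually does it, so treat this as an illustration only: it assumes two rectified, horizontally aligned cameras and uses the textbook pinhole relation (distance = focal length × baseline / disparity) plus a plain proportional controller. The function names and all of the numbers are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, x_left_px, x_right_px):
    """Estimate distance (meters) to a point seen by both cameras.

    Assumes rectified cameras: the same point lands on the same image row,
    shifted horizontally by the disparity. All parameters are illustrative,
    not values from the project.
    """
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity


def pitch_command(distance_m, target_m, gain=0.5, limit=1.0):
    """Proportional command to hold a fixed distance from the face.

    Positive output means "move toward the face", negative means "back away".
    The result is clamped to [-limit, limit].
    """
    command = gain * (distance_m - target_m)
    return max(-limit, min(limit, command))


if __name__ == "__main__":
    # Hypothetical numbers: 600 px focal length, cameras 10 cm apart,
    # face center at x=350 px in the left image and x=320 px in the right.
    distance = depth_from_disparity(600.0, 0.10, 350.0, 320.0)
    print(f"estimated distance: {distance:.2f} m")
    print(f"pitch command: {pitch_command(distance, target_m=1.5):+.2f}")
```

Note that the closer the face gets, the larger the disparity, so a small-baseline rig like this is most accurate at short range, which is about where you’d want a face-following drone to hover anyway.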

Offloading its resource-hungry tasks to the cloud unlocks other abilities for this drone as well. It can be flown from a smartphone or tablet, and it has its own web client where the user can watch the facial recognition being performed. Presumably it wouldn’t be too difficult to use this drone for other tasks where stereoscopic vision is a requirement.

Thanks to [Ilya Mikhelson], a professor at Northwestern University, for this tip about a student’s project.