Modern processors come with all kinds of power management features, which you don’t typically notice as a user until you start a heavy program and hear the CPU fan spin up. Back in the early 1990s however, power management was largely unheard of, meaning that a CPU with nothing to do would run through an idle loop that dissipated about as much power as a real computing task. [Michal Necasek] noticed this while experimenting with DR-DOS 6.0 in a virtual machine – his laptop fan would start running on full blast whenever he opened the VM. His search for a solution to this annoyance led him down a fascinating journey into the intricacies of DOS power management.

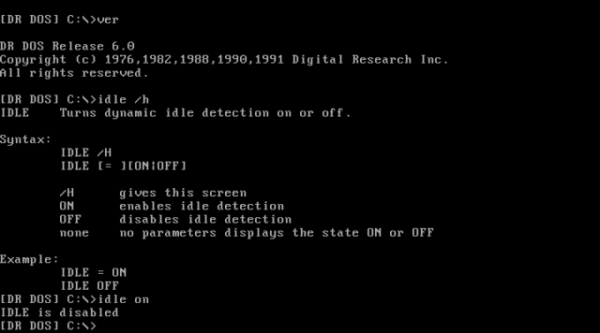

As it turned out, DR-DOS 6.0 does have functionality built in for putting the CPU in power saving mode when it’s idle. This feature is not complete, however: Digital Research required each computer manufacturer to develop an IDLE driver customized to their specific hardware platform in order to enable power management. Sadly, no manufacturer ever bothered to do so, leaving [Michal] with no option other than writing a driver himself. While there was some documentation available, it didn’t include any example code or sufficient detail to write a driver from scratch.

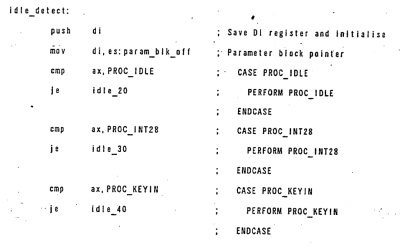

What it did include was a reference to U.S. Patent No. 5,355,501. Normally this sort of information is of interest only to those planning to sell a competing system, but this specific patent happens to include dozens of pages of well-documented but poorly-scanned x86 assembly code, including source code for a basic

What it did include was a reference to U.S. Patent No. 5,355,501. Normally this sort of information is of interest only to those planning to sell a competing system, but this specific patent happens to include dozens of pages of well-documented but poorly-scanned x86 assembly code, including source code for a basic IDLE86.SYS driver. As [Michal] wasn’t looking forward to chasing bugs caused by OCR errors, he simply copied the source code by hand, then ran it through an assembler. The end result was a working IDLE driver, which is now available for download from his website.

[Michal]’s blog post also includes lots of details on early power saving implementations, including all the DOS interrupt calls involved in the process. Patents might seem boring in contrast, but they sometimes contain surprising amounts of usable information. You might find enough details to reverse-engineer a wireless protocol, or even to help track down an obscure instrument’s original designer.