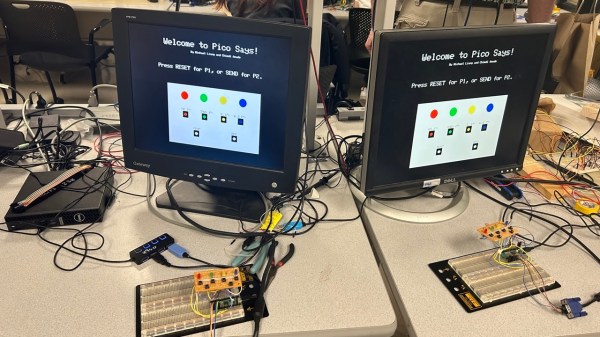

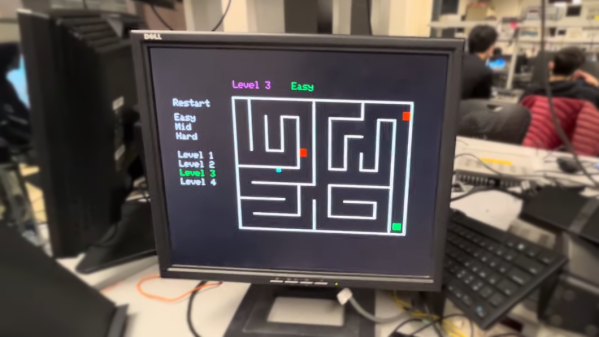

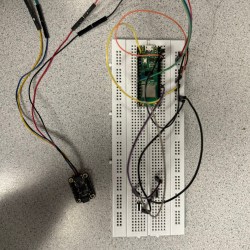

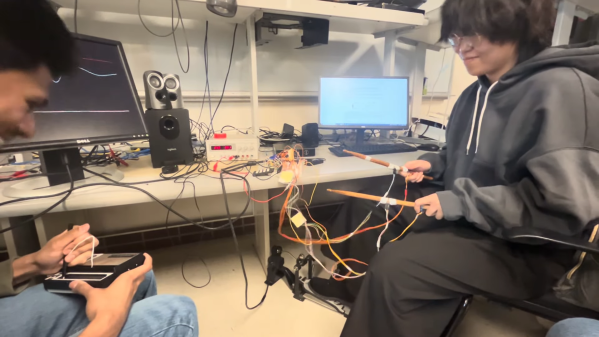

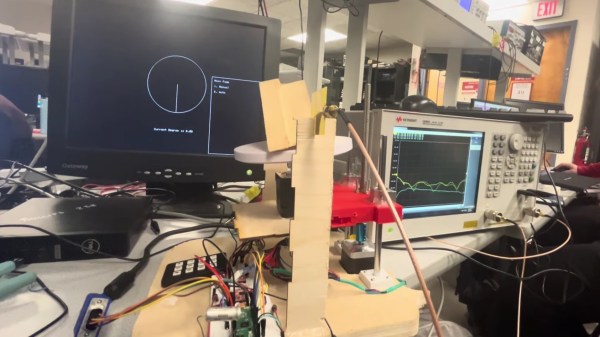

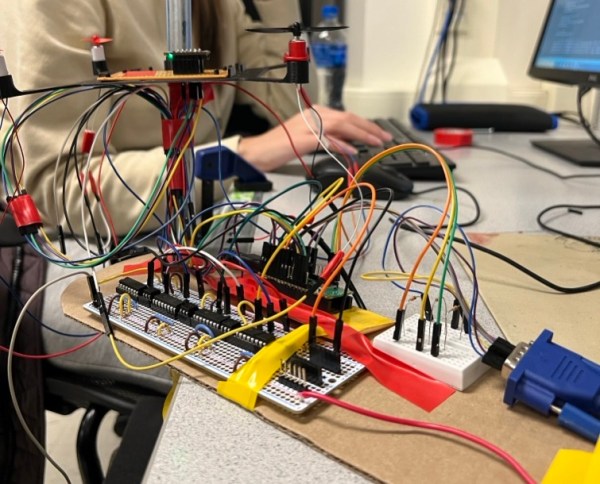

The team of [Michael] and [Chimdi] from Cornell’s Designing with Microcontrollers (ECE 4760) Fall 2023 session designed a version of Simon Says on an RP2040 which they call Pico Says. It uses UDP packets over WiFi to communicate between the players, and supports VGA graphics for output. Each player’s hardware consists of a Pico W module plus a control panel containing the four LEDs and buttons ( red, green, yellow, and blue ) plus send and reset buttons.

For purposes of this lab, the modules were build on a solderless breadboard and used perfboard for the control panels. They weren’t entirely happy with their choice of UDP because they experienced frequent datagram dropouts in the noisy environment of the microcontroller lab. They also planned to implement sound effects, but ran out of time after spending too much time on the WiFi implementation, and had to drop that feature. In the end, however, they wrapped up their project and demonstrated a working game. We can only speculate whether this bonus lesson in resource management was intended by [Dr. Hunter Adams] or not.

Two ECE 4760 course references are highlighted in the write-up that helped them jump-start the project: the UDP and VGA examples for the Pico. These are good links to put in your RP2020 toolbox for future projects, in addition to the ECE 4760 course home page itself. We’ve covered several of these projects recently, as well as the curriculum switch from the Microchip PIC32MX-based Microstick II to the RP2040 last Spring.