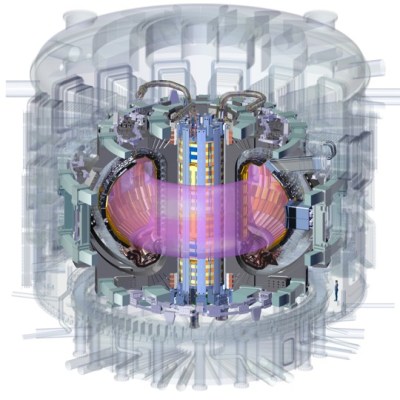

What’s sixty feet (18.29 meters for the rest of the world) across and superconducting? The International Thermonuclear Experimental Reactor (ITER), and probably not much else.

The last parts of the central solenoid assembly have finally made their way to France from the United States, marking both a milestone in the slow development of the world’s largest tokamak and a reminder that despite the current international turmoil, we really can work together, even if we can’t agree on the units to do it in.

The central solenoid, 4.13 m across (that’s 13′ 7″ for burger enthusiasts), sits in the hole of the “doughnut” of the toroidal reactor. It is made up of six modules, each weighing 110 t (the weight of 44 Ford F-150 pickup trucks), stacked to a total height of 59 ft (that’s 18 m, if you prefer). Four of the six modules have been installed on-site, and the other two will be in place by the end of this year.
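For anyone who wants to check the unit juggling, here is a minimal Python sketch. The helper names are made up for illustration, and the combined module mass is simply six times the 110 t figure quoted above, not an official number for the finished assembly.

```python
# Sanity-check the unit conversions quoted in the article.
# Helper names are illustrative; only the input figures come from the text.

METRES_PER_INCH = 0.0254   # exact, by definition
METRES_PER_FOOT = 0.3048   # exact, by definition


def metres_to_feet_inches(metres):
    """Convert metres to (feet, inches), rounded to the nearest inch."""
    total_inches = round(metres / METRES_PER_INCH)
    return divmod(total_inches, 12)


def feet_to_metres(feet):
    """Convert feet to metres."""
    return feet * METRES_PER_FOOT


MODULES = 6
MODULE_MASS_T = 110  # tonnes per module, as quoted

feet, inches = metres_to_feet_inches(4.13)
print(f"Diameter: {feet} ft {inches} in")
print(f"Stack height: {feet_to_metres(59):.1f} m")
print(f"Combined module mass: {MODULES * MODULE_MASS_T} t")
```

The mass here covers the six modules only, so the full assembly with its support structure will weigh more.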

Each module was produced by US ITER, using superconducting material produced by ITER Japan, before being shipped for installation at the main ITER site in France, all to build a reactor based on a design from the Soviet Union. It doesn’t get much more international than this!

This magnet is, well, central to the functioning of a tokamak. Indeed, the presence of a central solenoid is one of the defining features of this type of reactor, compared to other toroidal reactors (like the earlier stellarator or spheromak). The central solenoid provides a strong magnetic field (in ITER, 13.1 T) that is key to confining and stabilizing the plasma in a tokamak, and to inducing the 15 MA current that keeps the plasma going.

When it is eventually finished (initial operations are now scheduled for 2035), ITER aims to produce 500 MW of thermal power from 50 MW of input heating power via a deuterium-tritium fusion reaction. You can follow all news about the project here.
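Those two power figures are usually boiled down to a single number, the fusion gain Q, which is the fusion power out divided by the heating power put into the plasma. A quick sketch of the arithmetic, with a hypothetical function name of our own:

```python
# Fusion gain Q = fusion power out / heating power into the plasma.
# The function name is illustrative; the 500 MW and 50 MW figures
# are the targets quoted in the article.

def fusion_gain(fusion_power_mw, heating_power_mw):
    """Return the fusion gain Q for the given powers in megawatts."""
    if heating_power_mw <= 0:
        raise ValueError("heating power must be positive")
    return fusion_power_mw / heating_power_mw


q = fusion_gain(500, 50)
print(f"Target fusion gain: Q = {q:g}")
```

Keep in mind that Q only counts the heating power delivered to the plasma, not the electricity needed to run the whole facility.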

While a tokamak isn’t likely something you can hack together in your back yard, there’s always the Farnsworth Fusor, which you can even build to fit on your desk.