Once upon a time, we would run home from the bus stop to watch Gargoyles and Brady Bunch reruns on the family TV, a late-1970s console Magnavox number that sat on the floor and was about 50% more cabinet than CRT. The old TV, a streamlined white Zenith at least ten years older, had been relegated to the man cave in the basement. It looked so mod compared to the “new” TV, but that wasn’t the aesthetic our folks were after. They wanted their electronics to double as furniture.

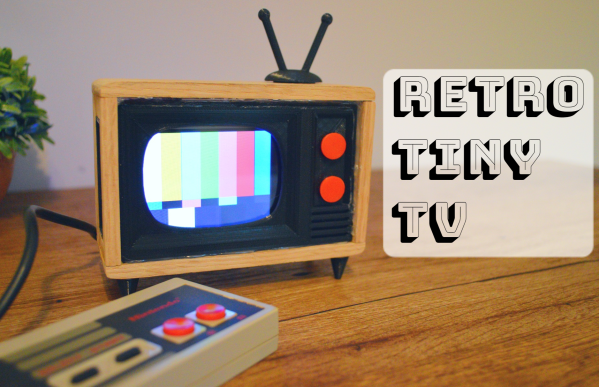

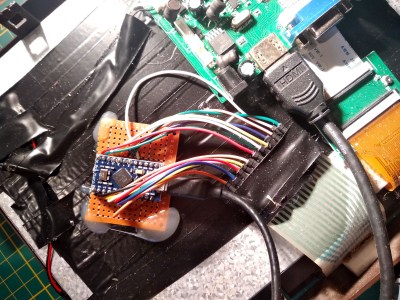

This little TV is a happy medium between the two styles, and for us, it’s all about those feet. But instead of cartoons, it switches between showing the current weather and the top news headlines. Inside that classy oak cabinet are an LCD, an ESP32, and an SD card module. The TV pulls conditions from OpenWeatherMap and loads the corresponding weather image from the SD card based on time of day: light images for day, and dark images for night.
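For the curious, here is a rough sketch of how that day-or-night image lookup might work. It is not the builder's code. It assumes the report has the shape of an OpenWeatherMap current-weather response (`dt`, `sys.sunrise`, `sys.sunset`, and `weather[0].main`), and the function name `pick_weather_image`, the `/sd/weather` folder layout, and the `.bmp` file names are all made up for illustration.

```python
def pick_weather_image(report, root="/sd/weather"):
    """Return the image path for an OpenWeatherMap-style weather report.

    The folder layout (root/day/..., root/night/...) is hypothetical.
    """
    # "main" is the coarse condition group, e.g. "Clear", "Clouds", "Rain".
    condition = report["weather"][0]["main"].lower()

    # dt, sunrise, and sunset are all Unix timestamps in UTC,
    # so they can be compared directly.
    sun = report["sys"]
    is_day = sun["sunrise"] <= report["dt"] < sun["sunset"]

    folder = "day" if is_day else "night"
    return f"{root}/{folder}/{condition}.bmp"


# A trimmed-down sample report with invented timestamps.
sample = {
    "dt": 1700000000,
    "weather": [{"main": "Clouds", "icon": "04d"}],
    "sys": {"sunrise": 1699990000, "sunset": 1700020000},
}
print(pick_weather_image(sample))  # /sd/weather/day/clouds.bmp
```

Comparing the report time against sunrise and sunset keeps the light and dark artwork in step with the actual daylight at the configured location, rather than with a fixed clock hour.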

We love that it shows the SMPTE color bars, aka the standard American TV test pattern, as it switches between weather and news. After showing the top headlines, it automatically switches back to the weather channel. Be sure to check out the short demo video after the break.
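That channel-flipping behavior boils down to a small repeating schedule. The sketch below is one way to model it, not the project's firmware. The dwell times are invented, `screen_at` is a hypothetical helper, and we are assuming the color bars appear on the way back to the weather as well as on the way to the news.

```python
# (screen name, seconds on screen); the durations are made-up placeholders.
SCREENS = [
    ("weather", 30),
    ("color_bars", 2),
    ("news", 30),
    ("color_bars", 2),
]


def screen_at(elapsed_seconds):
    """Return which screen should be showing after elapsed_seconds of uptime."""
    # Wrap the elapsed time into a single pass through the schedule.
    t = elapsed_seconds % sum(dwell for _, dwell in SCREENS)
    for name, dwell in SCREENS:
        if t < dwell:
            return name
        t -= dwell


for second in (0, 30, 32, 62, 64):
    print(second, screen_at(second))
```

Driving the display from elapsed time rather than a chain of delays means the main loop stays free to refresh the weather and headlines in the background.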

Do you like your tiny televisions in strange places? Here’s one you can use to trim your tree this year.