A recent writeup by Tom Nardi about using the 6502-based NES to track satellites brought back memories of my senior project at Georgia Tech back in the early 80s. At our club station W4AQL, I had become interested in Amateur Radio satellites. It was quite a thrill to hear your signal returning from space, adjusting for the Doppler shift as the satellite speeds overhead, keeping the antennas pointed, all while carrying on a brief conversation with other Earth stations or copying spacecraft telemetry, usually in Morse code.
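To give a feel for the Doppler correction mentioned above, here is a minimal first-order sketch. The 145.9 MHz carrier and the ±5 km/s radial velocities are hypothetical values chosen for illustration, not figures from the original project; real tracking software derives the radial velocity from the satellite's orbital elements.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(carrier_hz: float, radial_velocity_m_s: float) -> float:
    """First-order Doppler shift in Hz.

    radial_velocity_m_s > 0 means the satellite is approaching the station,
    so the received frequency is higher than the transmitted one.
    """
    return carrier_hz * radial_velocity_m_s / SPEED_OF_LIGHT_M_S


def received_frequency_hz(carrier_hz: float, radial_velocity_m_s: float) -> float:
    """Frequency heard on the ground for a given transmit frequency."""
    return carrier_hz + doppler_shift_hz(carrier_hz, radial_velocity_m_s)


if __name__ == "__main__":
    carrier = 145.9e6  # hypothetical 2 m downlink frequency
    # Assumed radial velocities: approaching, closest approach, receding
    for v in (5_000.0, 0.0, -5_000.0):
        f = received_frequency_hz(carrier, v)
        print(f"v_r = {v:+8.0f} m/s -> receive at {f / 1e6:.6f} MHz "
              f"({doppler_shift_hz(carrier, v):+.0f} Hz)")
```

The shift swings from positive to negative as the satellite passes its point of closest approach, which is why the receiver has to be retuned continuously during a pass.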


Bringing High Temperature 3D Printing To The Masses

Despite the impressive variety of thermoplastics that can be printed on consumer-level desktop 3D printers, the most commonly used filament is polylactic acid (PLA). That’s because it’s not only the cheapest material available, but also the easiest to work with. PLA can be extruded at temperatures as low as 180 °C, and it’s possible to get good results even without a heated bed. The downside is that objects printed in PLA tend to be somewhat brittle and have a low heat tolerance. It’s a fine plastic for prototyping and light duty projects, but it won’t take long for many users to outgrow its capabilities.

The next step up is usually polyethylene terephthalate glycol (PETG). This material isn’t much more difficult to work with than PLA, but is more durable, can handle higher temperatures, and in general is better suited for mechanical parts. If you need greater durability or higher heat tolerance than PETG offers, you could move on to something like acrylonitrile butadiene styrene (ABS), polycarbonate (PC), or nylon. But this is where things start to get tricky. Not only can the extrusion temperatures of these materials exceed 250 °C, but an enclosed print chamber is generally recommended for best results. That puts them at the upper end of what the hobbyist community is generally capable of working with.

But high-end industrial 3D printers can use even stronger plastics such as polyetherimide (PEI) or members of the polyaryletherketone family (PAEK, PEEK, PEKK). Parts made from these materials are especially desirable for aerospace applications, as they can replace metal components while being substantially lighter.

These plastics must be extruded at temperatures approaching 400 °C, and a sealed build chamber kept above 100 °C for the duration of the print is an absolute necessity. A commercial printer with these capabilities costs tens of thousands of dollars even on the low end, with some models priced well into the six-figure range.

Of course there was a time, not so long ago, when the same could have been said of 3D printers in general. Machines that were once the sole domain of exceptionally well-funded R&D labs now sit on the workbenches of hackers and makers all over the world. While it’s hard to say if we’ll see the same race to the bottom for high temperature 3D printers, the first steps towards democratizing the technology are already being made.

Continue reading “Bringing High Temperature 3D Printing To The Masses”

Taking A Crack At The Traveling Salesman Problem

The human mind is a path-planning wizard. Think back to pre-lockdown days when we all ran multiple errands back to back across town. There was always a mental dance in the back of your head to make sense of how you planned the day. It might go something like “first to the bank, then to drop off the dry-cleaning. Since the post office is on the way to the grocery store, I’ll pop by and send that box that’s been sitting in the trunk for a week.”

This sort of mental gymnastics doesn’t come naturally to machines. In fact, it’s a famous problem in computer science known as the traveling salesman problem. While it is formally classified as an NP-hard problem in combinatorial optimization, a more succinct and understandable definition would be: given a list of destinations, what’s the shortest round-trip route that visits every location exactly once?
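A minimal brute-force sketch makes the difficulty concrete. It assumes destinations are points on a flat plane with straight-line distances (a simplification; real errands follow roads) and simply tries every round trip starting from the first point. Fixing the start still leaves (n − 1)! orderings, so 20 stops already means 19! ≈ 1.2 × 10¹⁷ candidate routes.

```python
import itertools
import math

Point = tuple[float, float]


def tour_length(points: list[Point], order: tuple[int, ...]) -> float:
    """Length of a closed tour that visits points in `order` and returns home."""
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def brute_force_tsp(points: list[Point]) -> tuple[float, tuple[int, ...]]:
    """Exhaustively check every round trip that starts and ends at point 0."""
    n = len(points)
    if n < 2:
        return 0.0, tuple(range(n))
    best_len, best_order = math.inf, tuple(range(n))
    # (n - 1)! permutations of the remaining stops: fine for ~10 stops, hopeless for 50.
    for rest in itertools.permutations(range(1, n)):
        order = (0, *rest)
        length = tour_length(points, order)
        if length < best_len:
            best_len, best_order = length, order
    return best_len, best_order


if __name__ == "__main__":
    stops = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    print(brute_force_tsp(stops))
    for n in (5, 10, 15, 20):
        print(f"{n} stops -> {math.factorial(n - 1):,} orderings to check")
```

For the four corners of a unit square, the search returns the perimeter route of length 4; crossing the diagonals instead costs 2 + 2√2.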

This summer brought news that the 44-year-old record for approximating the problem has been broken. Let’s take a look at why this is a hard problem, and how the research team from the University of Washington took a different approach to achieve the improvement.
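Because exact answers are out of reach for large inputs, researchers measure algorithms by how close to optimal they are guaranteed to get. As a hedged illustration of what such a guarantee looks like, here is the classic "double-tree" heuristic for metric instances. To be clear, this is a much simpler textbook algorithm with a factor-of-2 bound; it is neither the University of Washington method nor the 1.5-factor algorithm whose record was broken.

```python
import math

Point = tuple[float, float]


def mst_parents(points: list[Point]) -> list[int]:
    """Prim's algorithm on the complete Euclidean graph; returns each node's parent (root = -1)."""
    n = len(points)
    in_tree = [False] * n
    best = [math.inf] * n
    parent = [-1] * n
    best[0] = 0.0
    for _ in range(n):
        # Cheapest node not yet connected to the tree
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v], parent[v] = d, u
    return parent


def double_tree_tour(points: list[Point]) -> list[int]:
    """Visit points in depth-first preorder of the MST, skipping repeat visits."""
    if not points:
        return []
    parent = mst_parents(points)
    children: dict[int, list[int]] = {i: [] for i in range(len(points))}
    for v, p in enumerate(parent):
        if p >= 0:
            children[p].append(v)
    order, stack = [], [0]
    while stack:
        u = stack.pop()
        order.append(u)
        stack.extend(reversed(children[u]))  # keep children in their original order
    return order


def closed_length(points: list[Point], order: list[int]) -> float:
    """Length of the round trip, including the hop back to the start."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(order, order[1:] + order[:1]))
```

The factor-of-2 bound follows in three steps: the minimum spanning tree is no longer than the optimal tour (delete one edge of that tour and you have a spanning tree), walking around the tree traverses each edge twice, and skipping already-visited points never adds length when distances obey the triangle inequality.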

Continue reading “Taking A Crack At The Traveling Salesman Problem”

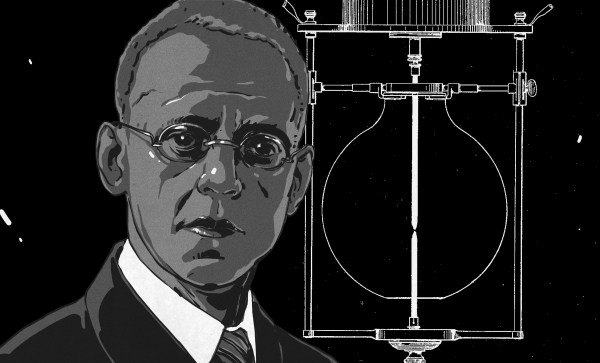

Lewis Latimer Drafted The Future Of Electric Light

These days, we have LED light bulbs that will last a decade. But it wasn’t so long ago that incandescent lamps were all we had, and they burned out after several months. Thomas Edison’s early light bulbs used bamboo filaments that burned out very quickly. An inventor and draftsman named Lewis Latimer improved on Edison’s filaments by encasing them in cardboard while they were carbonized, earning himself a patent for the process.

Lewis had a hard early life, but he succeeded in spite of the odds and his lack of formal education. He was a respected draftsman who earned several patents and worked directly with Alexander Graham Bell and Thomas Edison. Although Lewis didn’t invent the light bulb, he definitely made it better and longer-lasting.

Continue reading “Lewis Latimer Drafted The Future Of Electric Light”

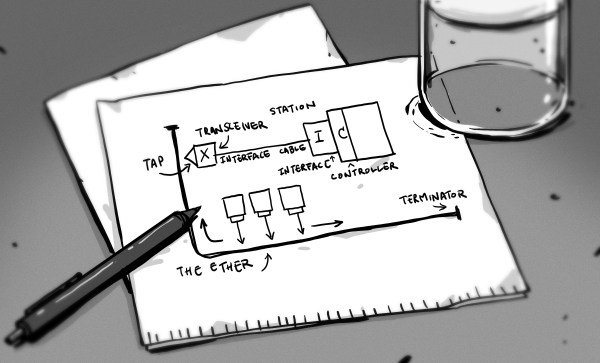

Ethernet At 40: From A Napkin Sketch To Multi-Gigabit Links

September 30th, 1980 is the day when Ethernet was first commercially introduced, which makes this year its fortieth anniversary. It was first described in a patent filed by Xerox in 1975, introduced to the market as a 10 Mb/s networking protocol in 1980, and standardized by the IEEE as IEEE 802.3 in 1983. Over the following thirty-seven years, the standard would see numerous updates and revisions.

The present Ethernet standard includes not just the speed grades from the original 10 Mb/s up to today’s maximum of 400 Gb/s, but also countless changes to the core protocol to enable these ever-higher data rates, not to mention new applications of Ethernet such as power delivery and backplane routing. Ethernet’s reliability and cost-effectiveness paved the way for the 1990 10BASE-T standard (802.3i-1990), which ran over twisted-pair cabling and gradually found its way onto desktop PCs.
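To put the span from 10 Mb/s to 400 Gb/s in perspective, a quick back-of-the-envelope sketch computes how long it takes to clock a 1500-byte payload onto the wire at each rate. The 1500-byte figure is an assumption for illustration, and the calculation deliberately ignores headers, preamble, frame check sequence, and inter-frame gap.

```python
PAYLOAD_BYTES = 1500  # assumed payload size; framing overhead is ignored

# The two speed grades named in the text
SPEEDS_BPS = {
    "10 Mb/s": 10e6,
    "400 Gb/s": 400e9,
}


def serialization_time_s(num_bytes: int, bits_per_second: float) -> float:
    """Time needed to clock num_bytes onto the wire at the given line rate."""
    return num_bytes * 8 / bits_per_second


if __name__ == "__main__":
    for name, rate in SPEEDS_BPS.items():
        t = serialization_time_s(PAYLOAD_BYTES, rate)
        print(f"{name:>9}: {t * 1e9:,.0f} ns")
```

The same 12,000 bits that occupied the original 10 Mb/s link for 1.2 ms pass through a 400 Gb/s link in 30 ns, a 40,000-fold difference.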

With Ethernet these days as ubiquitous as the luminiferous aether it was named after was once presumed to be, this seems like a good moment to look at what made Ethernet so different from other solutions, and what changes it had to undergo to keep up with the demands of an ever-more interconnected world.

Continue reading “Ethernet At 40: From A Napkin Sketch To Multi-Gigabit Links”

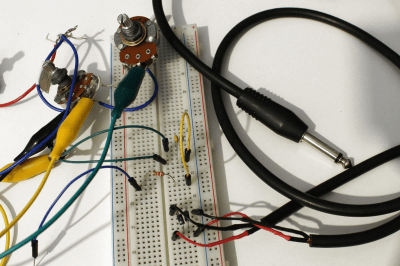

Axe Hacks: Spinning Knobs And Flipping Switches

From a guitar hacking point of view, the two major parts that are interesting to us are the pickups and the volume/tone control circuit that lets you adjust the sound while playing. Today, I’ll get into the latter part and take a close look at the components involved (potentiometers, switches, and a few other passive components), show how they function, what alternatives we have, and how we can repurpose them altogether.
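As a taste of how these passive parts behave, here is a minimal sketch of a volume pot wired as a voltage divider. The 250 kΩ track and the 1 MΩ load on the wiper (standing in for the amp input) are assumed typical values, not measurements; pickup impedance and cable capacitance are ignored, so treat the numbers as a rough model rather than what your guitar will do.

```python
import math


def volume_ratio(rotation: float, r_pot: float = 250e3, r_load: float = 1e6) -> float:
    """Vout/Vin of a linear-taper volume pot wired as a voltage divider.

    rotation: 0.0 = wiper at ground (silent), 1.0 = wiper at the pickup (full volume).
    r_pot: total track resistance (assumed 250 kOhm).
    r_load: resistance hanging off the wiper, e.g. the amp input (assumed 1 MOhm).
    """
    if not 0.0 <= rotation <= 1.0:
        raise ValueError("rotation must be between 0.0 and 1.0")
    r_lower = rotation * r_pot   # wiper to ground
    r_upper = r_pot - r_lower    # input lug to wiper
    if r_lower == 0.0:
        return 0.0
    r_shunt = r_lower * r_load / (r_lower + r_load)  # lower leg in parallel with the load
    return r_shunt / (r_upper + r_shunt)


def to_db(ratio: float) -> float:
    """Voltage ratio expressed in decibels."""
    return -math.inf if ratio == 0.0 else 20.0 * math.log10(ratio)


if __name__ == "__main__":
    for knob in (0.1, 0.25, 0.5, 0.75, 1.0):
        r = volume_ratio(knob)
        print(f"knob at {knob:4.0%}: ratio {r:.3f} ({to_db(r):+.1f} dB)")
```

In this model the top half of the rotation only changes the level by roughly 6.5 dB, while most of the audible change is crammed into the bottom of the travel, which is one reason audio (logarithmic) taper pots are commonly used for volume controls.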

So it’s time to heat up the soldering iron, get out the screwdriver, take off that pickguard or open up that back cover, and continue our quest for new electric guitar sounds. And if that sounds uncomfortable, skip the soldering iron and grab some alligator clips and a breadboard. It may not be the ideal environment, but it’ll work.

Continue reading “Axe Hacks: Spinning Knobs And Flipping Switches”

Getting Rid Of All The Space Junk In Earth’s Backyard

Space, as the name suggests, is mostly empty. However, since the first satellite launch in 1957, mankind has populated Earth orbit with all kinds of spacecraft. On the downside, space has also become increasingly cluttered with trash from defunct or broken-up rocket stages and satellites. Moving at speeds of nearly 30,000 km/h, even the tiniest object can pierce a hole through your spacecraft. Space junk therefore poses a real threat to both manned and unmanned spacecraft, which is why space agencies are stepping up their efforts to track, avoid, and remove it.
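Why can a tiny fragment do so much damage? A quick kinetic-energy estimate, E = ½mv², makes it concrete. This sketch takes the 30,000 km/h figure from the text as the impact speed (an assumption; actual collision speeds depend on the relative velocity of the two objects), and the fragment masses are hypothetical. The TNT comparison uses the conventional 4184 J per gram.

```python
TNT_J_PER_GRAM = 4184.0  # conventional TNT-equivalent definition


def kinetic_energy_j(mass_kg: float, speed_m_s: float) -> float:
    """Classical kinetic energy, E = 1/2 * m * v^2."""
    return 0.5 * mass_kg * speed_m_s ** 2


def kmh_to_m_s(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh * 1000.0 / 3600.0


if __name__ == "__main__":
    v = kmh_to_m_s(30_000)            # speed figure from the text
    for grams in (0.1, 1.0, 10.0):    # hypothetical fragment masses
        e = kinetic_energy_j(grams / 1000.0, v)
        print(f"{grams:5.1f} g at {v:,.0f} m/s: {e:,.0f} J "
              f"(~{e / TNT_J_PER_GRAM:.1f} g of TNT)")
```

At roughly 8.3 km/s, a single gram of debris carries about 35 kJ, several times the energy released by the same mass of TNT.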

Continue reading “Getting Rid Of All The Space Junk In Earth’s Backyard”