With low-cost modular electronic components so widely available, building your own little robot buddy is easier and more affordable than ever. But while the electronics might be dirt cheap thanks to economies of scale, modular robot chassis can be surprisingly expensive. If you’ve got a 3D printer, you can always make a chassis that way, but what if you’re looking for something a bit more artisanal?

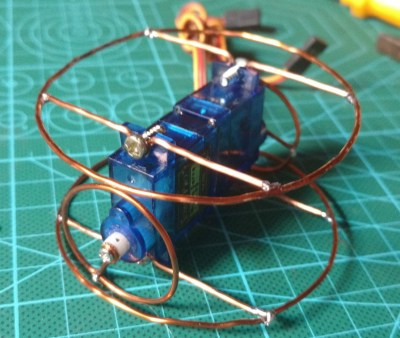

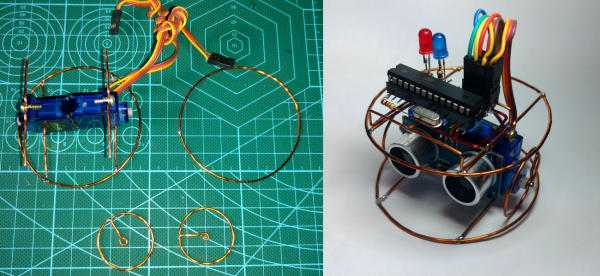

For his entry into the Circuit Sculpture Contest, [Robson Couto] has built a simple robot that ditches the traditional chassis for a frame made of bent and soldered copper wire. Not only does this look really cool in a steampunk kind of way, it’s also a very cheap way of knocking together a basic bot with just the parts you have on hand. Not exactly a heavy-duty chassis, to be sure, but certainly robust enough to rove around your workbench.

The dual servos constrained within the wire frame have been modified for continuous rotation, which, combined with the narrow track, should make for a fairly maneuverable little bot. [Robson] equipped his servos with copper wheels built in the same style as the frame, which likely isn’t great for traction but really does help sell the overall look. If you aren’t planning on entering your creation into a contest that focuses on unique construction, we’d suggest some more traditional wheels for best results.
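Why does a narrow track help? For an idealized two-wheeled differential-drive bot, the turn rate is the difference in wheel speeds divided by the distance between the wheels, so halving the track doubles how fast it spins for the same wheel speeds. The quick sketch below just illustrates that relationship; it ignores wheel slip (which those copper wheels will certainly have), and the wheel speeds and track widths in the usage example are made-up numbers, not measurements from [Robson]’s build.

```python
def turn_rate(v_left: float, v_right: float, track_width: float) -> float:
    """Yaw rate (rad/s) of an idealized differential-drive robot.

    v_left, v_right: ground speed of each wheel in m/s.
    track_width: distance between the two wheels in m.
    """
    return (v_right - v_left) / track_width


def forward_speed(v_left: float, v_right: float) -> float:
    """Speed (m/s) of the point midway between the wheels."""
    return (v_left + v_right) / 2


# Hypothetical example: both servos spinning in opposite directions at 5 cm/s,
# so the bot pivots in place. Compare a 6 cm track against a 12 cm one.
narrow = turn_rate(-0.05, 0.05, 0.06)
wide = turn_rate(-0.05, 0.05, 0.12)
print(f"narrow track: {narrow:.2f} rad/s, wide track: {wide:.2f} rad/s")
```

With the wheels spinning in opposite directions the forward speed is zero, so the bot pivots on the spot, and the narrower frame gets around twice as quickly.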

The brains of this bot are provided by an ATmega8 with an external 16 MHz crystal tacked onto its pins. There’s also an ultrasonic sensor board mounted to the servos, which will eventually give this little fellow the ability to avoid obstacles. Of course, it doesn’t take a robotics expert to realize there’s currently no onboard power supply in the design. We’d love to say that he’s planning on using the copper loops of the frame to power the thing via induction, but we imagine [Robson] is still fiddling around with the best way to get juice into his wireframe creation before the contest deadline.
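For anyone wondering how that obstacle avoidance might work, here’s a rough sketch of the usual approach. We’re assuming a common HC-SR04-style module that reports the round-trip time of an ultrasonic ping; the write-up doesn’t say which board is used, and the 15 cm threshold and function names below are purely hypothetical. The distance is half the round trip multiplied by the speed of sound, and the bot turns away whenever something gets too close.

```python
# Assumption: speed of sound in dry air at roughly 20 °C.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time (microseconds) to a one-way distance (cm)."""
    round_trip_m = echo_us * 1e-6 * SPEED_OF_SOUND_M_PER_S
    # The ping travels to the obstacle and back, so halve it.
    return round_trip_m / 2 * 100


def choose_action(distance_cm: float, threshold_cm: float = 15.0) -> str:
    """Hypothetical avoidance rule: turn if an obstacle is closer than the threshold."""
    return "turn" if distance_cm < threshold_cm else "forward"


for echo in (500, 1000, 3000):
    d = echo_to_distance_cm(echo)
    print(f"echo {echo} us -> {d:.1f} cm -> {choose_action(d)}")
```

On the ATmega8 itself this would of course be written in C, with the echo pulse timed by a hardware timer, but the arithmetic is the same.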

Speaking of which, there’s still plenty of time to get your own Circuit Sculpture creation submitted. If it’s a functional device that isn’t scared to show off the goods, we’re interested in seeing it. Just document the project on Hackaday.io and submit it to the contest before the January 8th, 2019 deadline.
