[Travis] tells us about a neat actuator concept that’s as old as dirt. It’s capable of lifting 7 kg when powered by a pager motor, and the only real component is a piece of string.

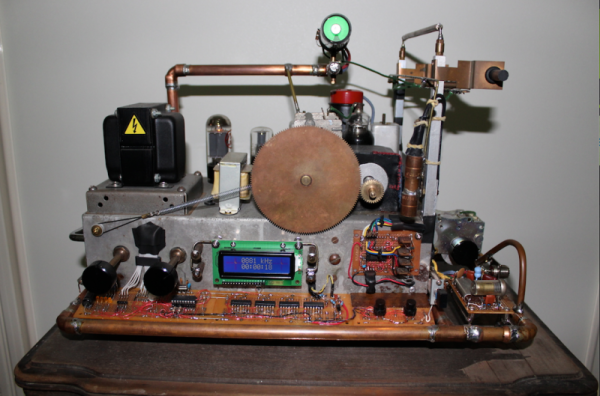

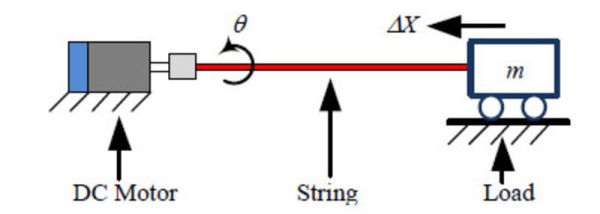

The concept behind the twisted string actuator, as it’s known to academia, is as simple as putting a motor on one end of a piece of string, tying the other end off to a load, and putting a few twists in the string. It’s an amazingly simple concept that has been known and used for thousands of years: ballistas and bow-string fire starters use the same theory.

Although the concept of a twisted string actuator is intuitively known by anyone over the age of six, there aren’t many studies, and even fewer projects, that use this extremely high gear ratio, low power, and very cheap form of linear motion. A study from 2012 (PDF) put some empirical data behind this simple device. The takeaway from this study is that tension on the string doesn’t matter, and that more strands or larger-diameter strands mean the actuator shrinks in fewer turns. Fewer strands and smaller-diameter strands take more turns to shrink to the same length.
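To get a feel for why thicker bundles shrink faster, here is a rough sketch of the simple helix model that is often used for twisted strings. It is not taken from the study itself. It assumes the strands wrap around a cylinder of fixed effective radius, so the untwisted length is the hypotenuse of a triangle whose other sides are the contracted length and the wrapped arc. The function names and the example dimensions are made up for illustration, and real strings stretch and compress, so treat the numbers as ballpark only.

```python
import math


def contracted_length(rest_length_m, radius_m, turns):
    """Actuator length after `turns` motor revolutions (ideal helix model).

    Assumption: the string behaves as a helix of constant effective radius,
    so rest_length^2 = contracted_length^2 + (twist_angle * radius)^2.
    """
    wrapped_arc = 2 * math.pi * turns * radius_m
    if wrapped_arc >= rest_length_m:
        raise ValueError("too many turns for this simple model")
    return math.sqrt(rest_length_m**2 - wrapped_arc**2)


def turns_for_contraction(rest_length_m, radius_m, contraction_m):
    """Motor revolutions needed to shorten the actuator by `contraction_m`."""
    if not 0 <= contraction_m < rest_length_m:
        raise ValueError("contraction must be in [0, rest_length)")
    target = rest_length_m - contraction_m
    twist_angle = math.sqrt(rest_length_m**2 - target**2) / radius_m
    return twist_angle / (2 * math.pi)


if __name__ == "__main__":
    # Hypothetical 20 cm string pulled 2 cm shorter, two effective radii.
    for radius in (0.0005, 0.001):
        n = turns_for_contraction(0.20, radius, 0.02)
        print(f"effective radius {radius * 1000:.1f} mm: {n:.1f} turns")
```

In this model the number of turns scales inversely with the effective radius, which lines up with the observation that more or thicker strands need fewer turns. How the effective radius depends on strand count and diameter is left as an input here, since that relationship is not spelled out above.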

As for useful applications of these twisted string actuators, there are a few projects that have used these systems in anthropomorphic hands and elbows. No surprise there, really; strings don’t take up much space, and they work just like muscles and tendons do in the human body.

Thanks [ar0cketman] for the link.

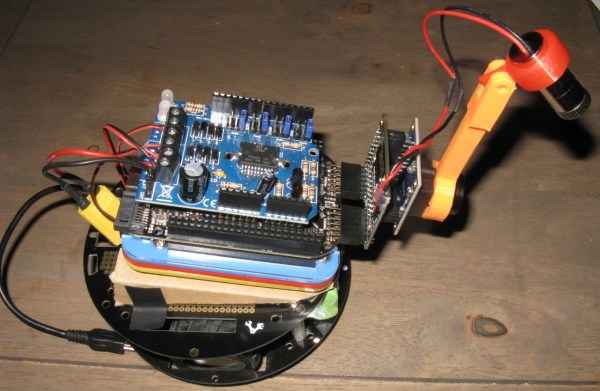

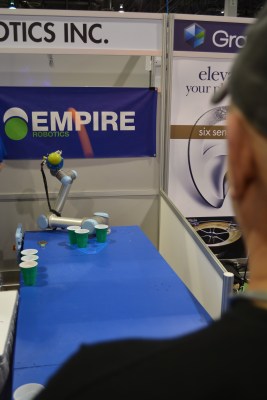

Wandering the aisles of Eureka Park, the startup area of the Consumer Electronics Show, I spotted a mob of people and sauntered over to see what the excitement was all about. Peeking over this gentleman’s shoulder I realized he was getting spanked at Beer Pong… by a robot!
