HVAC – heating, ventilation, and air conditioning – can account for a large share of a building’s energy use, whether it’s residential or industrial. Often it accounts for the majority of consumption, especially in places with extreme climates or in facilities like data centers where cooling is a major design consideration. When problems arise with these complex systems, they can go undiagnosed for some time and be difficult to fix, leading to even more energy losses until repairs are complete. With the growing availability of platforms that can run capable artificial intelligences, [kutluhan_aktar] is working towards a system that can automatically diagnose potential issues and help humans get a handle on repairs faster.

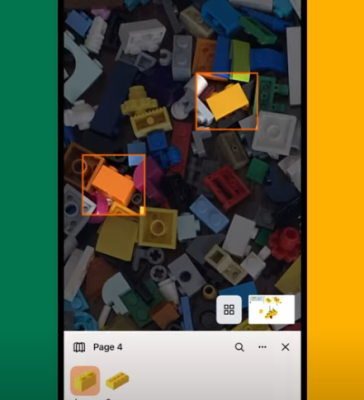

The prototype system is designed for hydronic (water-based) systems and uses two separate artificial intelligences: one to analyze thermal imagery of the system and look for problems like leaks, hot spots, or blockages, and the other to listen for anomalous sounds, especially those relating to the behavior of cooling fans. For the first, a CNC-like machine was built to move a thermal camera around a custom-built model HVAC system and report its images back to a central system where they can be analyzed for anomalies. The second system, which analyzes audio, runs its artificial intelligence on a XIAO ESP32C6 and listens to the cooling fans running in the model HVAC system.
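To make the idea of flagging a hot spot in a thermal frame concrete, here is a minimal sketch. It is not the project's method: the project uses a trained model, while this swaps in a plain median-plus-threshold check. The function name `find_hot_spots`, the `delta_c` parameter, and the sample temperatures are all made up for illustration.

```python
from statistics import median


def find_hot_spots(frame, delta_c=10.0):
    """Return (row, col) positions that are more than delta_c degrees
    above the median temperature of the frame.

    `frame` is a list of rows of temperatures in degrees Celsius.
    This is a simple statistical check, not a neural network.
    """
    # Use the median as the baseline so one hot pixel does not skew it.
    baseline = median(t for row in frame for t in row)
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, t in enumerate(row)
        if t - baseline > delta_c
    ]


# Hypothetical 3x3 thermal frame with one overheated pixel in the middle.
frame = [
    [40.0, 41.0, 40.5],
    [40.2, 58.0, 41.1],
    [39.8, 40.4, 40.9],
]
print(find_hot_spots(frame))  # [(1, 1)]
```

A fixed threshold like this would likely miss subtler faults such as slow leaks or partial blockages, which is presumably where a model trained on labeled examples earns its keep.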

One problem that had to be tackled before any of this could be completed was actually building an open-source dataset to train the AI on. That’s part of the reason for the HVAC model in this project: it makes it possible to create problems deliberately, so the computer can be trained to detect them before the system is rolled out to a larger installation. The project’s code and training models can be found on its GitHub page. It seems to be a fairly robust solution to this problem, and we’ll be looking forward to future versions running on larger systems. Not everyone has a hydronic HVAC system, though. As heat pumps become more and more popular and capable, you’ll need systems to control those as well.