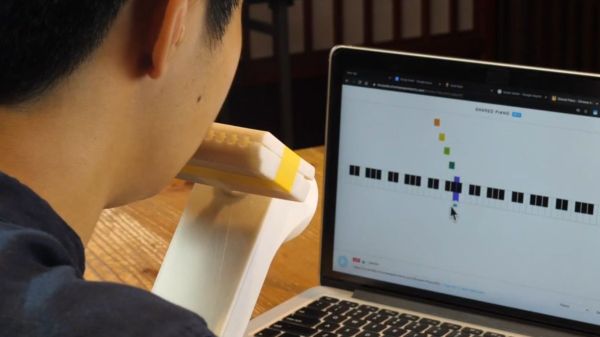

Gesture recognition usually involves some sort of optical system watching your hands, but researchers at UC Berkeley took a different approach. Instead, they monitor the electrical signals in the forearm that control the muscles and use a machine learning model to recognize hand gestures from them.

The sensor system is a flexible PET armband with 64 electrodes screen-printed onto it in silver conductive ink, attached to a standalone AI processing module. Since everyone’s arm is slightly different, the system has to be trained for each user. The upside is that the individual electrical signals don’t have to be isolated, because the model learns to recognize the overall patterns.
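To make the per-user training idea concrete, here is a minimal sketch of one simple way it could work. The article doesn’t say which features or classifier the Berkeley team used, so everything here is an assumption: the hypothetical `rms_features` reduces a window of multi-channel samples (64 channels on this armband) to one RMS value per channel, `train_templates` averages those vectors into one template per gesture from a single user’s labeled recordings, and `classify` picks the nearest template. This is a plain nearest-centroid classifier, not the paper’s method.

```python
import math


def rms_features(window):
    """window: list of samples, each a list of per-channel voltages
    (64 channels on the armband). Returns one RMS value per channel."""
    n = len(window)
    return [math.sqrt(sum(sample[ch] ** 2 for sample in window) / n)
            for ch in range(len(window[0]))]


def train_templates(labeled_windows):
    """labeled_windows: list of (gesture_label, window) pairs recorded from ONE user.
    Returns {label: mean feature vector}, i.e. one template per gesture."""
    sums, counts = {}, {}
    for label, window in labeled_windows:
        feats = rms_features(window)
        if label not in sums:
            sums[label] = [0.0] * len(feats)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], feats)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(templates, window):
    """Return the gesture whose template is closest (squared Euclidean distance)."""
    feats = rms_features(window)

    def dist(template):
        return sum((a - b) ** 2 for a, b in zip(feats, template))

    return min(templates, key=lambda label: dist(templates[label]))
```

With a template approach like this, nothing transfers between users: a new wearer records a few labeled repetitions of each gesture and gets their own set of templates, which is why per-user training doesn’t require picking out specific muscle signals by hand.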

The challenge is that these patterns don’t remain constant over time; they change with factors such as sweat, arm position, and even ordinary biological changes. To deal with this, the model can update itself on the device as the signals drift. Another aspect of this research that we appreciate is that all the inference, training, and updating happens locally on the AI chip in the armband. There is no need to send data to an external device or the “cloud” for processing, updating, or third-party data mining. Unfortunately, the research paper with all the details is behind a paywall.
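The paywalled paper holds the real details, so the following is only a hedged illustration of what on-device adaptation can look like in general. It assumes one stored template (feature vector) per gesture. The hypothetical `adapt` function classifies a new feature vector by its nearest template and, only when that decision clearly beats the runner-up, nudges the winning template toward the new sample with an exponential moving average, so slow drift from sweat or changing contact is tracked without any labeled data. The `rate` and `margin` values are illustrative guesses, not figures from the research.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def adapt(templates, feats, rate=0.1, margin=0.5):
    """Classify feats and, if the decision is clear, move the winning template toward it.

    templates: dict mapping gesture label -> feature vector (updated in place).
    rate:      hypothetical EMA step size; larger values track drift faster.
    margin:    hypothetical minimum gap in squared distance between the best and
               second-best template before an update is allowed.
    Returns the predicted label.
    """
    ranked = sorted(templates, key=lambda lbl: squared_distance(feats, templates[lbl]))
    best = ranked[0]
    best_d = squared_distance(feats, templates[best])
    # Skip the update on ambiguous samples, so a likely misclassification
    # does not drag the wrong template toward the new data.
    clear = len(ranked) == 1 or squared_distance(feats, templates[ranked[1]]) - best_d > margin
    if clear:
        templates[best] = [(1 - rate) * t + rate * f
                           for t, f in zip(templates[best], feats)]
    return best
```

Gating the update on a clear margin is one common way to keep self-training stable: confident predictions refine the templates, while borderline ones leave them alone.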