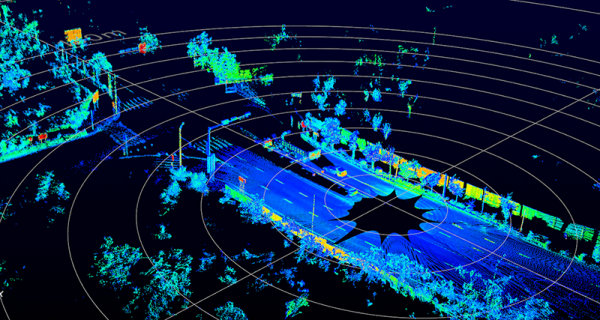

If you’re unfamiliar with LIDAR, you might have noticed it sounds a bit like radar. That’s no accident – LIDAR is a backronym standing for “light detection and ranging”, the word having initially been created as a combination of “light” and “radar”. The average person is most likely to have come into contact with LIDAR at the business end of a police speed trap, but it doesn’t have to be that way. Unruly is the open source LIDAR project you’ve been waiting for all along.

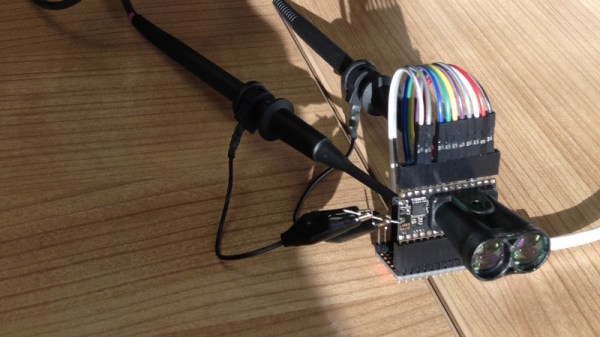

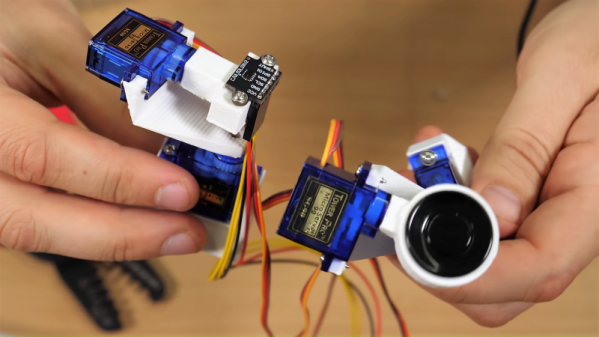

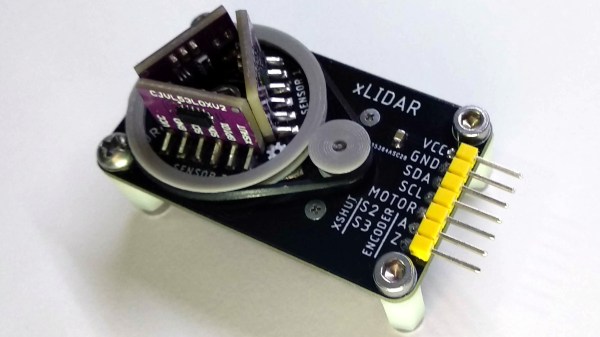

Unlike a lot of starter projects, LIDAR isn’t something you get into with a couple of salvaged LEDs and an Arduino Uno. We’re talking about measuring the time it takes light to travel relatively short distances, so plenty of specialised components are required. There’s a pulsed laser diode, and a hypersensitive avalanche photodiode that operates at up to 130 V. These are combined with precision lenses and filters to get the longest range possible. Light travels about 300,000 km in a second, or roughly 30 cm every nanosecond, so a microcontroller alone simply isn’t fast enough to deliver any usable resolution. Instead, a specialised time-to-digital converter (TDC) times how long the light pulse takes to return from a distant object. Unruly’s current usable resolution is somewhere in the ballpark of 10 mm, an impressive feat.
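To put some numbers on why the timing is so demanding, here is a rough back-of-the-envelope sketch in Python. It is not taken from the Unruly codebase: the function names are made up for illustration, and the 16 MHz figure is just an assumed clock rate for a typical hobbyist microcontroller such as the Arduino Uno. The only physics involved is that the pulse travels out and back, so distance is half the round-trip time multiplied by the speed of light.

```python
# Back-of-the-envelope time-of-flight arithmetic (illustrative only,
# not code from the Unruly project).

C = 299_792_458.0  # speed of light in vacuum, metres per second


def distance_from_round_trip(t_seconds: float) -> float:
    """Distance to the target, given the pulse's round-trip time.

    The light travels to the object and back, hence the division by two.
    """
    return C * t_seconds / 2.0


def timing_resolution_for(range_resolution_m: float) -> float:
    """Round-trip timing resolution needed to resolve a given distance."""
    return 2.0 * range_resolution_m / C


if __name__ == "__main__":
    # Timing resolution needed for the ~10 mm figure quoted above.
    dt = timing_resolution_for(0.010)
    print(f"10 mm of range needs about {dt * 1e12:.1f} ps of timing resolution")

    # Assumption: a 16 MHz microcontroller counting whole clock cycles.
    tick = 1.0 / 16e6  # 62.5 ns per cycle
    step = distance_from_round_trip(tick)
    print(f"One 16 MHz clock tick corresponds to about {step:.2f} m of range")
```

By this estimate, 10 mm of range works out to tens of picoseconds of round-trip time, while a single tick of a 16 MHz clock spans several metres. That gap is what the dedicated TDC is there to close.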

It’s a complicated project, requiring the utmost attention to detail to get any results at all. The team behind Unruly have done a great job of both designing and documenting the project. It’s great to see an open source LIDAR package in the wild, giving hackers more options than just the pre-baked commercial modules on the market. We can’t wait to see where the project goes next.

For more on LIDAR, check out last week’s Hackaday podcast – we cover Unruly, as well as a handful of other standout projects in the field.