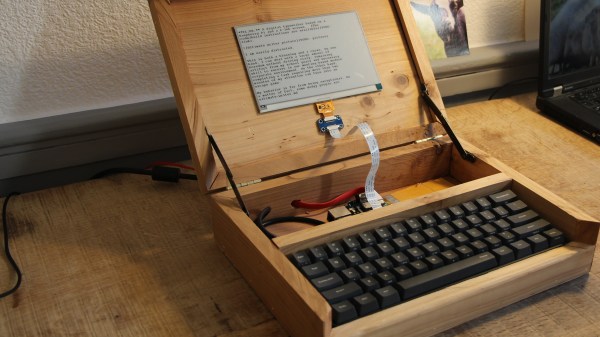

Hacks are often born out of unfortunate circumstances. My unfortunate circumstance was a robbery– the back door of the remodel was kicked in, and a generator was carted off. Once the police report was filed and the door screwed shut, it was time to order cameras. Oh, and record the models and serial numbers of all my tools.

We’re going to use Power over Ethernet (POE) network cameras and a ZoneMinder install. ZoneMinder has a network trigger capability, and we’ll wire some magnetic switches to our network of PXE booting Pis, using those to inform the Zoneminder server of door opening events. Beyond that, many newer cameras support the Open Network Video Interface Forum (ONVIF) protocol and can do onboard motion detection. We’ll use the same script, running on the Pi, to forward those events as well.

Many of you have pointed out that Zoneminder isn’t the only option for open source camera management. MotionEyeOS, Pikrellcam, and Shinobi are all valid options. I’m most familiar with Zoneminder, even interviewing them on FLOSS Weekly, so that’s what I’m using. Perhaps at some point we can revisit this decision, and compare the existing video surveillance systems.

Continue reading “Hack My House: ZoneMinder’s Keeping An Eye On The Place”