Join us on Wednesday, September 29 at noon Pacific for the Robot Dogs Hack Chat with Afreez Gan!

Thanks to the efforts of a couple of large companies, many devoted hobbyists, and some dystopian science fiction, robot dogs have firmly entered the zeitgeist of our “living in the future” world. The quadrupedal platform, with its agility and low center of gravity, is well suited to navigating the real world, where the terrain is rarely even and obstacles are the rule rather than the exception.

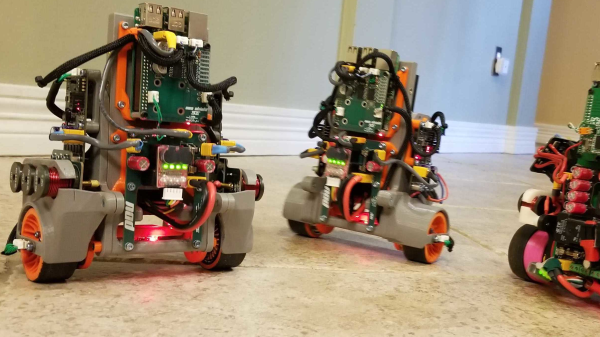

The robot dog has been successful enough that commercial models are now on the market, albeit at prohibitive prices, doing everything from inspecting factory processes and offshore oil platforms to dancing for their dinner. All the publicity around robot dogs has fueled a flood of DIY and open-source versions that let hobbyists take advantage of what the platform has to offer. As a result, the design of these dogs has converged somewhat, with shared elements that provide a common design language for these electromechanical pets.

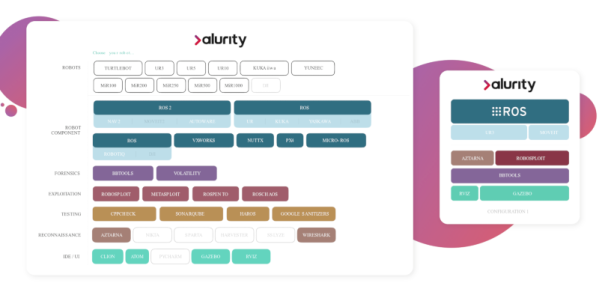

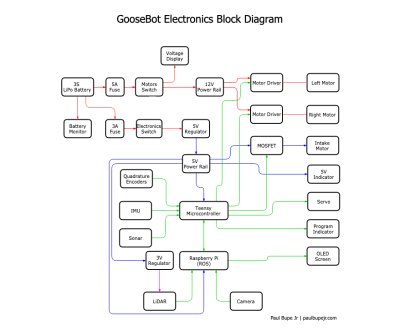

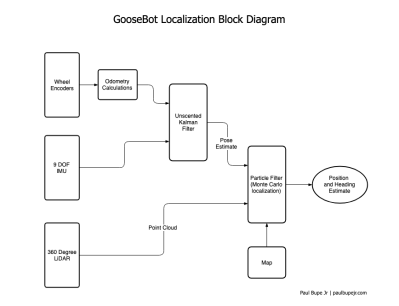

Afreez Gan has been exploring the robot dog space for a while now, and his MiniPupper is generating some interest. He’ll stop by the Hack Chat to talk about MiniPupper specifically and the quadruped platform in general. We’ll talk about what it takes to build your own robot dog, what you can do with one once you’ve built it, and how these bots can play a part in STEM education. Along the way, we’ll touch on ROS, lidar, machine vision with OpenCV, and pretty much anything involved in the care and feeding of your newest electronic pal.

Our Hack Chats are live community events in the Hackaday.io Hack Chat group messaging. This week we’ll be sitting down on Wednesday, September 29 at 12:00 PM Pacific time. If time zones have you tied up, we have a handy time zone converter.