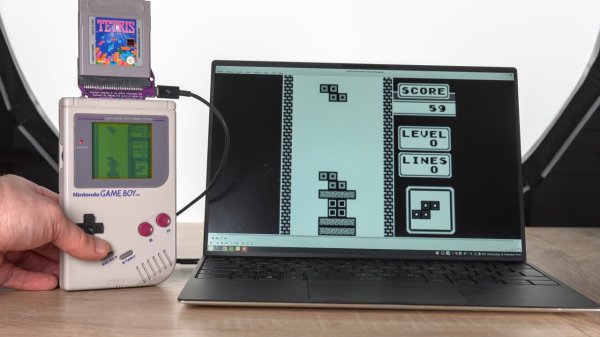

[Sebastian] had a tricky problem to solve. Competitors in a Tetris tournament needed to stream video of their Game Boy screens, but no solution readily existed. For reasons of fairness, emulators were right out, and no modifications could be made to the Game Boys, either. Thus, [Sebastian] created the GB Interceptor, a Game Boy capture cartridge.

Thanks to the design of the Game Boy, there’s plenty of access to useful signals via the cartridge port itself. [Sebastian] realized that a non-invasive capture device could be built to sit between the Game Boy and a cart, and send video to a computer. Unfortunately, there’s no direct access to the video RAM via this port, but [Sebastian] figured out a nifty workaround.

The build uses a Raspberry Pi Pico. The chip’s two cores emulate the Game Boy’s CPU and Picture Processing Unit, respectively. Keeping that emulation in step with what’s going on in the real Game Boy required overclocking the Pico to 225 MHz. The system works by capturing traffic on the cartridge’s memory bus and following along with the instructions being run by the Game Boy. By doing this, the Pico is able to populate its own copy of the video RAM. It then sends this out over USB, where it can be displayed and streamed online as desired.
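To make the "follow along" idea concrete, here is a toy sketch in Python. It is not [Sebastian]'s firmware, and the `ShadowCpu` class and its method names are made up for illustration. It assumes the snooper sees the instruction bytes the Game Boy fetches from the cart, and it handles only a handful of real Game Boy opcodes (`NOP`, `LD A,n8`, `LD HL,n16`, `LD (HL),A`, `LD (HL+),A`). Because the shadow CPU tracks the same register values, it knows what lands in video RAM (0x8000 to 0x9FFF) even though those writes never show up as data on the cartridge port.

```python
VRAM_START, VRAM_END = 0x8000, 0x9FFF  # Game Boy video RAM address range


class ShadowCpu:
    """Hypothetical, heavily simplified mirror of the Game Boy CPU state."""

    def __init__(self):
        self.a = 0    # accumulator
        self.hl = 0   # 16-bit HL register pair
        self.vram = bytearray(VRAM_END - VRAM_START + 1)  # local VRAM copy

    def _store(self, addr, value):
        # Only writes that land in VRAM matter for rebuilding the picture.
        if VRAM_START <= addr <= VRAM_END:
            self.vram[addr - VRAM_START] = value

    def run(self, observed):
        """Replay instruction bytes as they were seen on the cartridge bus."""
        stream = iter(observed)
        for opcode in stream:
            if opcode == 0x00:        # NOP
                continue
            elif opcode == 0x3E:      # LD A,n8
                self.a = next(stream)
            elif opcode == 0x21:      # LD HL,n16 (little-endian operand)
                lo = next(stream)
                hi = next(stream)
                self.hl = (hi << 8) | lo
            elif opcode == 0x77:      # LD (HL),A
                self._store(self.hl, self.a)
            elif opcode == 0x22:      # LD (HL+),A
                self._store(self.hl, self.a)
                self.hl = (self.hl + 1) & 0xFFFF
            else:
                raise NotImplementedError(f"opcode {opcode:#04x} not in this sketch")


# Usage: point HL at the start of VRAM, then write two bytes.
cpu = ShadowCpu()
cpu.run(bytes([0x21, 0x00, 0x80,   # LD HL,0x8000
               0x3E, 0xFF, 0x22,   # LD A,0xFF ; LD (HL+),A
               0x3E, 0x7E, 0x77])) # LD A,0x7E ; LD (HL),A
```

A real implementation has to cover the full instruction set, timing, interrupts, and the Picture Processing Unit as well, which is where the second core and the overclock come in.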

There are some edge-case limitations, but for its intended purpose, the system works great. Currently, the hardware is usable on Linux and Windows, though it does require some fiddling in the latter case. Files are on GitHub for those eager to build their own. If you simply want to dump carts rather than stream from your Game Boy, we can help there, too. Video after the break.
