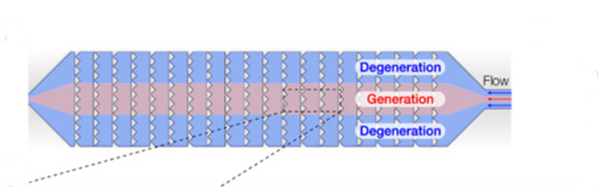

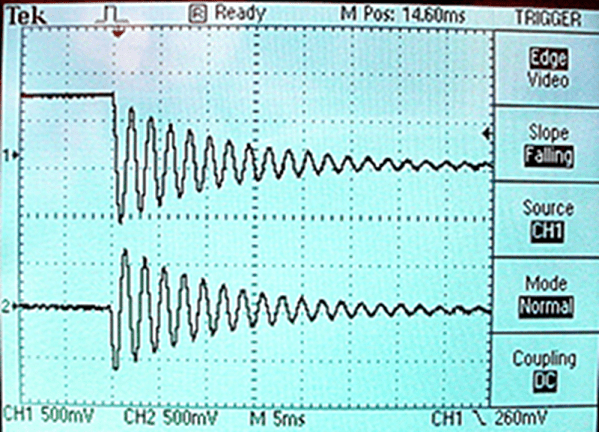

If you are a science fiction fan, you are probably aware of one of the genre’s oddest dichotomies. A lot of science fiction is concerned with whether a robot, alien, or whatever is a person. Yet, sometimes in the same story, finding life is as easy as asking the science officer with a fancy tricorder. If you go to Mars and meet Marvin, it is pretty clear he’s alive, but faced with a bunch of organic molecules, the task is a bit harder. Now it is going to get harder still, because Cornell scientists have created a material that has an artificial metabolism and checks quite a few of the boxes we associate with life. You can read the entire paper if you want more detail.

Three of the things people look for when classifying something as alive are that it has a metabolism, arranges itself, and reproduces. There are other characteristics, depending on who you ask, but those three are pretty crucial.
