Sometimes it’s really useful to watch a project’s parts come together one piece at a time to build a complete mental picture of the whole, and we found that to be the case with this simple, retro-inspired sample game from [ezContents]. (Video, embedded below.) The code is on GitHub, but if you’re at all interested in what goes on behind the scenes in a game like this, don’t miss the video.

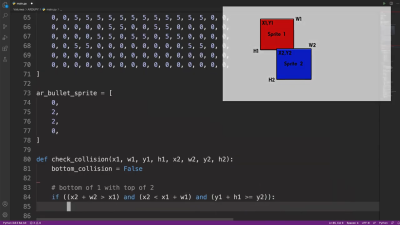

These sprite-based games are mostly about moving a small graphical object (a sprite) around a screen in response to user input, and managing what happens when collisions are detected between the player’s sprite and other sprites like enemies, projectiles, and so forth. The development process is wonderfully documented and demonstrated in the video, with each piece of functionality built and explained in turn.
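To make the move-and-collide idea concrete, here is a minimal sketch in plain Python. It is not taken from [ezContents]’ code: the `Sprite` class, its method names, and the sprite sizes are all hypothetical, and the 320×240 screen size is an assumption based on the Wio Terminal’s LCD. It deliberately avoids any ArduPy drawing or input calls, so it shows only the bookkeeping behind the scenes.

```python
# Illustrative sketch only: Sprite and its methods are hypothetical names,
# not the API used in the original project.

SCREEN_W, SCREEN_H = 320, 240  # assumed screen size in pixels


class Sprite:
    """A rectangular sprite tracked by its top-left corner and size."""

    def __init__(self, x, y, w, h):
        self.x, self.y, self.w, self.h = x, y, w, h

    def move(self, dx, dy):
        # Apply the requested step, then clamp so the sprite stays on screen.
        self.x = max(0, min(self.x + dx, SCREEN_W - self.w))
        self.y = max(0, min(self.y + dy, SCREEN_H - self.h))

    def collides_with(self, other):
        # Axis-aligned bounding-box test: the rectangles overlap only if
        # they overlap on both the x axis and the y axis.
        return (self.x < other.x + other.w and other.x < self.x + self.w and
                self.y < other.y + other.h and other.y < self.y + self.h)


player = Sprite(10, 100, 16, 16)
enemy = Sprite(40, 100, 16, 16)

player.move(8, 0)                    # e.g. joystick pushed right once
hit_after_one_step = player.collides_with(enemy)

player.move(8, 0)                    # pushed right again
hit_after_two_steps = player.collides_with(enemy)

print(hit_after_one_step, hit_after_two_steps)
```

In a real game loop, the `dx` and `dy` values would come from reading the directional switch each frame, and a detected collision would trigger whatever the game calls for, such as losing a life or removing a projectile.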

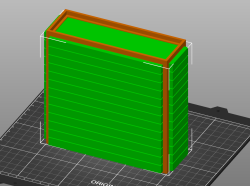

The simple game is written in ArduPy (MicroPython combined with Arduino APIs) and runs on Seeed Studio’s Wio Terminal, a small microcontroller development board with an integrated screen, sensors, and button inputs, including a little directional clicker that [ezContents] uses as a joystick.

The video of the whole process is embedded below; give it a watch and you may come away with inspiration, but you’ll definitely have a much better understanding of how these types of games are developed, even if you’re not using the same hardware or development environment.
