Who among us doesn’t procrastinate from time to time? We can’t count the number of times that we’ve taken advantage of the Post Office staying open until midnight on April 15th. And when the 15th falls on a weekend? Two glorious additional days to put off the inevitable!

If you’ve been sitting on submitting your talk or workshop proposal to the 2018 Hackaday Superconference, we’ve got the next best thing for you: we’re extending the deadline until 5 pm PDT on September 10th.

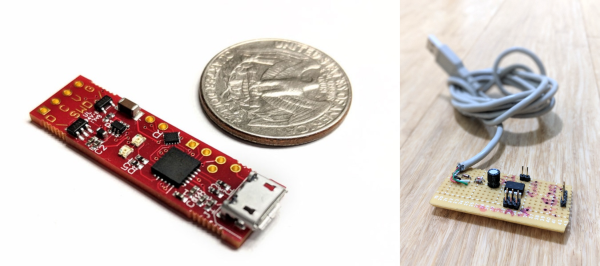

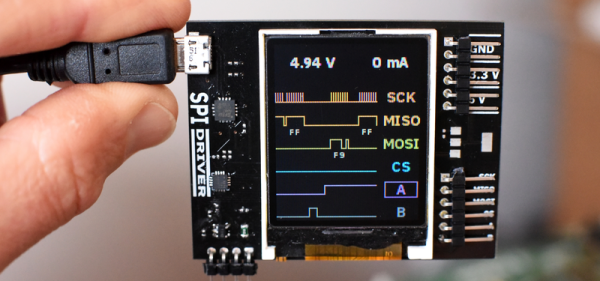

The Hackaday Superconference is a singularity of hardware hackers: more of the best people in the same space at the same time than anywhere else. And that means that your ideas and experiences will be shared with the people most likely to appreciate them. From heroic hacks to creative robotics or untold hardware histories, if there’s a crowd who’ll appreciate how a serial console saved your bacon, it’s this one.

And if you give a talk or workshop, you get in free. But it’s more than that: any convention, even a tight-knit and friendly one like Hackaday’s Supercon, feels different when you’re on the other side of the curtain. Come join us! We’d love to hear what you’ve got to say. And now you’ve got a little more time to tell us.

(If you want to get in the old-fashioned way, tickets are still available, but they won’t be once we announce the slate of speakers. You’ve been warned.)